Wake up! It's HighScalability time:

Do you like this sort of Stuff? I'd greatly appreciate your support on Patreon. Know anyone who needs cloud? I wrote Explain the Cloud Like I'm 10 just for them. It has 39 mostly 5 star reviews. They'll learn a lot and love you forever.

- 2%: of sales spent by consumer packaged goods companies on R&D (14% for tech); 272 million: metric tons of plastic are produced each year around the globe; 100+ fps: Google's Edge TPU; 6,000: bugs per million lines of code; 2.2 GB/sec: SIMD JSON parser; 20-30%: fall in DRAM prices; 8x: Russian hackers faster than North Korean hackers; 50%: EV car sales in China by 2025;

- Quotable Quotes:

- @davygreenberg: If I do a job in 30 minutes it’s because I spent 10 years learning how to do that in 30 minutes. You owe me for the years, not the minutes.

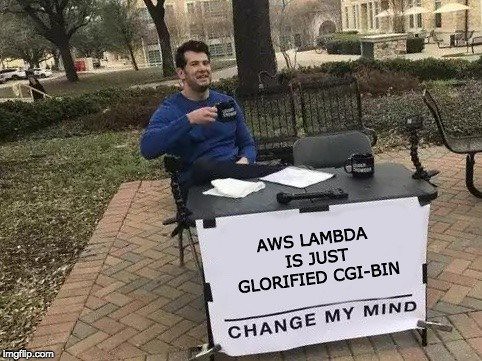

- @PaulDJohnston: Lambda done badly is still better than Kubernetes done well

- Ross Mcilroy: we now believe that speculative vulnerabilities on today's hardware defeat all language-enforced confidentiality with no known comprehensive software mitigations, as we have discovered that untrusted code can construct a universal read gadget to read all memory in the same address space through side-channels. In the face of this reality, we have shifted the security model of the Chrome web browser and V8 to process isolation.

- @ben11kehoe: Statelessness is not the critical property of #serverless compute, it's ephemerality. Being positively limited in duration means the provider can *transparently* manage the platform, no scheduled (or unscheduled, in Fargate's case) downtime needed.

- mark_l_watson: It surprises me how many large companies do not have a ‘knowledge graph strategy’ while everyone is on board with machine learning (which is what I currently do, managing a machine learning team). I would argue that a high capacity, low query latency Knowledge Graph should be core infrastructure for most large companies and knowledge graphs and machine learning are complementary.

- codinghorror: We host thousands of Discourse sites on maybe ~40-50 servers at the moment. So the ratio isn’t that far off, but it is true the .net framework is extremely efficient in ways that Ruby + Rails is … uhhh… not? Postgres has gotten quite competitive with SQL Server in the last decade, as well, so that’s good news, particularly in the “if you have to ask how much the licensing of SQL Server is gonna cost, you can’t afford it” department

- @Jaxbot: The future is now, specifically that Nike didn't QA the *android* version of their Adapt app as thoroughly as their iOS app, and all customers with Android devices now have bricked shoes due to broken firmware update routine.

- Karol Severin: As MIDiA has reported multiple times before, engagement has declined throughout the sector, suggesting that the attention economy has peaked. Consumers simply do not have any more free time to allocate to new attention seeking digital entertainment propositions, which means they have to start prioritising between them.

- Adam Dallis: Microservices architecture on paper sounds amazing but unless the business as a whole is committed to it, your department will end up with low morale, low productivity, and tons of code debt.

- Albert-Laszlo Barabasi~ Innovation has no age. As long as you keep trying, your chances of breaking through at 80 are exactly the same as breaking through in your 20s.

- Spyros Blanas: In collaboration with Oracle researchers, we are investigating a new interface for data-intensive applications, the Remote Direct Memory Operation (RDMO) interface. An RDMO is a short sequence of reads, writes and atomic memory operations that will be transmitted and executed at the remote node without interrupting its CPU, similar to an RDMA operation

- Steve Pawlowski: Everybody is striving for a chip that has 100 TeraOPS of performance. But to get the efficiency of that chip, you must have several things going on simultaneously. This means having to bring data into the chip and get it out of the chip as fast as possible

- Qnovo: But why can’t we just manufacture the perfect battery that will never catch fire? Simply put, it is prohibitively expensive.

- Sean Dorrance Kelly: If we allow ourselves to slip in this way, to treat machine “creativity” as a substitute for our own, then machines will indeed come to seem incomprehensibly superior to us. But that is because we will have lost track of the fundamental role that creativity plays in being human.

- Ben Darnell: This issue demonstrates both the power and limitations of Jepsen as a testing tool. It’s one of the best tools around for finding inconsistencies, and it found a bug that nothing else did. However, it’s only as good as the workloads in the test suite

- Yann LeCun~ In my opinion, the future of AI is self-supervised learning. Today’s chips using multiply-accumulate arrays to process tensors likely won’t be useful for the new kinds of operations that algorithm designers are developing. The good news is that a variety of low-cost, low-power inference accelerators for embedded systems will be the biggest opportunity.

- Mike Orcutt: Just a year ago, this nightmare scenario was mostly theoretical. But the so-called 51% attack against Ethereum Classic was just the latest in a series of recent attacks on blockchains that have heightened the stakes for the nascent industry. In total, hackers have stolen nearly $2 billion worth of cryptocurrency since the beginning of 2017, mostly from exchanges, and that’s just what has been revealed publicly.

- @jeffsussna: DevOps is nothing more nor less than unifying the making of things with the running of things.

- antirez: Basically what happens is that even if you start to be cash positive, you want to get a bigger company, so you need to scale a lot the sales team and the customer support team, so you spend more money in order to end with more customers, not caring about the short term profitability. This is common to most startups basically. Btw the Redis Labs development team at this point is quite large, because Redis open source is the core, but the company produces a number of things in order to provide a good managed Redis experience, all the additional features and modules, and so forth. But even so, I think it is accurate to say that most money in most companies of this kind will go to orchestrate the company at a different level, not just R&D.

- JTTR: Either Apple gets it right with this revision, or ours is one industry that will inevitably move to other machines. There's not that many of us, but Apple's dominance of the creative industries has been a huge part of its marketing appeal to a more general audience. It'll be interesting to see if they'll regret alienating it down the line.

- averiantha: It seems as though modularity gets taken too far sometimes. The BA says 'let's write a new voucher system'. The developer says rather than create a voucher system, let's create a modular service that will handle all our string matching needs via regular expressions. It gets to a point where the service architecture becomes overly convoluted when it doesn't have to be. I think logically microservices make sense, but pragmatically monolithic design is the way to go in a lot of cases.

- Michael Wittig: We learned it the hard way. Scaling container instances is a challenge. That’s why we recommend using Fargate. Fargate is much easier to operate

- codemonkey: Ironic that developers seemed to be shifting away from monoliths on the server side while shifting towards huge monolith web front end projects.

- @QuinnyPig: Further, "your dev environment costs us $400 a month, we need you to spend time getting that under $100" is a generally dumb request to an engineer whose loaded cost is $250K.

- shereadsthenews: The hardware replacement cycle is much longer than you’ve implied. Servers I am building today will still be TCO-positive for 15 years. In 2010 Google data centers would still have been full of their first generation dual-socket Opteron machine.

- Daniel Dennett: One of the disturbing lessons of recent experience is that the task of destroying a reputation for credibility is much less expensive than the task of protecting such a reputation. Wiener saw the phenomenon at its most general: “In the long run, there is no distinction between arming ourselves and arming our enemies.” The information age is also the disinformation age.

- Charlotte Jee: The problem? Dr Genevera Allen, associate professor at Rice University, has warned that the adoption of machine learning techniques is contributing to a growing "reproducibility crisis" in science, where a worrying number of research findings cannot be repeated by other researchers, thus casting doubt on the validity of the initial results. "I would venture to argue that a huge part of that does come from the use of machine learning techniques in science," Dr Allen told the BBC. In many situations, discoveries made this way shouldn’t be trusted until they have been checked, she argued.

- jacquesm: Chips without a public toolchain are not worth investing your time in. It is bad enough if your work is tied to specific hardware for which there may at some point not be a replacement but to not even have the toolchain under your control makes it a negative.

- David Rotman: But what if our pipeline of new ideas is drying up? Economists Nicholas Bloom and Chad Jones at Stanford, Michael Webb, a graduate student at the university, and John Van Reenen at MIT looked at the problem in a recent paper called “Are ideas getting harder to find?” (Their answer was “Yes.”) Looking at drug discovery, semiconductor research, medical innovation, and efforts to improve crop yields, the economists found a common story: investments in research are climbing sharply, but the payoffs are staying constant.

- Iain Cockburn: New methods of invention with wide applications don’t come by very often, and if our guess is right, AI could dramatically change the cost of doing R&D in many different fields. Machine learning could be much faster and cheaper by orders of magnitude.

- @signalapp: We need to get in touch with someone on the Play Store team who can help us resolve a problem. After more than 65 days, we are stuck in a feedback loop and it's getting harder to ship Signal updates. Are you a human at Google? Please email us here: prayforplay@signal.org

- James Hamilton: The Nitro System supports key network, server, security, firmware patching, and monitoring functions freeing up the entire underlying server for customer use. This allows EC2 instances to have access to all cores – none need to be reserved for storage or network I/O. This both gives more resources over to our largest instance types for customer use – we don’t need to reserve resource for housekeeping, monitoring, security, network I/O, or storage. The Nitro System also makes possible the use of a very simple, light weight hypervisor that is just about always quiescent and it allows us to securely support bare metal instance types.

- Animats: There are only a few things that parallelize so well that large quantities of special purpose hardware are cost effective. • 3D graphics - hence GPUs. • Fluid dynamics simulations (weather, aerodynamics, nuclear, injection molding - what supercomputers do all day.) • Crypto key testers - from the WWII Bombe to Bitcoin miners • Machine learning inner loops. That list grows very slowly. Everything on that list was on it by 1970, if you include the original hardware perceptron.

- @dmwlff: We have been looking at FaaS here at Robinhood, but it feels like it's not ready for a company like ours yet. My chief concerns are about threading model and ownership (do we need an oncall for every "function?") But it's super compelling and it sure feels like the future.

- @sokane1: Musk says he thinks the forthcoming custom AI chip Tesla is building for Autopilot is 1000x, maybe even 2000x better than the @nvidia chip the company's current cars use.

- @mattturck: One additional (and unfair) complexity for older founders: they’ve had more time to demonstrate greatness, so the expectation is higher. Conversely, it is much easier to *project* future greatness on a fresh YC grad who hasn’t really done anything yet. cc @laureltouby

- dejv: I did work on optical sorting machine: you have stream of fruits on very fast conveyor belt and then machine vision system scans the passing fruits, detects each object and reject (by firing stream of air) those that don't pass: those can be molded fruits, weird color or foreign material like rocks or leaves. 100 fps might be enough, but faster you go, faster your conveyor belt could be.

- weego: So now we're stuffing sql in our react as well as html and CSS? React is on course to reinvent the PHP that everyone spent so long laughing about

- boulos: That said, I don’t agree that 3 years is a reasonable depreciation period for GPUs for deep learning (the focus of this analysis). If you had purchased a box full of P100s before the V100 came out, you’d have regretted it. Not just in straight price/performance, but also operator time: a 2x speedup on training also yields faster time-to-market and/or more productive deep learning engineers (expensive!). People still use K80s and P100s for their relative price/performance on FP64 and FP32 generic math (V100s come at a high premium for ML and NVIDIA knows it), but for most deep learning you’d be making a big mistake. Even FP32 things with either more memory per part or higher memory bandwidth mean that you’d rather not have a 36-month replacement plan. If you really do want to do that, I’d recommend you buy them the day they come out (AWS launched V100s in October 2017, so we’re already 16 months in) to minimize the refresh regret.

- Backblaze: SSDs are a different breed of animal than a HDD and they have their strengths and weaknesses relative to other storage media. The good news is that their strengths — speed, durability, size, power consumption, etc. — are backed by pretty good overall reliability.

- @ossia: When you’re fundraising, it’s AI. When you’re hiring, it’s ML. When you’re implementing, it’s linear regression. When you’re debugging, it’s printf(). - Baron Schwartz (@xaprb)

- portillo: As an individual, the cheapest and simplest options [to send up a satellite] would be to use Nanoracks, Spaceflight, or a similar company that handles most of the launch process. Their price is ~$100k for a 1U Cubesat. I have serious doubts that they would be OK with a 3x3x3 cm satellite, as these are really hard to track and they are big enough that, if there is a collision, they can cause some serious damage.

- Freeman Dyson: Nature's tool for the creation and support of a rich diversity of wildlife is the species, for example the half million species of beetle that astonished Darwin, produced in abundance by the rapid genetic drift of small populations according to Kimura, and in even greater abundance by the rapid mutation of mating-system genes according to Goodenough. In the near future, we will be in possession of genetic engineering technology which allows us to move genes precisely and massively from one species to another.

- George Church: If robots don’t have exactly the same consciousness as humans, then this is used as an excuse to give them different rights, analogous to arguments that other tribes or races are less than human. The line between human and machines blurs, both because machines become more humanlike and humans become more machinelike.

- George Dyson: There are three laws of artificial intelligence. The first states that any effective control system must be as complex as the system it controls. The second law, articulated by John von Neumann, states that the defining characteristic of a complex system is that it constitutes its own simplest behavioral description. The third law states that any system simple enough to be understandable will not be complicated enough to behave intelligently, while any system complicated enough to behave intelligently will be too complicated to understand. The third law offers comfort to those who believe that until we understand intelligence, we need not worry about superhuman intelligence arising among machines. But there is a loophole in the third law. It is entirely possible to build something without understanding it. You don’t need to fully understand how a brain works in order to build one that works.

- Eric Tune: A pattern I have seen is role specialization within ops teams, which can bring efficiencies. Some members specialize in operating the Kubernetes cluster itself, what I call a “Cluster Operations” role, while others specialize in operating a set of applications (microservices). The clean separation between infrastructure and application – in particular the use of Kubernetes configuration files as a contract between the two groups – supports this separation of duties.

- Jeff Williams: And I know sensors are noisy, so I have a lot of experience with this. But I wasn't ready for the fact that motors are a flux field of evilness when you have a magnetic rotary encoder. And a simple PID control loop isn't going to cut it because, depending on where the motor shaft is, and what the flux field is in the inductor, the magnetic environment is totally changing. So I think it's a classic example of things seem really simple, like, "How hard could it be? It's a motor, and it's a cutter, and it's a sensor." And then you open it up and it's like, "Oh, it's the natural world and physics applies, and now I have to solve this crazy problem, which mostly got solved by switching the algorithm."

- James Urquhart: Ford’s distinction is one that finally helps me articulate a key concern I’ve had with respect to Platform-as-a-Service tools for some time now. In my mind, there are primarily two classes of PaaS systems on the market today (now articulated in Ford’s terms). One class is contextual PaaS systems, in which a coding framework is provided, and code built to that framework will gain all of the benefits of the PaaS with little or no special configuration or custom automation. The other is composable PaaS, in which the majority of benefits of the PaaS are delivered as components (including operational automation) that can be assembled as needed to support different applications.

- Carl Hewitt: I disagreed with Tony Hoare about using synchronous communication as the primitive because it is too slow for both IoT and many-core chips. Instead, the primitive for communication should be asynchronous sending and receiving, from which more complex protocols can be constructed. I disagreed with Joe Armstrong about requiring use of external mailboxes because they are inefficient in both space and time. Instead of requiring an external mailbox for each Actor, buffering/reordering/scheduling should be performed inside an Actor as required.

- Colin Breck: I think, which has already been mentioned, as these systems become more complex, especially at scale, I think we're going to start to take a more empirical approach to answering these kind of questions. It won't be like, “Is the system working or is it not?” Because it's always going to be partially broken, and we'll operate these systems a lot more like we would in the physical world, actually. It'll look a lot more like operating the oil refinery or something like this, like a physical process. And we'll take empirical approaches, actually, to determining the health of the system.

- Not all clouds are created equal. Comparing the Network Performance of AWS, Azure and GCP: AWS has less performance stability in Asia; GCP is 3x slower from Europe to India; Inter-AZ performance is between .5ms - 1.5ms; AWS, Azure, and GCP peer directly with each other - negligible packet loss and jitter, traffic does not leave their backbones; Cloud trend - monetization of the backbone, with Global Accelerator AWS charges more to ride the AWS backbone instead of the internet; Inter-AZ performance is reliable and consistent, which indicates a robust regional backbone for redundant multi-AZ architectures; Comparable end user performance in North America and Western Europe.

- It cost a mere $2000 to induce an NBA ref to fix a game. Given the mostly invisible influence of all the algorithms that control our lives these days, what's the risk of throwing algorithms?

- We're cutting plywood here. Great use of machine learning in the real world. Circular Saw Kickback Killer.

- A circular saw kicks back when material pinches the blade. Within a 10th of a second the saw wants to jump out of your hands like a bucking bronco stuck with a red hot poker. It's scary as hell. What the video shows is an invention combining machine learning and 9 sensors (accelerometer, gyro, magnetometer) to detect the leading edge of when a saw starts to kick back. On kickback detection the saw immediately stops. A stopped saw is a safer saw. Brilliant! The sensor thresholds were not programmed, they were learned using data gathered from hundreds of saws. Why don't saw makers already do this? Because change is rarely made by insiders.

- In this example we see the bargain the future will make with us. If we give up our privacy what we will get back is better and safer devices. Imagine a saw maker installing sensors that continually send telemetry data back home. All that data could be used to improve the functionality and safety of their entire line. Isn't that a good deal? The price is all the things we "own" will spy on us—for the greater good. The data could be anonymized, but you know the data will also be used to automatically send new blades when the old blades wear out, or recommend a new saw if the duty cycle of their current saw is being exceeded, or a dozen other things I hope I'm incapable of imagining. It's a complicated world.

- At the end of the video they mentioned making a version for chain saws. The same sort of pinch and kickback scenario happens with chain saws too. While I don't often use circular saws, I do use chain saws, so this would be great. Worth enough for my chain saw to spy on me? I'm not sure, but I am sure future generations will be far more open to the bargain. They won't even frame it as a bargain. It will just be how the world works.

- Fascinating conjecture about how serverless could lead to more hardware specialization, and performance is always gained by specialization. @schrockn: 13/ Later in paper: "Alas, the x86 microprocessors that dominate the cloud are barely improving in performance. In 2017, single program performance improvement only 3%". Serverless enabled constrained environments, which could allow for hardware specialization. @nerdguru: The hardware specialization part of this is super interesting. For ~70 years the tech industry had been based on general purpose hardware but that’s changing with things like AWS Nitro and Netflix caching cabinets. More of this to come, I think. gizmo686: Parallelization is not the only reason to pursue specialized hardware. The real benefit of specialized hardware is that it can do one thing very well. Most of what a CPU spends its energy on is deciding what computation to do. If you are designing a circuit that only ever does one type of computation you can save vast amounts of energy.

- Google has big plans for CSP [Cloud Services Platform] to commoditize your complements. Urs Hölzle: It’s not AWS versus Azure versus GCP versus Alibaba versus IBM. These are not stacks. These are clouds. And the two stacks have not emerged fully, but we certainly believe in the open source one that is emerging. And you can argue that in the Windows base, it has emerged with Azure Stack. Those are the two most likely to last for the next 20 years. And I would argue that CSP has a good chance to be the majority choice in those next 20 years just because Linux has grown quite a bit along with the whole open source ecosystem, and CSP plays right into that ecosystem...this is really a software stack and our goal is to win and be the default software stack for the next 20 years. That’s really what we want to do. You know, we want everyone to conclude that this is as safe a choice as Linux.

- Want fine grained control over the execution of your serverless functions? Then you need to run your own infrastructure. In this case it's Fission.io. Four Techniques Serverless Platforms Use to Balance Performance and Cost.

- You can imagine the cloud as a giant switchboard over which calls are routed. Early on calls traveled circuitous routes. As the cloud evolves the routing topology shrinks and we're able to link calls together with less and less routing needed. We're closing in on the age of the cloud patch panel, where we can just wire services directly together. For example, you can access AWS services directly using API Gateway without going through that universal call router called Lambda. Connect AWS API Gateway directly to SNS using a service integration. You can use AWS service integration to connect HTTP endpoints directly to other AWS services, which allows you to skip your custom compute layer altogether. For example, you can send the body of an HTTP request directly into an AWS SNS topic. Or on Kinesis you can create a stream, delete a stream, or list all streams. The win: you don’t have to write or pay for the execution of code whose sole job is to forward data from a client to a downstream service. You can connect these two directly.
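
To make the "patch panel" idea concrete, here is a minimal sketch of what the settings for an API Gateway to SNS service integration might look like, expressed as a plain Python dictionary. The function name `sns_publish_integration` is hypothetical, and the key names, URI format, and mapping template are assumptions modeled on API Gateway integration settings; check them against the current AWS documentation before relying on them. Nothing here calls AWS.

```python
def sns_publish_integration(region, topic_arn, role_arn):
    """Build illustrative settings for an API Gateway 'AWS' integration
    that forwards the HTTP request body to an SNS topic.

    Assumption: the keys mirror API Gateway integration settings; this
    function only builds a dictionary and never talks to AWS.
    """
    # The mapping template turns the incoming body into an SNS Publish
    # request; $util.urlEncode and $input.body are template variables
    # that API Gateway expands, not Python expressions.
    template = (
        "Action=Publish"
        f"&TopicArn=$util.urlEncode('{topic_arn}')"
        "&Message=$util.urlEncode($input.body)"
    )
    return {
        "type": "AWS",  # service integration, no Lambda in the path
        "integrationHttpMethod": "POST",
        "uri": f"arn:aws:apigateway:{region}:sns:path//",
        "credentials": role_arn,  # role API Gateway assumes to publish
        "requestParameters": {
            "integration.request.header.Content-Type":
                "'application/x-www-form-urlencoded'",
        },
        "requestTemplates": {"application/json": template},
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    settings = sns_publish_integration(
        "us-east-1",
        "arn:aws:sns:us-east-1:123456789012:demo-topic",
        "arn:aws:iam::123456789012:role/demo-apigw-sns",
    )
    for key, value in settings.items():
        print(key, "=", value)
```

The point of the sketch is that the forwarding logic lives entirely in configuration: there is no function to deploy, scale, or pay for per invocation.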

- The lessons you draw usually depend on the examples you choose. Here are Lessons from 6 software rewrite stories. Netflix, Basecamp, Visual Studio & VS Code, Gmail & Inbox, FogBugz & Trello, FreshBooks & BillSpring. The meta lesson: Once you’ve learned enough that there’s a certain distance between the current version of your product and the best version of that product you can imagine, then the right approach is not to replace your software with a new version, but to build something new next to it — without throwing away what you have.

- Change your algorithm, change the exploitable infrastructure niches. Massively Parallel Hyperparameter Optimization on AWS Lambda: I’m excited to share a hyperparameter optimization method we use at Bustle to train text classification models on AWS Lambda incredibly quickly: an implementation of the recently released Asynchronous Successive Halving Algorithm paper...The chart above shows our implementation of ASHA running with 300 parallel workers on Lambda, tuning fastText models with 11 hyperparameters on the ag_news data set and reaching state-of-the-art precision within a few minutes (seconds?). We also show a comparison with AWS SageMaker tuning blazingtext models with 10 params: the Lambda jobs had basically finished by the time SageMaker returned its first result! This is not surprising, given that SageMaker has to spin up EC2 instances and is limited to a maximum of 10 concurrent jobs, whereas Lambda had access to 300 concurrent workers. Default limits for Lambda on left, SageMaker on right: Not exactly a fair fight. This really highlights the power that Lambda has: you can deploy in seconds, spin up literally thousands of workers and get results back in seconds, and only get charged for the nearest 100ms of usage. SageMaker took 25 mins to complete 50 training runs at a concurrency of 10, even though each training job took a minute or less, so the startup/processing overhead on each job isn’t trivial, and even then it still wasn’t getting close to approaching the same accuracy as ASHA on Lambda.
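
For readers unfamiliar with the idea ASHA builds on, here is a minimal sketch of plain synchronous successive halving. It is not the asynchronous variant from the paper and not Bustle's implementation; the function names, the `eta` reduction factor, and the toy scoring function are all assumptions chosen for illustration.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Synchronous successive halving.

    Evaluate every surviving config at the current budget, keep the top
    1/eta of them, multiply the budget by eta, and repeat until a single
    config remains. Returns (best_config, history), where history lists
    (budget, number_of_configs_evaluated) for each rung.
    """
    survivors = list(configs)
    budget = min_budget
    history = []
    while len(survivors) > 1:
        history.append((budget, len(survivors)))
        # Higher score is better; in a real system each evaluation
        # would be one parallel worker (e.g. one Lambda invocation).
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0], history


def toy_score(config, budget):
    """Hypothetical objective: best at lr == 0.1, ignores the budget."""
    return -abs(config["lr"] - 0.1)


if __name__ == "__main__":
    candidates = [{"lr": lr} for lr in (0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0)]
    best, history = successive_halving(candidates, toy_score)
    print("best:", best)
    print("rungs (budget, configs):", history)
```

The asynchronous variant described in the quote removes the barrier at the end of each rung: a worker promotes a configuration as soon as it ranks in the top fraction of what has been seen so far, which is what lets hundreds of Lambda workers stay busy.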

- Is Google innovation ossifying? Manish Rai Jain on Why Google Needed a Graph Serving System.

- During this shuffle, Dgraph was considered too complex by management who supported Spanner, a globally distributed SQL database which needs GPS clocks to ensure global consistency. The irony of this is still mind-boggling. This meant that the new serving system would not be able to do any deep joins.

- A curse of a decision that I see in many companies because engineers start with the wrong idea that “graphs are a simple problem that can be solved by just building a layer on top of another system.”

- Google’s inability to do deep joins is visible in many places. For one, we still don’t see a marriage of the various data feeds of OneBoxes: [cities by most rain in asia] does not produce a list of city entities despite that weather and KG data are readily available (instead, the result is a quote from a web page); [events in SF] cannot be filtered based on weather; [US presidents] results cannot be further sorted, filtered, or expanded to their children or schools they attended. I suspect this was also one of the reasons to discontinue Freebase.

- I saw how averse companies were to graph databases because of the perception that they are "not reliable." So, we built Dgraph with the same concepts as Bigtable and Google Spanner, i.e. horizontal scalability, synchronous replication, ACID transactions, etc. Once built, we engaged Kyle and got Jepsen testing done on Dgraph.

- throwawaygoog10: I'm sorry your efforts failed internally. Our infrastructure is somewhat ossified these days: the new and exotic are not well accepted. Other than Spanner (which is still working to replace Bigtable), I can't think of a ton of really novel infrastructure that exists now and didn't when you were around. I mean, we don't even do a lot of generic distributed graph processing anymore. Pregel is dead, long live processing everything on a single task with lots of RAM. mrjn: As Google is growing, there are many T7s around. So, you really need T8 and above to actually get something out of the door, when it comes to impacting web search. RestlessMind: And that has backing from Jeff Dean. Which underscores point mentioned elsewhere in this thread and TFA - with right kind of political backing, you can do wonders.

- Heavy Networking 430: The Future Of Networking With Guido Appenzeller. 15-20% of enterprise workloads are running in public clouds right now. The cloud is not a zero sum game. The budget for public clouds comes out of business units so you don't see a reduction in IT. The overall effect of IT being easier to consume is the expansion of IT. IT is not shrinking, the cloud is just growing faster. The way cloud applications are being built is diverging away from how on-prem applications are being built. The future will be VMs (secure) with a container interface (convenient).

- A Useful Coordination Pattern: Here’s the pattern in pseudo-code:
  - Initialize the appendable data structure: A
  - Append to it a unique identifier: I
  - Loop until the data structure has a specific length/size: L
  - While looping, make sure our unique identifier I is still in A and re-add it if it's not
  - Do the rest of the work

  The above works because the unique identifiers and the length/size of the data structure guarantee that we will converge to a stable state without losing data. It is a little bit like a CRDT because we avoid any conflicts by making sure the data is additive after an initial period of instability.
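
The pseudo-code above can be sketched in Python roughly as follows. This is an in-memory illustration under stated assumptions: `AppendableList` is a hypothetical stand-in for whatever shared appendable store the original author had in mind, threads stand in for separate processes, and distinct identifiers are counted so that a re-added identifier is not counted twice.

```python
import threading
import time
import uuid


class AppendableList:
    """Hypothetical shared, append-only structure (stand-in for a remote store)."""

    def __init__(self):
        self._items = []
        self._lock = threading.Lock()

    def append(self, item):
        with self._lock:
            self._items.append(item)

    def snapshot(self):
        with self._lock:
            return list(self._items)


def coordinate(shared, expected_size, max_rounds=10_000, pause=0.001):
    """Join the group and wait until expected_size distinct members are present."""
    my_id = str(uuid.uuid4())          # I: our unique identifier
    shared.append(my_id)               # append I to A
    for _ in range(max_rounds):        # loop until A reaches size L
        members = set(shared.snapshot())
        if my_id not in members:
            # Our entry was lost (cannot happen with this in-memory
            # stand-in, but can with an unstable store): re-add it.
            shared.append(my_id)
            continue
        if len(members) >= expected_size:
            return my_id, sorted(members)   # stable: do the rest of the work
        time.sleep(pause)
    raise TimeoutError("group never reached the expected size")


def run_workers(n):
    """Start n workers that coordinate through one shared structure."""
    shared = AppendableList()
    results = []
    threads = [
        threading.Thread(target=lambda: results.append(coordinate(shared, n)))
        for _ in range(n)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    for worker_id, members in run_workers(3):
        print(worker_id, "sees", len(members), "members")
```

Every worker ends up with the same membership view, which is the "stable state" the pattern is after; what the workers do with that shared view is left to the application.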

- The one second problem. How do you drill down on your system and visualize what happens in less than a second? Brendan Gregg created subsecond offset heat maps for just that purpose. Cloud Performance Root Cause Analysis at Netflix (slides). Imagine a graph made up of column slices where each column is one second. The color depth can mean something like how many CPUs were busy for the time range. Time is on both axes. There are about 50 rows from top to bottom. Each pixel is about 20 msecs. You can now look at patterns within a second or patterns that spread across the visualization. He goes through an example of how you use the graph to tell that only one thread is active on a CPU, so there might be a global lock being held. He then goes through more examples of how you can use flame graphs to diagnose problems. Though don't you now have a 20 msec problem?
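
The bucketing behind such a heat map is simple enough to sketch. The following is an illustration of the layout described above (one column per second, about 50 rows of 20 ms each), not Brendan Gregg's tooling; the function name and the choice of integer millisecond timestamps are assumptions.

```python
from collections import Counter


def subsecond_offset_buckets(timestamps_ms, rows=50):
    """Count events per heat-map cell.

    timestamps_ms: event times as integer milliseconds.
    Returns a Counter keyed by (column, row), where column is the whole
    second the event fell in and row is its offset within that second,
    in slices of 1000 / rows milliseconds (20 ms for 50 rows).
    """
    slice_ms = 1000 // rows
    cells = Counter()
    for ts in timestamps_ms:
        column = ts // 1000               # x axis: which second
        row = (ts % 1000) // slice_ms     # y axis: offset inside that second
        cells[(column, row)] += 1
    return cells


if __name__ == "__main__":
    sample = [0, 19, 20, 999, 1000, 1010, 2500]
    for cell, count in sorted(subsecond_offset_buckets(sample).items()):
        print(cell, count)
```

Mapping each cell's count to a color gives the heat map: a horizontal stripe means something happens at the same offset every second, while a vertical stripe means one busy second.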

- The “strict serializability” guarantee eliminates the possibility of the system returning stale/empty data. Serializability vs “Strict” Serializability: The Dirty Secret of Database Isolation Levels: The gold standard in isolation is “serializability”. A system that guarantees serializability is able to process transactions concurrently, but guarantees that the final result is equivalent to what would have happened if each transaction was processed individually, one after other (as if there were no concurrency)...The first limitation of serializability is that it does not constrain how the equivalent serial order of transactions is picked...The second (but related) limitation of serializability is that the serial order that a serializable system picks to be equivalent to doesn’t have to be at all related to the order of transactions that were submitted to the system...Strict serializability adds a simple extra constraint on top of serializability. If transaction Y starts after transaction X completes (note that this means that X and Y are, by definition, not concurrent), then a system that guarantees strict serializability guarantees both (1) final state is equivalent to processing transactions in a serial order and (2) X must be before Y in that serial order.
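
The extra constraint is easy to state in code. This sketch checks only condition (2), the real-time ordering, for a proposed serial order; it does not check condition (1), that the final state matches that order. `Txn` and `respects_real_time_order` are hypothetical names, and the start/end times are assumed to come from a single global clock.

```python
from typing import NamedTuple


class Txn(NamedTuple):
    name: str
    start: float  # time the transaction was submitted
    end: float    # time the transaction completed


def respects_real_time_order(serial_order, txns):
    """True if every X that completed before Y started also precedes Y in the order."""
    position = {name: i for i, name in enumerate(serial_order)}
    for x in txns:
        for y in txns:
            # X and Y are not concurrent, yet the serial order puts Y first.
            if x.end < y.start and position[x.name] > position[y.name]:
                return False
    return True


txns = [Txn("X", 0.0, 1.0), Txn("Y", 2.0, 3.0), Txn("Z", 0.5, 2.5)]
print(respects_real_time_order(["X", "Z", "Y"], txns))  # allowed under strict serializability
print(respects_real_time_order(["Y", "X", "Z"], txns))  # only plain serializability allows this
```

Z overlaps both X and Y, so it may land anywhere in the order. Only the X-before-Y pair is pinned down, which is exactly the stale-read case that plain serializability permits.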

- You can always count on lots of numbers and pictures in a Jeff Atwood post. The Cloud Is Just Someone Else's Computer. Colocating a mini-PC costs $2,044 for three years of hosting. A similar machine on Digital Ocean would be $5,760. So you are spending almost three times as much for a cloud server. Of course the comparison is apples and oranges. In colocation there are no higher level managed services that shield you from the hardships and vicissitudes of the underlying infrastructure. It's just a box you must manage totally. Something Jeff can do without breaking a sweat, but it's not something most people can do or even want to do. Cloud users are also paying a premium for optionality. You can walk away from a cloud resource. Those colocated machines are yours forever. Also, Tesla V100 Server: On-Prem vs AWS Cost Comparison and the inevitable thread debating how to calculate TCO.

- The golden age of pirating is in front of us. I just added up how much in subscriptions I would need to pay to access the content I want to watch when I want to watch it—$1 billion. Maybe someone can bundle content together somehow? We'll call it cable. Will there be other downstream consequences? Add friction, make something hard to consume, people will consume less of it. Games might be the big winner here.

- Still no Linux on the desktop. IBM researchers predict 5 innovations will change our lives in 5 years: Farming’s digital doubles will help feed a growing population using fewer resources; Blockchain will reduce food waste; Mapping the microbiome will protect us from bad bacteria; AI sensors will detect foodborne pathogens at home; VolCat will change plastics recycling.

- Who’s Down with CPG, DTC? (And Micro-Brands Too?). Consumer packaged goods companies have become like big pharma: incapable of innovation, they must buy innovation to stay competitive. Which also sounds a lot like the end state for giant tech companies like Cisco.

- Space, the final frontier. Little Free Radio Firmware. Libre Space Foundation. Crowd-sourced satellite operations. The first open source satellite. Planet Money Goes to Space. Spacefab. STANDARD MATERIALS AND PROCESSES REQUIREMENTS FOR SPACECRAFT. Kubos - Flight software framework for satellites.

- Reliable Microservices Data Exchange With the Outbox Pattern: The idea of this approach is to have an "outbox" table in the service’s database. When receiving a request for placing a purchase order, not only an INSERT into the PurchaseOrder table is done, but, as part of the same transaction, also a record representing the event to be sent is inserted into that outbox table. The record describes an event that happened in the service. An asynchronous process monitors that table for new entries. If there are any, it propagates the events as messages to Apache Kafka. This gives us a very nice balance of characteristics: By synchronously writing to the PurchaseOrder table, the source service benefits from "read your own writes" semantics. A subsequent query for purchase orders will return the newly persisted order, as soon as that first transaction has been committed. At the same time, we get reliable, asynchronous, eventually consistent data propagation to other services via Apache Kafka.
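
A small sketch of the outbox idea using Python's built-in `sqlite3`. The table layout, the function names, and the `publish` callback (a stand-in for a Kafka producer) are all assumptions made for illustration, and the relay here simply polls the table, which may differ from how the original article watches for new entries.

```python
import json
import sqlite3


def init_db(conn):
    conn.execute(
        "CREATE TABLE purchase_order ("
        "id INTEGER PRIMARY KEY, item TEXT NOT NULL, quantity INTEGER NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE outbox ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, event_type TEXT NOT NULL, payload TEXT NOT NULL)"
    )
    conn.commit()


def place_order(conn, item, quantity):
    """Insert the order and its event in ONE transaction: both commit or neither does."""
    with conn:  # commits on success, rolls back if an exception is raised
        cur = conn.execute(
            "INSERT INTO purchase_order (item, quantity) VALUES (?, ?)", (item, quantity)
        )
        order_id = cur.lastrowid
        payload = json.dumps({"order_id": order_id, "item": item, "quantity": quantity})
        conn.execute(
            "INSERT INTO outbox (event_type, payload) VALUES (?, ?)",
            ("OrderCreated", payload),
        )
    return order_id


def relay_outbox(conn, publish):
    """Asynchronous side: forward pending events, then remove them from the outbox."""
    rows = conn.execute("SELECT id, payload FROM outbox ORDER BY id").fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))  # hypothetical stand-in for sending to Kafka
        with conn:
            conn.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
    return len(rows)


conn = sqlite3.connect(":memory:")
init_db(conn)
order_id = place_order(conn, "widget", 2)
sent = []
relay_outbox(conn, sent.append)
print(order_id, sent)
```

Because an event is deleted only after `publish` returns, a crash between the two steps would resend it on the next pass. This sketch therefore gives at-least-once delivery, and consumers would need to tolerate duplicates.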

- apache/incubator-openwhisk-composer: Composer is a new programming model for composing cloud functions built on Apache OpenWhisk. With Composer, developers can build even more serverless applications including using it for IoT, with workflow orchestration, conversation services, and devops automation, to name a few examples.

- Service fabric: a distributed platform for building microservices in the cloud: We describe Service Fabric (SF), Microsoft's distributed platform for building, running, and maintaining microservice applications in the cloud. SF has been running in production for 10+ years, powering many critical services at Microsoft. This paper outlines key design philosophies in SF. We then adopt a bottom-up approach to describe low-level components in its architecture, focusing on modular use and support for strong semantics like fault-tolerance and consistency within each component of SF. We discuss lessons learned, and present experimental results from production data.

- Non-Volatile Memory Database Management Systems: This book explores the implications of non-volatile memory (NVM) for database management systems (DBMSs). The advent of NVM will fundamentally change the dichotomy between volatile memory and durable storage in DBMSs. These new NVM devices are almost as fast as volatile memory, but all writes to them are persistent even after power loss