The email sent will contain a link to this article, the article title, and an article excerpt (if available). For security reasons, your IP address will also be included in the sent email.

This is a guest post by Benjamin Manes, who did engineery things for Google and is now doing engineery things for a new load documentation startup, LoadDocs.

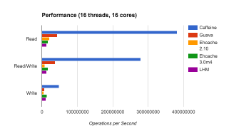

Caching is a common approach for improving performance, yet most implementations use strictly classical techniques. In this article we will explore the modern methods used by Caffeine, an open-source Java caching library, that yield high hit rates and excellent concurrency. These ideas can be translated to your favorite language and hopefully some readers will be inspired to do just that.

Eviction Policy

A cache’s eviction policy tries to predict which entries are most likely to be used again in the near future, thereby maximizing the hit ratio. The Least Recently Used (LRU) policy is perhaps the most popular due to its simplicity, good runtime performance, and a decent hit rate in common workloads. Its ability to predict the future is limited to the history of the entries residing in the cache, preferring to give the last access the highest priority by guessing that it is the most likely to be reused again soon...

Return to Article

Return to Article