Stuff The Internet Says On Scalability For March 18th, 2016

Friday, March 18, 2016 at 8:56AM

- 500 petabytes: data stored in Dropbox; 8.5 kB: amount of drum memory in an IBM 650; JavaScript: most popular programming language in the world (OMG); $20+ billion: Twitch in 2020; Two years: time it took to fill the Mediterranean;

- Quotable Quotes:

- Dark Territory: The other bit of luck was that the Serbs had recently given their phone system a software upgrade. The Swiss company that sold them the software gave U.S. intelligence the security codes.

- Alec Ross~ The principal political binary of the 20th century is left versus right. In the 21st century the principal political binary is open versus closed. The real tension, both inside and outside countries, is between those that embrace more open economic, political and cultural systems and those that are more closed. Looking forward to the next 20 years, the states and societies that are more open are those that will compete and succeed more effectively in tomorrow's industries.

- @chrismaddern: "Population size: 1. Facebook 2. China 🇨🇳 3. India 🇮🇳 4. Whatsapp 5. WeChat 6. Instagram 7. USA 🇺🇸 8. Twitter 9. Indonesia 🇮🇩 10. Snapchat"

- @grayj_: Assuming you've already got reasonable engineering infrastructure in place, "add RAM or servers" is waaay cheap vs. engineering hours.

- @qntm: "I love stateless systems." "Don't they have drawbacks?" "Don't what have drawbacks?"

- jamwt: [Dropbox] Performance is 3-5x better at tail latencies [than S3]. Cost savings is.. dramatic. I can't be more specific there. Stability? S3 is very reliable, Magic Pocket is very reliable. I don't know if we can claim to have exceeded anything there yet, just because the project is so young, and S3's track record is long. But so far so good. Size? Exabytes of raw storage. Migration? Moving the data online was very tricky!

- Facebook: because we have a large code base and because each request accesses a large amount of data, the workload tends to be memory-bandwidth-bound and not memory-capacity-bound.

- fidget: My guess is that it's pretty much just BigQuery. No one else seems to be able to compete, and that's a big deal. The companies moving their analytics stacks to BQ and thus GCP probably make up the majority (in terms of revenue) of customers for GCP

- outside1234: There is no exodus [from AWS]. There are a lot of companies moving to multi-cloud, which makes sense from a disaster recovery perspective, a negotiating perspective, and possibly from cherry picking the best parts of each platform. This is what Apple is doing. They use AWS and Azure already in large volume. This move adds the #3 vendor in cloud to mix and isn't really a surprise.

- @mjpt777: At last AMD might be getting back in the game with a 32 core chip and 8 channels of DDR4 memory. http://www.

- phoboslab: Can someone explain to me why traffic is still so damn expensive with every cloud provider? A while back we managed a site that would serve ~700 TB/mo and paid about $2,000 for the servers in total (SQL, Web servers and caches, including traffic). At Google's $0.08/GB pricing we would've ended up with a whopping $56,000 for the traffic alone. How's that justifiable?

- Joe Duffy: First and foremost, you really ought to understand what order of magnitude matters for each line of code you write.

- mekanikal_keyboard: AWS has allowed a generation of developers to focus on their ideas instead of visiting a colo at 3am

- @susie_dent: 'Broadcast' first meant the widespread scattering of seeds rather than radio signals. And the 'aftermath' was the new grass after harvest.

- @CodeWisdom: "Simplicity & elegance are unpopular because they require hard work & discipline to achieve & education to be appreciated." - Dijkstra

- @vgr: In fiction, a 750 page book takes 5x as long to read as 150 page book. In nonfiction, 25x. Ergo: purpose of narrative is linear scaling.

- @danudey: and yes, for us having physical servers for 2x burst capacity is cheaper than having AWS automatically scaling to our capacity.

- @grayj_: "How do you serve 10M unique users per month" CDN, caching, minimize dynamics, horizontal scaling...also what's really hard is peak users.

- @cutting: "Improve BigQuery ingestion times 10x by using Avro source format"

- jamwt: Both companies control the technology that most impacts their business. Dropbox stores quite a bit more data than Netflix. Data storage is our business. Ergo, we control storage hardware. On the other hand, Netflix pushes quite a bit more bandwidth than almost everyone, including us. Ergo, Netflix tightly manages their CDN.

- james_cowling: We [Dropbox] were pushing over a terabit of data transfer at peak, so 4-5PB per day.

- Dark Territory: communications between Milosevic and his cronies, many of them civilians. Again with the assistance of the NSA, the information warriors mapped this social network, learning as much as possible about the cronies themselves, including their financial holdings. As one way to pressure Milosevic and isolate him from his power base, they drew up a plan to freeze his cronies’ assets.
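phoboslab's traffic complaint above is easy to check. A minimal sketch, assuming decimal units (1 TB = 1,000 GB) and a single flat $0.08/GB rate; real provider pricing is tiered by volume and region, so treat this as a rough estimate. `monthly_egress_cost` is a hypothetical helper:

```python
def monthly_egress_cost(tb_per_month: float, usd_per_gb: float) -> float:
    """Flat-rate egress cost in USD for one month of traffic.

    Assumes decimal units (1 TB = 1,000 GB) and no volume tiers.
    """
    gb_per_month = tb_per_month * 1_000
    return gb_per_month * usd_per_gb


traffic = monthly_egress_cost(700, 0.08)  # ~700 TB/mo at $0.08/GB
servers = 2_000                           # quoted all-in server bill, USD/mo
print(f"traffic ${traffic:,.0f}/mo vs. servers ${servers:,}/mo "
      f"({traffic / servers:.0f}x)")
```

That works out to $56,000 a month for traffic alone, 28 times the quoted server bill.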

- The Epic Story of Dropbox’s Exodus From the Amazon Cloud Empire. Why? Follow the money. Dropbox operates at a scale where they can get “substantial economic value” by moving. And it's an opportunity to specialize. Dropbox is big enough now that they can go the way of Facebook and Google and build custom versions of almost everything. Dropbox: designed a sweeping software system that would allow Dropbox to store hundreds of petabytes of data...and store it far more efficiently than the company ever did on Amazon S3...Dropbox has truly gone all-in...created its own software for its own needs...designed its own computers...each Diskotech box holds as much as a petabyte of data, or a million gigabytes...The company was installing forty to fifty racks of hardware a day...in the middle of this two-and-a-half-year project, they switched to Rust (away from Go). Excellent discussion on Hacker News.

- So you are saying there's a chance? AlphaGo defeats Lee Sedol 4–1. From a battling Skynet perspective it's comforting to remember any AI has an exploitable glitch somewhere in its training. Those neural nets are very complex functions and we know complexity by its very nature is unstable. We just need to create AIs that can explore other AIs for patches in their neural net that can be exploited. Like bomb sniffing dogs.

- Here's a cool game that doesn't even require a computer. Do These Liquids Look Alive? Be warned, it involves a lot of tension, but that's just touching the surface of all the fun you can have.

- 10M Concurrent Websockets: Using a stock debian-8 image and a Go server you can handle 10M concurrent connections with low throughput and moderate jitter if the connections are mostly idle...This is a 32-core machine with 208GB of memory...At the full 10M connections, the server's CPUs are only at 10% load and memory is only half used.
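The quoted numbers imply a per-connection memory cost. A back-of-envelope sketch, assuming decimal gigabytes and charging all used memory to the connections, so the result is an upper bound rather than a measurement; `bytes_per_connection` is a hypothetical helper:

```python
def bytes_per_connection(total_gb: float, used_fraction: float, conns: int) -> float:
    """Upper-bound memory per connection: all used RAM divided by open connections."""
    used_bytes = total_gb * 1e9 * used_fraction  # decimal GB -> bytes
    return used_bytes / conns


# 208 GB machine, about half the memory in use at 10M connections.
per_conn = bytes_per_connection(208, 0.5, 10_000_000)
print(f"~{per_conn / 1e3:.1f} kB per connection")
```

Roughly 10 kB per mostly idle connection, including whatever the Go runtime and kernel buffers account for.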

- Yes, hyperscalers are different than the rest of us. Facebook's new front-end server design delivers on performance without sucking up power: Given a finite power capacity, this trend was no longer scalable, and we needed a different solution. So we redesigned our web servers to pack more than twice the compute capacity in each rack while maintaining our rack power budget. We also worked closely with Intel on a new processor to be built into this design.

- A good example of how Google makes the most out of all their compute resources by deploying an active-active design rather than a resource-wasting active-passive design. Google shares software network load balancer design powering GCP networking: Maglev load balancers don't run in active-passive configuration. Instead, they use Equal-Cost Multi-Path routing (ECMP) to spread incoming packets across all Maglevs, which then use consistent hashing techniques to forward packets to the correct service backend servers, no matter which Maglev receives a particular packet. All Maglevs in a cluster are active, performing useful work.
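The post only says Maglev uses "consistent hashing techniques." The Maglev paper describes a lookup-table scheme: each backend gets its own permutation of the table slots, and backends take turns claiming their next preferred free slot, so every Maglev that builds the table from the same backend list ends up with the same table. A minimal sketch of that population step, assuming SHA-256-derived hashes and a prime table size; the helper names and the tuple used as a flow key are hypothetical:

```python
from __future__ import annotations

import hashlib


def _hash(key: str, salt: str) -> int:
    # Hypothetical hash choice; any independent hash functions would do.
    digest = hashlib.sha256((salt + key).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def maglev_table(backends: list[str], size: int = 65537) -> list[int]:
    """Map each of `size` slots to a backend index.

    `size` must be prime so each backend's skip value visits every slot.
    """
    if not backends:
        raise ValueError("need at least one backend")
    offsets = [_hash(b, "offset") % size for b in backends]
    skips = [_hash(b, "skip") % (size - 1) + 1 for b in backends]
    next_pref = [0] * len(backends)
    table = [-1] * size
    filled = 0
    while True:
        # One round: each backend claims its next preferred free slot.
        for i in range(len(backends)):
            slot = (offsets[i] + next_pref[i] * skips[i]) % size
            while table[slot] >= 0:
                next_pref[i] += 1
                slot = (offsets[i] + next_pref[i] * skips[i]) % size
            table[slot] = i
            next_pref[i] += 1
            filled += 1
            if filled == size:
                return table


def pick_backend(table: list[int], backends: list[str], flow: tuple) -> str:
    # Hash the packet's flow tuple to a slot; any Maglev holding the same
    # table picks the same backend for it.
    return backends[table[_hash(repr(flow), "flow") % len(table)]]
```

Because backends claim slots in turns, their shares of the table differ by at most one slot.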

- There's money in controlling both sides of a market. See the Moments You Care About First. Instagram is moving to an algorithmic feed.

- Isn't this backwards? Why Haven't We Met Aliens Yet? Because They've Evolved into AI. If you've transcended couldn't you package your brain in some sort of vessel and launch a lightweight version of yourself out into the universe? Those are exactly the beings we would be seeing.

- Yahoo with a good look at how APIs can work at scale. Elements of API design: Delivering a flawless NFL experience: During the 4 hours of the NFL game, the API platform fielded over 215 million calls, with a median latency of 11ms, and a 95th percentile latency of 16ms. The APIs showed six 9s of availability during this time period, despite failure of dependencies during spikes in the game.
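Figures like "median 11ms, 95th percentile 16ms" and "six 9s" are easy to compute from raw data. A minimal sketch, assuming the nearest-rank percentile definition (monitoring systems differ, and some interpolate) and integer call counts; both helpers are hypothetical:

```python
from __future__ import annotations

import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def error_budget(calls: int, nines: int) -> int:
    """Most failed calls allowed at N nines of availability (6 -> 99.9999%)."""
    return calls // 10**nines


print(error_budget(215_000_000, 6))  # 215
```

At six 9s, 215 million calls leave room for at most 215 failures.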

- You got that right. It's nuts. The Deep Roots of Javascript Fatigue: I recently jumped back into frontend development for the first time in months, and I was immediately struck by one thing: everything had changed.

- But if you just have to frontend here's an awesome resource: State of the Art JavaScript in 2016. It provides a curated list of technology choices with satisfying explanations for why they were chosen. The drum roll please: Core library: React; Application life cycle: Redux; Language: ES6 with Babel. No types (yet); Linting & style: ESLint with Airbnb; Dependency management: It’s all about NPM, CommonJS and ES6 modules; Build tool: Webpack; Testing: Mocha + Chai + Sinon; Utility library: Lodash is king, but look at Ramda; and more.

- We don't need no stinkin' developers. Twitter shut down Emojitracker's access.

- Our schizophrenic present. Amazon Echo, home alone with NPR on, got confused and hijacked a thermostat.

- Why did Dropbox use Rust instead of Go? jamwt: The reasons for using rust were many, but memory was one of them...Primarily, for this particular project, the heap size is the issue. One of the games in this project is optimizing how little memory and compute you can use to manage 1GB (or 1PB) of data. We utilize lots of tricks like perfect hash tables, extensive bit-packing, etc. Lots of odd, custom, inline and cache-friendly data structures. We also keep lots of things on the stack when we can to take pressure off the VM system. We do some lockfree object pooling stuff for big byte vectors, which are common allocations in a block storage system...In addition to basic memory reasons, saving a bit of CPU was a useful secondary goal, and that goal has been achieved...The advantages of Rust are many. Really powerful abstractions, no null, no segfaults, no leaks, yet C-like performance and control over memory and you can use that whole C/C++ bag of optimization tricks.
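To make "extensive bit-packing" concrete, here is a minimal sketch that packs three small fields into one 64-bit word. The field names and widths are hypothetical, chosen only for illustration; this is not Dropbox's format, and it is Python rather than Rust:

```python
from __future__ import annotations

# Hypothetical layout: 24-bit volume id | 36-bit byte offset | 4 flag bits.
VOLUME_BITS, OFFSET_BITS, FLAG_BITS = 24, 36, 4
assert VOLUME_BITS + OFFSET_BITS + FLAG_BITS == 64


def pack(volume: int, offset: int, flags: int) -> int:
    """Pack three fields into one 64-bit integer, rejecting values that overflow."""
    for value, bits in ((volume, VOLUME_BITS), (offset, OFFSET_BITS), (flags, FLAG_BITS)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{value} does not fit in {bits} bits")
    return (volume << (OFFSET_BITS + FLAG_BITS)) | (offset << FLAG_BITS) | flags


def unpack(word: int) -> tuple[int, int, int]:
    """Inverse of pack: mask and shift each field back out."""
    flags = word & ((1 << FLAG_BITS) - 1)
    offset = (word >> FLAG_BITS) & ((1 << OFFSET_BITS) - 1)
    volume = word >> (OFFSET_BITS + FLAG_BITS)
    return volume, offset, flags
```

In Rust the packed word would be a plain `u64`, while a struct holding a `u32`, a `u64` and a `u8` would typically be padded to 16 bytes, so packing halves the footprint per entry.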

- What a great story. A modern version of The Great Train Robbery. The Incredible Story Of How Hackers Stole $100 Million From The New York Fed: As it turns out there is much more to the story, and as Bloomberg reports today now that this incredible story is finally making the mainstream, there is everything from casinos, to money laundering and ultimately a scheme to steal $1 billion from the Bangladeshi central bank.

- Here's a good explanation of how NTP works. Meet the Guy Whose Software Keeps the World’s Clocks in Sync. Didn't think about how IoT might bring the whole thing down.
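At the heart of NTP is a four-timestamp exchange: the client notes when it sends a request, the server notes when it receives it and when it replies, and the client notes when the reply arrives. A minimal sketch of the standard offset and round-trip delay calculation, assuming the network path is symmetric (any asymmetry shows up as offset error); the sample timestamps are made up:

```python
def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> tuple:
    """Clock offset and round-trip delay from one NTP-style exchange.

    t0: client transmit (client clock)   t1: server receive (server clock)
    t2: server transmit (server clock)   t3: client receive (client clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2  # how far the server is ahead of the client
    delay = (t3 - t0) - (t2 - t1)         # round trip minus server processing time
    return offset, delay


# Made-up exchange: client runs 2 s behind, 50 ms each way, 10 ms at the server.
offset, delay = ntp_offset_and_delay(100.00, 102.05, 102.06, 100.11)
print(f"offset {offset:.3f} s, delay {delay:.3f} s")
```

The client learns it is about 2 s behind the server, over a path with about 100 ms of round-trip delay.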

- A heartwarming use of big data. Global Fishing Watch claims success in big data approach to fighting illegal catches: big data analyses of satellite tracking data for fishing ships can be used to monitor fishing activity, and to spot illegal catches in marine protected areas.

- And here's a less heartwarming use of big data. JPMorgan Algorithm Knows You’re a Rogue Employee Before You Do: We’re taking technology that was built for counter-terrorism and using it against human language, because that’s where intentions are shown.

- With Overcast 2.5 patrons can upload their own files to play in Overcast. This is the beauty of having a recurring revenue model. It means you can actually do things that cost money, like upload files to S3, store them, and then download them to stream into the app. The design is interesting too. Files are uploaded directly to S3 and streamed directly from S3, which saves a lot of load on Overcast servers. The upload feature is also a high value add calculated to encourage users to become patrons.

- In the early days, the always-fighting-the-last-war US military didn't think much of cyber warfare precisely because it didn't involve traditional tropes like soldiers, tanks, and bullets. That's now all changed of course. So the answer is yes. Can There Be War Without Soldiers? But it won't be like that Star Trek episode where people off themselves in a simulated war. The damage will be very real.

- doxcf434: We've been doing tests in GCE in the 60-80k core range. What we like: slightly lower latency to end users in USA and Europe than AWS; faster image builds and deployment times than AWS; fast machines, and live migration blackouts are getting better too; per-minute billing (after 10 mins), and lower rates for continued use vs. AWS RIs, where you need to figure out your usage up front; projects make it easy to track costs w/o having to write scripts to tag everything like in AWS, though the downside is that project discovery is hard since there's no master account.

- Lessons Learned From A Year Of Elasticsearch In Production: 1. Watch Your Thread Pools; 2. Ensure You Have An Adequate Heap Size But Leave Plenty Of Off-heap Memory Too; 3. Use Doc Values Liberally; 4. Use SSDs; 5. Turn On Slow Query Logging and Regularly Review It.

- Some great data structure posts: Leapfrog Probing and A Resizable Concurrent Map. And How does perf work? (in which we read the Linux kernel source).

- A wonderful (and disputed) exploration of How to do distributed locking. You get some in-depth musings on Redis as well. Conclusion: I think the Redlock algorithm is a poor choice because it is “neither fish nor fowl”: it is unnecessarily heavyweight and expensive for efficiency-optimization locks, but it is not sufficiently safe for situations in which correctness depends on the lock.
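The article's alternative for correctness-critical locks is the fencing token: the lock service hands out a number that increases with every grant, and the storage service refuses writes carrying a number lower than one it has already accepted. A minimal sketch, with hypothetical class names, that does not model lease expiry itself:

```python
import itertools


class LockService:
    """Hypothetical lock service: every grant carries a strictly increasing token."""

    def __init__(self):
        self._tokens = itertools.count(1)
        self.holder = None

    def acquire(self, client: str) -> int:
        self.holder = client
        return next(self._tokens)


class FencedStorage:
    """Hypothetical storage that rejects writes fenced by a stale token."""

    def __init__(self):
        self.highest_token = 0
        self.data = {}

    def write(self, key: str, value: str, token: int) -> bool:
        if token < self.highest_token:
            return False  # an older lock holder, e.g. one that stalled past its lease
        self.highest_token = token
        self.data[key] = value
        return True


locks, storage = LockService(), FencedStorage()
token_a = locks.acquire("client-a")   # client-a then stalls past its lease
token_b = locks.acquire("client-b")   # lease lapsed, so client-b gets the lock
storage.write("file", "written by b", token_b)
accepted = storage.write("file", "written by a", token_a)  # stale token
```

The late write from client-a is turned away because storage has already seen token 2, which is exactly the safety property the article says Redlock alone cannot provide.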

- Joe Armstrong goes all Yoda. Managing two million web servers: "We do not have ONE web-server handling 2 million sessions. We have 2 million webservers handling one session each. The reason people think we have one webserver handling a couple of million users is because this is the way it works in a sequential web server. A server like Apache is actually a single webserver that handles a few million connections. In Erlang we create very lightweight processes, one per connection and within that process spin up a web server. So we might end up with a few million web-servers with one user each. If we can accept say 20K requests/second - this is equivalent to saying we can create 20K webservers/second." Keep in mind Erlang processes are not the same as OS processes.
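Python has no Erlang processes, but asyncio tasks give a rough feel for the one-lightweight-server-per-session model. A minimal sketch, assuming an in-memory queue per session as a stand-in for an Erlang mailbox; unlike Erlang processes, these tasks share one OS thread and are scheduled cooperatively rather than preemptively, and the function names are hypothetical:

```python
import asyncio


async def session(session_id: int, inbox: asyncio.Queue, results: dict) -> None:
    """One 'server' per session: private state, fed only through its own inbox."""
    handled = 0
    while True:
        msg = await inbox.get()
        if msg is None:  # hypothetical shutdown signal
            break
        handled += 1
    results[session_id] = handled


async def run_sessions(n_sessions: int, msgs_per_session: int) -> dict:
    results: dict = {}
    inboxes = [asyncio.Queue() for _ in range(n_sessions)]
    tasks = [asyncio.create_task(session(i, q, results)) for i, q in enumerate(inboxes)]
    for q in inboxes:
        for m in range(msgs_per_session):
            q.put_nowait(f"request {m}")
        q.put_nowait(None)
    await asyncio.gather(*tasks)
    return results
```

Each session keeps its own counter and never touches another session's state, which is the property Armstrong is describing.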

- Here's Getting More Performance Out of Kafka Consumer. Some discoveries: Cache is our rare resource; Java hash map is a dependable cache miss generator; A data structure that uses x in main memory can use 4x in cache, while a different data structure for the same function that uses y in cache can use +1000y in main memory; Efficiency is better than speed at the level of a component service, if what you want is speed at the level of the complete app

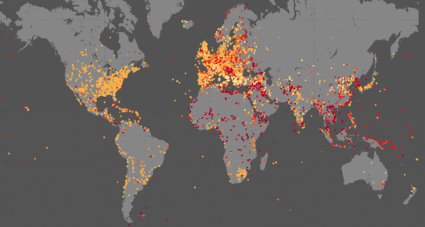

- Our metrics just aren't keeping up with new economic realities. Digital globalization: The new era of global flows: Remarkably, digital flows—which were practically nonexistent just 15 years ago—now exert a larger impact on GDP growth than the centuries-old trade in goods, according to a new McKinsey Global Institute (MGI) report, Digital globalization: The new era of global flows.

- Thanks For the Memories: Touring the Awesome Random Access of Old: Like a hard drive, a drum memory was a rotating surface of ferromagnetic material. Where a hard drive uses a platter, the drum uses a metal cylinder. A typical drum had a number of heads (one for each track) and simply waited until the desired bit was under the head to perform a read or write operation. A few drums had heads that would move over a few tracks, a precursor to a modern disk drive that typically has one head per surface.
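Waiting for the desired bit to come around means drum access time is set by rotation speed. A worked sketch of the arithmetic, assuming requests arrive at a uniformly random point in the rotation so the average wait is half a revolution; the 12,000 rpm figure is hypothetical, not a spec for any particular drum:

```python
def rotational_latency_ms(rpm: float) -> tuple:
    """Return (time per revolution, average wait) in milliseconds."""
    revolution_ms = 60_000 / rpm   # 60,000 ms per minute
    return revolution_ms, revolution_ms / 2


rev, avg = rotational_latency_ms(12_000)   # hypothetical drum speed
print(f"{rev} ms per revolution, {avg} ms average wait")
```

The same half-revolution rule still governs rotational latency in modern hard drives.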

- RRAM vendor inks deal with semi foundry: I’ve been a fan of RRAM – resistive random access memory – for years. It is much superior to NAND flash as a storage medium, except for cost, density and industry production capacity. Hey, you can’t have everything!

- If you learn better by implementing something in code then here's how to learn probability using Python. A Concrete Introduction to Probability (using Python). It's from Peter Norvig. Enough said.
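The classical starting point for this kind of introduction: with equally likely outcomes, the probability of an event is the number of favorable outcomes divided by the number of possible outcomes. A minimal sketch in that spirit (not code copied from the notebook), using exact fractions; `P`, `D` and `even` are illustrative names:

```python
from fractions import Fraction


def P(event: set, space: set) -> Fraction:
    """Probability of an event within a space of equally likely outcomes."""
    return Fraction(len(event & space), len(space))


D = {1, 2, 3, 4, 5, 6}   # one fair die
even = {2, 4, 6}
print(P(even, D))        # 1/2
```

Using `Fraction` instead of floats keeps answers exact, so 1/3 stays 1/3 rather than 0.333...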

- Twitter goes into great detail about their distributed storage system. Strong consistency in Manhattan.

- An Unprecedented Look into Utilization at Internet Interconnection Points: One of the most significant findings from the initial analysis of five months of data, from October 2015 through February 2016, is that aggregate capacity is roughly 50% utilized during peak periods (and never exceeds 66% for any individual participating ISP, as shown in the figure below). Moreover, aggregate capacity at the interconnects continues to grow to offset the growth of broadband data consumption.

- Erasure Coding in Windows Azure Storage: In this paper we introduce a new set of codes for erasure coding called Local Reconstruction Codes (LRC). LRC reduces the number of erasure coding fragments that need to be read when reconstructing data fragments that are offline, while still keeping the storage overhead low. The important benefits of LRC are that it reduces the bandwidth and I/Os required for repair reads over prior codes, while still allowing a significant reduction in storage overhead.
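The repair savings come from local groups. A minimal sketch of just that idea, assuming 6 data fragments split into two local groups of 3, each protected by a plain XOR parity; real LRC also adds global parities computed over a Galois field, which this omits, and the helpers are hypothetical:

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def local_parities(fragments: list[bytes], group_size: int):
    """Split data fragments into local groups and compute one XOR parity per group."""
    groups = [fragments[i:i + group_size] for i in range(0, len(fragments), group_size)]
    return groups, [xor_bytes(g) for g in groups]


def repair(groups, parities, group_idx: int, lost_idx: int):
    """Rebuild one lost fragment from its own group only; also return the read count."""
    survivors = [f for j, f in enumerate(groups[group_idx]) if j != lost_idx]
    reads = survivors + [parities[group_idx]]
    return xor_bytes(reads), len(reads)


data = [bytes([i] * 4) for i in range(6)]          # six 4-byte data fragments
groups, parities = local_parities(data, group_size=3)
rebuilt, reads = repair(groups, parities, group_idx=1, lost_idx=2)  # lose data[5]
```

The repair touches 3 fragments; a Reed-Solomon code spanning all 6 data fragments would need to read 6 to rebuild one.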

- MacroBase: Analytic Monitoring for the Internet of Things: a data analytics engine that performs analytic monitoring of IoT data streams. MacroBase implements a customizable pipeline of outlier detection, summarization, and ranking operators. For efficient and accurate execution, MacroBase implements several cross-layer optimizations across robust estimation, pattern mining, and sketching procedures, allowing order-of-magnitude speedups. As a result, MacroBase can analyze up to 1M events per second on a single core. On GitHub.
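MacroBase's outlier detection leans on robust estimation, meaning statistics that a few extreme points can't drag around. The post doesn't say which estimators it uses; as an illustration of the idea, here is a minimal sketch using the median absolute deviation (MAD), with the 3.0 cutoff and the sensor readings as assumptions:

```python
from __future__ import annotations

import statistics


def mad_outliers(values: list[float], threshold: float = 3.0) -> list[int]:
    """Indices whose robust z-score (distance from median in scaled MADs) exceeds threshold."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []
    # 1.4826 scales MAD to estimate the standard deviation of normal data.
    return [i for i, v in enumerate(values) if abs(v - med) / (1.4826 * mad) > threshold]


readings = [20.1, 19.8, 20.3, 20.0, 19.9, 35.0, 20.2]   # hypothetical sensor data
print(mad_outliers(readings))                            # [5]
```

A mean and standard deviation would be pulled toward the 35.0 spike; the median and MAD barely move, so the spike stands out clearly.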
