What do you believe now that you didn't five years ago? Centralized wins. Decentralized loses.

Wednesday, August 22, 2018 at 8:57AM

Decentralized systems will continue to lose to centralized systems until there's a driver requiring decentralization to deliver a clearly superior consumer experience. Unfortunately, that may not happen for quite some time.

I say unfortunately because ten years ago, even five years ago, I still believed decentralization would win. Why? For all the idealistic technical reasons I laid out long ago in Building Super Scalable Systems: Blade Runner Meets Autonomic Computing In The Ambient Cloud.

While the internet and the web are inherently decentralized, mainstream applications built on top do not have to be. Typically, applications today—Facebook, Salesforce, Google, Spotify, etc.—are all centralized.

That wasn't always the case. In its early days the internet was protocol driven, decentralized, and often distributed: FTP (1971), Telnet (<1973), FINGER (1971/1977), TCP/IP (1974), UUCP (late 1970s), NNTP (1986), DNS (1983), SMTP (1982), IRC (1988), HTTP (1990), Tor (mid-1990s), Napster (1999), XMPP (1999), and SETI@home (1999).

We do have new decentralized services: Bitcoin (2009), Minecraft (2009), Ethereum (2014), IPFS (2015), Mastodon (2016), Dat (2018), and PeerTube (2018). We're still waiting on Pied Piper to deliver the decentralized internet.

On an evolutionary timeline decentralized systems are neanderthals; centralized systems are the humans. Neanderthals came first. Humans may have interbred with neanderthals, humans may have even killed off the neanderthals, but there's no doubt humans outlasted the neanderthals.

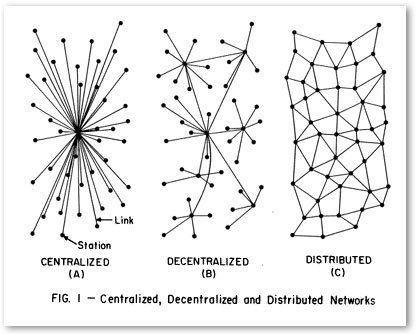

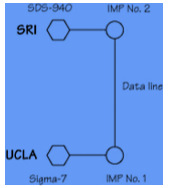

The reason why decentralization came first is clear from a picture of the very first ARPA (Advanced Research Projects Agency) network, which later evolved into the internet we know and sometimes love today:

Everyone had a vision of the potential for intercomputer communication, but no one had ever sat down to construct protocols that could actually be used. It wasn’t BBN’s job to worry about that problem. The only promise anyone from BBN had made about the planned-for subnetwork of IMPs was that it would move packets back and forth, and make sure they got to their destination. It was entirely up to the host computer to figure out how to communicate with another host computer or what to do with the messages once it received them. This was called the “host-to-host” protocol.

-- Where Wizards Stay Up Late

All that existed were hosts talking directly to each other over a primitive network. Centralization didn't exist. TCP/IP didn't exist. Nothing we take for granted today existed.

This fit the design goals. The early internet was all about sharing data:

Taylor had been the young director of the office within the Defense Department’s Advanced Research Projects Agency overseeing computer research, and he was the one who had started the ARPANET. The project had embodied the most peaceful intentions—to link computers at scientific laboratories across the country so that researchers might share computer resources.

...

Building a network as an end in itself wasn’t Taylor’s principal objective. He was trying to solve a problem he had seen grow worse with each round of funding. Researchers were duplicating, and isolating, costly computing resources. Not only were the scientists at each site engaging in more, and more diverse, computer research, but their demands for computer resources were growing faster than Taylor’s budget. Every new project required setting up a new and costly computing operation.

...

And none of the resources or results was easily shared. If the scientists doing graphics in Salt Lake City wanted to use the programs developed by the people at Lincoln Lab, they had to fly to Boston.

-- Where Wizards Stay Up Late

Back in those days of high adventure hosts were far more than mere pets, they were golden temples where crusaders came to worship speaking prayers of code.

Today, servers aren't even cattle, servers are insects connected over fast networks. Centralization is not only possible now, it's economical, it's practical, it's controllable, it's governable, it's economies of scalable, it's reliable, it's walled gardenable, it's monetizable, it's affordable, it's performance tunable, it's scalable, it's cacheable, it's securable, it's defensible, it's brandable, it's ownable, it's right to be forgettable, it's fast releasable, it's debuggable, it's auditable, it's iterable, it's easier to usable, it's easier to onboardable, it's copyright checkable, it's GDPRable, it's safe for China searchable, it's machine learnable, it's monitorable, it's spam filterable, it's value addable.

Depending on your point of view, decentralization is few of those things. And many of those "features" are exactly why we like decentralization in the first place.

What's more, consumers simply do not care. Users use. Only a small percentage have the technical sophistication to understand why they might prefer decentralized applications for technical reasons. Saying "It's like X, but decentralized" does not resonate, especially when the services are not as good. We had decentralized Slack way before Slack...yet there's Slack. You know it's bad when GitHub managed to recentralize an inherently distributed system like git.

There are certainly niche reasons to use decentralized systems, permissionless anonymity being the primary use case. Can you trust the likes of Facebook or Google? History says absolutely not. But most people don't care.

What might constitute a turning point back to decentralization? I can think of several:

1. Complete deterioration of trust such that avoiding the centralization of power becomes a necessity.

2. Radically cheaper cost basis.

3. It becomes fashionable.

4. The decentralization community manages to create clearly superior applications as convenient and reliable as centralized providers.

5. Geographical isolation.

6. Neanderthals live alongside humans. Parallel, separate, not worrying about who's equal.

(1) Seems more possible than I'd like to admit. See China's Digital Dystopia.

(2) Still on the horizon. Cloud computing will follow the same downward cost curves as everything else.

(3) We'll have to get the Kardashians on that.

(4) Will be difficult. Iteratively improving decentralized applications is, by its very nature, like herding cats. It's much easier to add features to centralized applications. Sure, Napster was a great way to share music, but isn't Spotify simply better? Yes, I know, Spotify can shut down tomorrow and then where are we? I'm with you. But most aren't.

(5) The problem is the earth is too small. Global centralized applications are buildable today, something I missed completely. When we go to space that won't be the case. Applications in the space age will have to redecentralize...at least until the ansible is invented.

(6) We have genetic material from the neanderthals. We can rebuild them. We have the technology. This time maybe it's enough that neanderthals survive alongside humans, not going extinct, ready for the time when humans need a fresh infusion of genetic material...or when neanderthals become alpha.

So that's what I believe now that I didn't five years ago. How about you?

Related Articles

- On Hacker News. It wasn't my intention to spark a decentralization vs. centralization debate. I was actually interested in what people no longer believe. Anyone?

- Centralized Vs Distributed Systems Publishing And Owning Our Own Content.

- ssbc/patchwork

- Google Finds: Centralized Control, Distributed Data Architectures Work Better Than Fully Decentralized Architectures

- Definitions of centralized, decentralized, distributed, federated are here.

Reader Comments (15)

Centralized is easier and faster to develop, so it shines brighter... but it also burns out sooner. Decentralized is much more robust and resilient, so it tends to stay forever (even if it runs out of users at some point, anyone can resurrect it in the future). They're just different stages of a given concept.

Can you define what you mean by centralised vs decentralised? Amazon's and Google's architectures would seem to be decentralised; isn't that how they scale?

arendtio has a good definition https://news.ycombinator.com/item?id=17695808:

Here is my shot at defining various terms in this context:

- Centralized: Centralized networks have one central point which controls the network. That doesn't have to be a single server; sometimes it just means that one company controls the network (e.g. WhatsApp).

- Decentralized: Is the opposite of 'centralized' meaning there is more than one central point. So all following types are 'decentralized'.

- Distributed: In general terms, it means that the network is (more or less evenly) distributed upon all participants. All participants have the same role and responsibility. There are various kinds of distributed networks. Git for example stores a full copy of all information in every node. Distributed Hash Tables use a different approach where every node is responsible for one explicit part of the information to store.

- Peer-to-peer (p2p): Is one form of a distributed network, which works (in general) without servers. So all the participants connect directly to each other (e.g. Tox).

- Federated: Is sometimes called a 'distributed network of centralized networks'. Two popular examples are e-mail and XMPP. All participants use their own centralized server, but that server cooperates with other servers to transfer messages across the network. Sometimes their implementations make a distinction between client-to-server and server-to-server protocols.
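To make the two "distributed" storage styles in the definitions above concrete, here is a minimal Python sketch. It is an illustration only, not how Git or any real DHT is implemented: the class names `FullReplica` and `ToyDHT`, the node names, and the hash-ring placement rule are all assumptions made for this example.

```python
import hashlib
from bisect import bisect_left


def ring_position(name: str) -> int:
    """Map a node name or key to a position on a hash ring (hypothetical helper)."""
    return int.from_bytes(hashlib.sha256(name.encode()).digest()[:8], "big")


class FullReplica:
    """Git-like style: every node keeps a full copy of all information."""

    def __init__(self, node_names):
        self.storage = {name: {} for name in node_names}

    def put(self, key, value):
        # Every participant stores every key.
        for store in self.storage.values():
            store[key] = value


class ToyDHT:
    """DHT-like style: each node is responsible for one slice of the key space."""

    def __init__(self, node_names):
        self.ring = sorted((ring_position(name), name) for name in node_names)
        self.positions = [pos for pos, _ in self.ring]
        self.storage = {name: {} for name in node_names}

    def owner(self, key):
        # The first node at or after the key's position owns it; wrap around at the end.
        index = bisect_left(self.positions, ring_position(key)) % len(self.ring)
        return self.ring[index][1]

    def put(self, key, value):
        self.storage[self.owner(key)][key] = value

    def get(self, key):
        return self.storage[self.owner(key)].get(key)


nodes = ["node-a", "node-b", "node-c"]
replica = FullReplica(nodes)
dht = ToyDHT(nodes)
for key in ["alice", "bob", "carol", "dave"]:
    replica.put(key, key.upper())
    dht.put(key, key.upper())
```

In the replicated case any single node can answer any query on its own, at the cost of storing everything. In the DHT-style case each key lives on exactly one node, so storage is shared out but a lookup must first work out which node to ask.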

Then there's: Google Finds: Centralized Control, Distributed Data Architectures Work Better Than Fully Decentralized Architectures http://highscalability.com/blog/2014/4/7/google-finds-centralized-control-distributed-data-architectu.html

What about Bitcoin? It's decentralized and seems to be winning everything.

Two decentralizing forces you failed to mention: the value of user data and user sensitivity to privacy.

As the amount and quality of a person's aggregate user data across services grow, along with the awareness that this data is valuable, people will be incentivized to guard or sell their data. Theoretically you could trust a "centralized" 3rd party to guard your data or sell it for you, but if the 3rd party is just getting a simple flat rate for that service and your data were really portable, then it seems likely that many 3rd parties of that kind would pop up, resulting in a decentralized system.

The other thing is privacy. I'd like to wake up one day and have an AI in my phone that I trust completely with 100% of my data, so that it might return the most value to me in my daily life. I'd like it to know my location at all times, my biometric data, my emails, calendar, text messages, voice memo recorded social interactions, my social network. If all the AI happens on-device and my data is extremely secure and never leaves my phone, then why shouldn't I? The more data my AI has the better its insights and suggestions are, and the better my life goes. On a personal level, I go from decentralization (some data in google's servers, some data in amazon's, some in netflix's) to centralization (all of my personal data in one secure place). From a corporate level, user data is going from being centralized to being decentralized.

So I think increasing centralization is a constant, just like increasing entropy is a constant. But as users realize the value of their data I think data will begin to centralize around users, and compute value-add will continue to centralize in the corporate cloud.

Twobtc, I refer you to David Gerard, author of Attack of the 50 Foot Blockchain: Bitcoin, Blockchain, Ethereum & Smart Contracts, who has a different take on how much bitcoin is "winning".

https://davidgerard.co.uk/blockchain/

Teddy,

Privacy is something I stuff in option #1.

The data market is something I've given a lot of thought to and would love to see. The problem is we've not seen users care about the value of their data as an intrinsic property of their identity. Users routinely trade their data for services, which are centralized and monetized. All that data held by the different aggregators won't hit an open market under a shared licensing model because of rent seeking. So how could a decentralized market ever evolve? When and why would users ever realize the value of their data? Convenience rules all. It would take a legal remedy—that a user's data is a person's private property—to come about.

It's not about scalability, it's about trust.

In a decentralized cryptographic system, real trust can emerge bottom-up via science and engineering...

In centralized systems, trust is a word without substance fabricated by marketing campaigns...

Scalability will be sorted out at some point, but the fact that people and organizations in power always break promises and turn evil (even when you don't know about it) is a historical pattern that has repeated since the beginning of time...

Good post, Todd.

>We do have new decentralized services: Bitcoin (2009), Minecraft (2009), Ethereum (2014), IPFS (2015), Mastodon (2016), and PeerTube (2018). We're still waiting on Pied Piper to deliver the decentralized internet.

You could add the promising Dat Project and Beaker Browser, which uses the dat protocol to create and distribute webpages.

The Hackerfleet's central premise - building a free, opensource service infrastructure for nautical people - led us to build a federated/decentralized system.

We immediately recognized Freifunk's potential to deliver the network parts for that endeavour to begin - yes, on ships! And yes, we did the math behind that.

On the software side though, we quickly realized that most of the opensource application frameworks are not really suitable to build such a solution.

That is why HFOS (The Hackerfleet Operating System) was conceived:

It allows modular development of the necessary aspects and is geared towards decentralized administration and operation, as well as data-safety, -privacy and -exchange (..the latter mostly for science and opendata of course).

The currently provided modules are somewhat focused on maritime tasks (e.g. chart navigation, sensor dashboard, anchorwatcher, etc), but some newer modules - like the project planning package - are of a quite different nature, albeit very usable by sailors, too.

This year, the core framework (dubbed 'Isomer') will be isolated from the provided module packages, to allow hackers & developers around the world to construct their own feature sets.

I'd be very happy to get constructive feedback from everyone and would be thrilled, if fellow Hackers would like to join the project. To do that, send me an email or play around with our repository. The project is also represented on hackaday.io

Also: thank you Todd, for the very insightful article!

Lots of metaphors in this article, but I see no definition of "winning". I suspect his metric is performance. There are other metrics that are just as meaningful. This greybeard has seen the switch from peer2peer to client-server, and back, several times. It will continue. "Fashion" is part of that, a primary motivator for many PHBs.

Sarcastic Fringehead,

I won't define love, beauty, intelligence, the good life, or justice either. As I'm sure you know "winning" means different things to different people and we'd just spend more time trying to define each word in any definition of winning...which is why we turn to metaphors.

The pendulum will eventually swing, as even here on Earth there are applications such as autonomous machines that can't handle the latencies associated with centralized systems. Indeed if I had one overall criticism of your blog entry it is that it is a web-focused and somewhat consumer-oriented view of computing. If I'm trying to keep the balance of chemicals in a manufacturing process within a narrow range, with the consequences of failure being an explosion, I'm not relying on a Cloud datacenter. I very well may, and likely will, stream the resulting data (or some summarization) to the Cloud for further analysis. But not for actual process control. So in your little taxonomy of decentralization, #6 it is :-)

One other point, you are showing your relative youth. Centralization, aka classic Mainframe computing, came first and remained dominant decades past the invention of the Arpanet. Batch, terminal-based OLTP, and Timesharing are all essentially centralized computing. Limited decentralization via minicomputers was certainly a factor in the 70s, and the 80s brought networks of minicomputers to the fore. But it isn't until the 90s that the swing to decentralization goes full steam with client/server and then the Internet.

Thanks exmsde, I'll take "relative youth" where I can get it. :-)

> I'm not relying on a Cloud datacenter.

Perhaps we're talking about a different thing. Centralization doesn't only refer to where computation happens, it also refers to a boundary, a domain of control.

Facebook, Netflix, Google are all distributed on a world-wide basis, but they are still centralized because control is centralized. You go to facebook.com, netflix.com, google.com, you don't enter the URL for the independent shard you want to access.

This control is shifting to the edge as well. As I wrote in Stuff The Internet Says On Scalability For July 27th, 2018:

With cloud based edge computing we've entered a kind of weird mushy mixed centralized/decentralized architecture phase. Amazon lets you put EC2 instances at the edge. Microsoft has Azure IoT Edge. Google has Cloud IoT Edge and GKE On-Prem and Edge TPU. The general idea is you pay cloud providers to put their machines on your premises and let them manage what they can. You aren't paying other people to manage your own equipment, the equipment isn't even yours. Outsourcing with a twist. Since the prefix de- means "away from" and centr- means "middle", maybe postcentralization, as in after the middle, would be a good term for it? There are more things in heaven and earth, Developer, than are dreamt of in your cloud.

This post is interesting but fails to recognize that current software development is largely decentralized: the vast majority of software development is based on open source software principles which are decentralized. Linux is the best known example of a "decentralized" system that beat the more centralized proprietary development systems such as UNIX or Windows. According to a report in 2017, new software projects are made up of 60 to 80% open source software. Even the "centralized" winners discussed in the blog post such as Google and Facebook are highly dependent on the use of open source software. Yet open source software is only 20 years old so I think that you should not despair. As a lawyer who has worked on software licensing for over 30 years in Silicon Valley (and the pro bono outside general counsel for the Open Source Initiative), I have been fortunate to watch the move to decentralized software development from the front row. However, the success of open source software was based in part on its adoption by business. This adoption brought a more "real world" approach to software development and use for open source software developers. I think (and hope) that blockchain technology will undergo a similar evolution.