Stuff The Internet Says On Scalability For November 1st, 2019

Friday, November 1, 2019 at 9:06AM

Wake up! It's HighScalability time:

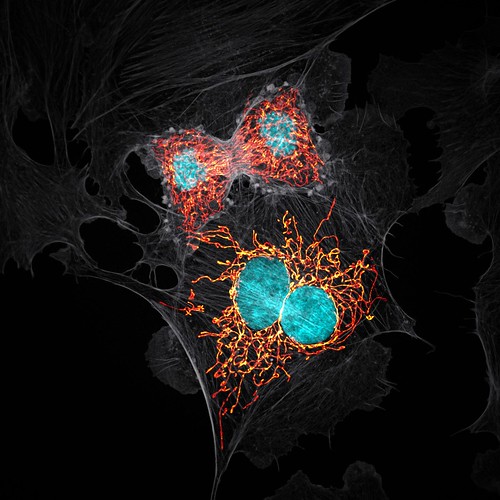

Butterfly? Nope, cells in telophase stage of mitosis (Jason M. Kirk)

Do you like this sort of Stuff? I'd greatly appreciate your support on Patreon. I also wrote Explain the Cloud Like I'm 10 for all who need to understand the cloud. On Amazon it has 61 mostly 5 star reviews (136 on Goodreads). Please recommend it. You'll be a cloud hero.

Number Stuff:

- 3B: Apple Pay transactions in 4Q19, exceeding PayPal and growing four times as fast.

- $10B: Azure wins the JEDI cloud contract. Or has the laser sword fight just begun?

- 20%: organizations that have crossed the chasm to become elite DevOps performers. Ascension is the next level. Elite performers are more likely to use the cloud; smaller companies perform better; retail performs better; low performers use more proprietary software; elites focus on structural solutions that build community, which fall into one of these four patterns: Community Builders, University, Emergent, and Experimenters.

- 120 billion: lower bound on the number of years until the universe dies, unless the True Vacuum kills us sooner.

- $250,000: Virgin Galactic's per person cost for touring the final frontier. Hopefully it's a PORT side seat.

- 13: Managed Service Providers hit by ransomware attacks.

- 2.45B: Facebook users, up 2%.

- 1: KeyDB node (1,000,000 ops/sec) equals the throughput of a 7 node Redis cluster.

- 20,000: global Riot game servers.

- $1B: AMD's datacenter business run rate.

- $2B: in tips given to Uber drivers in the last two years. Drivers were tipped in just 16 percent of rides, and just 1 percent of riders tipped on every trip. Sixty percent of riders never tipped at all.

- 20: layers in Google's production microservices.

- 5: years it takes the number of programmers to double. Who trains them?

- 70%: drop in collaboration in open offices.

- 23%: Google's profit decline. Headcount up to 114K from 94K a year ago. CapEx up 26%. Paid clicks are up 18% vs 32% last year.

Quotable Stuff:

- Tim Wagner: For its entire history, distributed computing research modeled capacity as fixed but time as unlimited. With serverless time is limited, but capacity is effectively infinite. This only changes everything.

- @patrickc: We've been building an ML engine to automatically optimize the bitfields of card network requests. (Stripe-wide N is now large enough to yield statistical significance even in unusual cases.) It will soon have generated an incremental $1 billion of revenue for @stripe businesses.

- Geoff Huston: The dark traffic rate has escalated in recent times, and the traffic levels observed in 2019 appear to be some four times greater than what was observed in 2016. If the Internet was ever a benign place, it is certainly not so today. Any and every device that is exposed to the Internet will be continuously and comprehensively scanned. Any known vulnerability in an exposed host will be inevitably exposed through this concentrated scanning.

- Orbital Index: Interestingly, since light travels faster in a vacuum than in glass, satellite links could provide better latency than terrestrial fiber for distances greater than ~3,000 km.

- @MagisterIR: Most people don't realize that Apple currently operates an investment management division in Nevada that is almost half the size of Goldman Sachs and effectively the world's largest hedge fund:

- tpetry: The funny part is many people here reported being banned because of „automated clicks“. When we used adsense for advertising purpose we paid for many clicks which lasted less than 50 milliseconds. So google is letting advertiser pay for fake clicks, but website owners will not get this money, google keeps it by blocking their accounts.

- @stuartsierra: "Do you use containers?" "Yes, JAR files."

- @Ana_M_Medina: @Black_Isis #LISA19: - start with events, not the reasons, create a timeline of raw data (chat logs, config changes, deployments)

- Matthew Garrett~ I don’t think I’m very good with computers, I’m just bad at knowing when to give up

- @zehicle: wow... Facebook SREs explaining the cascade of how a 2% fault domain failure can ripple into a full regional failure. It's about 1) the connection graph impacts and 2) loss of resilience changing the risk profile. deep thinking w/ scale. #LISA19

- @unixgeekem: Unfortunately "Cloudiness" is inversely proportional with being able to predict cost. Finance loves to predict things, which means that Engineering needs to be able to talk with them about it. #LISA19

- @unixgeekem: Storing a petabyte of data for a month can cost $112,000 for EBS, $23,000 in S3, down to $1400 in Glacier Deep Archive. But they're not all equally accessible. #LISA19

- @unixgeekem: And then there are Managed NAT Gateways: "they're amazing except where they're freaking awful"... 4.5 cents per GB passing through it, and that adds up. #LISA19

- @GossiTheDog: I predict a major MSP will ransomware their clients en mass, it’s pretty much inevitable at this point as by design they have network access, documentation, passwords etc. You’ll also see cloud service MSPs continue to shit the bed. There was an event a few years ago which frankly got covered up, where APT10 hacked into TCS, NTT etc and gained access to their client’s networks to mass exfil data. They did this using *Office macros*. Sooner or later crimeware will get in.

- Rui Costa: The tail shows the truth: wireless latency sucks, globally. TLDR: Recent results by Uber show that, for mobile apps, latency is high everywhere across the globe, killing user experience even in regions like the US and Europe. If you’re thinking you don’t have this problem, you’re wrong. In this post, I’ll show why you probably haven’t yet noticed that you have latency issues in your mobile app, why what you’re doing is not enough, and what can be done to overcome latency.

- @sfiscience: Why do controversies so often result in 50/50 stalemates? "If an agent is repelled instead of convinced in at least 1/4 interactions, the frequencies of both opinions equalize."

- Billy Tallis: Intel had to make substantial changes to their CPU's memory controller to make their 3D XPoint DIMMs possible. If Micron had collaborated with AMD to add support for something similar to Rome's memory controller, we would have heard about it earlier. I think it'll be another product cycle or two before Micron's 3D XPoint stuff can move beyond NVMe.

- Timothy Prickett Morgan: After two and a half quarters of tightening the purse strings, the world’s largest consumers of infrastructure – the eight major hyperscalers and cloud builders – plus their peers in the adjacent communications service provider space all started spending money on servers and storage again, and Intel can breathe a sigh of relief as it works to get its 10 nanometer manufacturing on track for the delivery of “Ice Lake” Xeon SP processors sometime in the second half of next year.

- @BenBajarin: Tim Cook announced a new feature to Apple Card to buy new iPhones over 12 mo period with 0 interest. So if you wanted to know how Apple would eventually offer all hardware as a payment plan there you go.

- Allie Conti: But Airbnb, which plans to go public next year, seemed to have little interest in rooting out the rot from within its own platform. When I didn’t hear back from the company after a few days, and saw that the suspicious accounts were still active, I took it upon myself to figure out who exactly had ruined my vacation.

- Ben Sigelman: If we escape from the idea of tracing as “a third pillar,” it can solve this problem, but not by sprinkling individual traces on top of metrics and logging products. Again, tracing must form the backbone of unified observability: only the context found in trace aggregates can address the sprawling, many-layered complexity that deep systems introduce.

- Sundar Pichai: The reason I’m excited about a milestone like [achieving quantum supremacy] is that, while things take a long time, it’s these milestones that drive progress in the field. When Deep Blue beat Garry Kasparov, it was 1997. Fast-forward to when AlphaGo beat [Lee Sedol in 2016]—you can look at it and say, “Wow, that’s a lot of time.” But each milestone rewards the people who are working on it and attracts a whole new generation to the field. That’s how humanity makes progress.

- Alison Gopnik: I had a conversation with a young man at Google at one point who was very keen on the singularity, and I said, "One of the ways that we achieve immortality is by having close relationships with other people—by getting married, by having children." He said that was too much trouble, even having a girlfriend. He’d much rather upload himself into the cloud than actually have a girlfriend. That was a much easier process.

- Dr. Ian Cutress: Within a few weeks, Intel is set to launch its most daring consumer desktop processor yet: the Core i9-9900KS, which offers eight cores all running at 5.0 GHz.

- @koehrsen_will: Develop new algorithms as a PhD student: $30k/year. Use pre-built sklearn models as a data scientist: $120k/year. Build regression models in excel as a hedge fund analyst: $200k/year. Make pie charts as a CEO: $14 million/year

- @fayecloudguru: ...The ACG website handles nearly 8 million Lambda invocations per day costing < $10,000 USD a month! Only possible with #serverless👍

- Evan Jones: Auto scaling changes performance bugs from an outage into a cost problem

- Aiko Hibino: Emerging topics tend to generate emerging topics. Emerging keywords that achieved great success in the long run first appeared with other preceding emerging keywords.

- David Yaffe-Bellany: The company has tested algorithms at its drive-throughs that capture license-plate numbers, so the restaurant can list recommended purchases personalized to a customer's previous orders, as long as the person agrees to allow the fast-food chain to store that data. McDonald's also recently tested voice recognition at certain outlets, with the goal of deploying a faster order-taking system. Regarding the use of new technologies, the company’s CIO, Daniel Henry, said, “You just grow to expect that in other parts of your life ... We don’t think food should be any different than what you buy on Amazon."

- Seth Lloyd: My prediction would be that there’s not going to be a singularity. But we are going to have devices that are more and more intelligent. We’ll gradually incorporate them in our lives. We already are. And we will learn about ways to help each other. I suspect that this is going to be pretty good. It’s already the case that when new information processing technologies are developed, you can start using your mind for different things. When writing was developed (the original digital technology), that put Homer and other people who memorized gigantic long poems out of a job. When printing was developed and texts were widely available, people complained that the skills they had for memorizing large amounts of things and poetry (which is still a wonderful thing to do) deteriorated.

- Paul Johnston: Serverless is first, foremost and always will be about the business. It’s a relatively simple thing to understand. It’s also why serverless isn’t about technology choices. Because while I have never come across this, it is possible to argue that a “serverless startup” may have containers and servers within it. Why? Because running servers or instances may be the most efficient way of achieving the business value you are trying to achieve. Which is why a “serverless architecture” based upon the criteria that many put onto it — FaaS or “on demand” — is incorrect.

- James Shine: Our prediction was that performing the more difficult versions of the task would lead to a reconfiguration of the low-dimensional manifold. To return to the highway analogy, a tricky task might pull some brain activity off the highway and onto the back streets to help get around the congestion. Our results confirmed our predictions. More difficult trials showed different patterns of brain activation to easy ones, as if the brain’s traffic was being rerouted along different roads. The trickier the task, the more the patterns changed. What’s more, we also found a link between these changed brain activation patterns and the increased likelihood of making a mistake on the harder version of the Latin Squares test.

- @shaunnorris: Another emerging theme from the first morning of #DOES19 - Platform Engineering teams. The industry continues to move away from legacy ITIL-bound teams to teams running infra platforms as a product. @optum and @adidas talks this am both good examples of this.

- @Carnage4Life: This is an amazing excerpt from Grubhub's letter to shareholders which basically points out that food delivery apps are a commodity business and scale doesn't bring down the fundamental cost of having to pay a driver enough to make delivering food worth the effort.

- Ethan Bernstein: But let’s see, how do I block out noise? I put on headphones. How do I block out vision? I focus my eyesight somewhere. How do I avoid interruption? I go somewhere else. I work from home. I work from Starbucks. There are ways that we can achieve the same function that those previous walls provided. And we just do so unfortunately at the cost of what the company’s trying to make us do, collaborate.

Useful Stuff:

- Hybrids (gene/component flow).

- With genetic sequencing we've come to learn hybridization—within species and between species—is far more common than once thought. And far from being a weakness, hybridization is now understood to be a strength by driving adaptation. Since genes being exchanged have already been tested by selection, gene sharing often makes for stronger organisms, but sometimes organisms do become less fit.

- At one time humans mated with partners 10km apart. In 1970 the range grew to 100km. Now it's probably 200 or 300km. What you find is that individuals whose parents came from farther apart are taller and slightly more intelligent than those from more inbred populations.

- If you think of components from a repository like npm (a hybrid zone where genes can be exchanged) as a gene bank and sharing components as gene exchange, then building hybrid apps is generally a good strategy for producing fitter systems. Though like genes, components can interact in complicated ways to reduce fitness.

- There's a truism that holds working complex systems evolve from working smaller systems. Nature never makes new organisms from scratch in the same way programmers never make de novo apps.

- It seems over time apps have grown narrower genetically. The potato famine occurred because potatoes are not grown from seed, they're grown from bits of a potato. A "fungus" wiped out all the potatoes because all the potatoes were essentially the same potato. Starvation prompted the revolutions in 1848. Now, every year, scientists check what the fungus is likely to be and they change the potatoes to bring in new resistances.

- Perhaps we need to ensure there's less inbreeding and more diversity in the components used. Components need to have sex. We need to bring in new resistances. Overall app fitness could be raised by sequencing app genes (analyzing an app's component makeup) and recommending alternative components to use. Or even suggesting new components be built to increase diversity.

- You can look at something like GitHub as an ecosystem whose fitness landscape can be managed at a cross-repository level. Or is that too much like code eugenics?

- What's this quantum computer stuff all about? Dr. Scott Aaronson explains it better than anyone. Why Google’s Quantum Supremacy Milestone Matters:

- Quantum computing is different. It’s the first computing paradigm since Turing that’s expected to change the fundamental scaling behavior of algorithms, making certain tasks feasible that had previously been exponentially hard. Of these, the most famous examples are simulating quantum physics and chemistry, and breaking much of the encryption that currently secures the internet. In my view, the Google demonstration was a critical milestone on the way to this vision.

- The goal, with Google’s quantum supremacy experiment, was to perform a contrived calculation involving 53 qubits that computer scientists could be as confident as possible really would take something like 9 quadrillion steps to simulate with a conventional computer.

- In everyday life, the probability of an event can range only from 0 percent to 100 percent (there’s a reason you never hear about a negative 30 percent chance of rain). But the building blocks of the world, like electrons and photons, obey different, alien rules of probability, involving numbers — the amplitudes — that can be positive, negative, or even complex (involving the square root of -1). Furthermore, if an event — say, a photon hitting a certain spot on a screen — could happen one way with positive amplitude and another way with negative amplitude, the two possibilities can cancel, so that the total amplitude is zero and the event never happens at all. This is “quantum interference,” and is behind everything else you’ve ever heard about the weirdness of the quantum world. Now, a qubit is just a bit that has some amplitude for being 0 and some other amplitude for being 1. If you look at the qubit, you force it to decide, randomly, whether to “collapse” to 0 or 1. But if you don’t look, the two amplitudes can undergo interference — producing effects that depend on both amplitudes, and that you can’t explain by the qubit’s having been 0 or by its having been 1. Crucially, if you have, say, a thousand qubits, and they can interact (to form so-called “entangled” states), the rules of quantum mechanics are unequivocal that you need an amplitude for every possible configuration of all thousand bits. That’s 2 to the 1,000 amplitudes, much more than the number of atoms in the observable universe. If you have a mere 53 qubits, as in Google’s Sycamore chip, that’s still 2 to the 53 amplitudes, or about 9 quadrillion.
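The amplitude counts quoted above are easy to check. Here is a minimal Python sketch. The function names and the 16 bytes per amplitude (two 64-bit floats) are assumptions for illustration, not details of Google's experiment. The second half shows interference on a single qubit using a Hadamard gate, a standard operation that the quote itself does not name.

```python
import math

def amplitude_count(qubits: int) -> int:
    """Number of amplitudes needed to describe `qubits` entangled qubits."""
    return 2 ** qubits

def state_vector_bytes(qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to hold every amplitude; 16 bytes each is an assumption."""
    return amplitude_count(qubits) * bytes_per_amplitude

def hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply a Hadamard gate to (amplitude of 0, amplitude of 1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

sycamore = amplitude_count(53)   # 9007199254740992, about 9 quadrillion
once = hadamard((1.0, 0.0))      # equal amplitudes for 0 and 1
twice = hadamard(once)           # the two paths leading to 1 cancel
```

Squaring an amplitude gives a probability: after one Hadamard each outcome has probability of about 0.5, and after two the amplitude for reading 1 is exactly zero, which is the cancellation the quote calls interference.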

- We have a new term. Functionless. It's a thing. Don’t wait for Functionless. Write less Functions instead. The basic idea is instead of writing functions to handle events you can just treat the cloud like a giant patch panel, connecting event sources to event sinks. Presumably you still have to tell the system what you want done, so you have to replace code with a lot of configuration and aspect-oriented-programming style insertions of lines of code into other frameworks. Is this really simpler? How does anyone grok what a system is anymore when it's plastered in configuration files and 3rd party components?

- What does it take to run a world-wide eSports event? An incredible amount. It's an IT challenge like you can't imagine. And it's all explained in a non-technical way that should be accessible to most any audience. One wonders when they'll have permanent stadiums like other sports? Engineering Esports: The Tech That Powers Worlds:

- The biggest technical challenges we face for esports events fall into three main categories - networking, hardware, and broadcasting, and these get more complex when you consider the remote and local implementations of each.

- To support a stable connection that won’t drop out right before a sweet Baron steal, our teams conduct fiber surveys to discover nearby fiber optic backhauls a full year before we even set foot at a venue. Our shows have very specific network bandwidth needs that can only be satisfied by select providers. We generally look for at least two high bandwidth circuits per venue with diverse paths to ensure redundancy. Because our shows happen all over the world, we work with local partners to make sure we’re finding the right providers that satisfy our requirements. And with some shows spread across 4 or more stages, we regularly find ourselves coordinating 8 or more circuits with several providers.

- We’re not just looking for internet circuits. We’re looking for very specific, high capacity, reliable point-to-point circuits that can get us onto Riot’s private internet backbone: Riot Direct.

- Essentially, we have to stand up a network capable of supporting a medium-sized business a few days before the show starts. Once the show ends, we have to be able to efficiently tear it down right after the closing ceremonies in just a few hours.

- It all starts with fiber optic cables running throughout the venue to every conceivable access location. These fibers run from our central network hub buried deep within the venue. From this location, we deploy dozens of fiber optic cables and copper cables. This allows us to build a fully functioning, specialized, high capacity infrastructure at any venue in the world.

- A typical League of Legends shard - the ones that you connect to when you fire up a game of League at home - contains hundreds of subsystems, microservices, and features designed to cope with the massive scale of our global player base. When a player logs in, most of these subsystems and microservices exist within the “platform” portion of our game (where you authenticate, enter the landing hub of the client, choose your champion and skin, shop, and manage your account). However, once a player starts a game and completes champion select, they connect to a game server. Game servers are located all across the world in data centers. At last count there were more than 20,000 game servers globally.

- Professional players at our major events play on a hybrid type of shard connected to our offline game servers. This ensures no risk of network lag or packet loss during competitive play.

- Game servers, for example, are highly protected and therefore exist in a guarded tier with as little inbound access as possible allowed. For example only a small, select group of users can access these game servers.

- Our offline game servers run a very highly modified operating system. Due to the nature of our game, deterministic performance of our hardware is vital. To this end, we disable any CPU clock boosting functionality as it can lead to non-deterministic performance during processor throttling states. Additionally, we disable any chip-level power-saving or hyper-threading. Until 2018, we ran those offline game servers virtually, but due to a recent change in League’s backend protocols, we made the move to bare metal for our portable game servers.

- A typical practice room at Worlds contains seven player stations, each consisting of an Alienware Aurora R8, a 240-Hz gaming monitor, and a Secret Lab gaming chair. We drop a shared network switch to connect all the machines and allow teams to either scrim on the offline game servers or online on a regional shard.

- 50+ cameras, hundreds of audio sources, miles of fiber optic cabling, and not a truck in sight. The footprint has decreased from having several production trucks onsite with all the operators and creative personnel, alongside several satellite trucks, to today’s iteration consisting of about 10 high density video, audio, encoding, and network racks in one onsite engineering room. This is all coordinated via our real-time, global communications infrastructure, spanning several continents and time zones, including the flagship studio in Los Angeles and partner facilities in places like Brazil, China, Korea, and Germany.

- Every millisecond saved brings Worlds action to players’ screens faster and at a higher quality. As part of our test we’ll have nine paths of JPEG-XS between our Los Angeles production facilities and each of our venues throughout Worlds. The total bandwidth dedicated to this test is an impressive 2.7 gigabits per second between LA and Worlds. This test is the first step on our path to 4K, and eventually 8K broadcasts.

- RiotEmil: We get a list of potential venues from our Events team and start conducting the surveys as soon as possible. Although it's very rare that a venue is completely "dark", there have been times where we've had to just abandon one of the choices because of it. We will always try to figure out solutions that could work, though. As an example, for one venue we were going to have to get them to dig up streets in the middle of the city in order to get fiber. Instead, we ended up using point to point wireless links to get connectivity from a nearby high rise.
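The network numbers in the Riot write-up reduce to simple arithmetic. This is a rough sketch that assumes the 2.7 Gbps test bandwidth is split evenly across the nine JPEG-XS paths, and that each stage needs its own pair of diverse circuits. The article states neither explicitly, and the function names are made up for illustration.

```python
def per_path_mbps(total_mbps: int, paths: int) -> float:
    """Bandwidth per path if the total is shared evenly (an assumption)."""
    return total_mbps / paths

def circuits_needed(stages: int, circuits_per_stage: int = 2) -> int:
    """Circuits to coordinate if every stage gets its own redundant pair."""
    return stages * circuits_per_stage

jpeg_xs_path = per_path_mbps(2700, 9)   # 2.7 Gbps over nine paths
worlds_circuits = circuits_needed(4)    # four stages, two circuits each
```

Under those assumptions each JPEG-XS path gets 300 Mbps, and a four-stage show means eight circuits, which matches the "8 or more" figure in the article.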

- Facebook with a 2019 @Scale Conference recap. You might like AI: The next big scaling frontier, 6 technical challenges in developing a distributed SQL database, Kafka @Scale: Confluent’s journey bringing event streaming to the cloud, Leveraging the type system to write secure applications.

- a16z Podcast: Free Software and Open Source Business.

- Skip on-prem, go straight to SaaS. It's hard to shift from on-prem to SaaS. Plus Wall Street likes SaaS—you get higher multiples.

- Big cloud vendors are not just ripping off open source software, they are really good at running services in the cloud, nobody else can do it at scale, it's really hard to do, that's what they are getting paid for.

- One big mistake companies are making is not aligning their salesforce compensation model. Customers should get two bills, one from the cloud vendor (hardware, storage, watts) and one for the software service. The bill customers get from the cloud vendor is paying the sales compensation of the cloud sales people. That makes the cloud vendor like you and want to partner with you. If you change your compensation model so the cloud sales people don't get paid then they won't like you and block you from accounts.

- SaaS prevents commoditization. Open source has zero intrinsic value. The value is in the support which quickly becomes commoditized. So if you want a higher valuation open source is not the way to go.

- There was an era when everything moved from hardware to software, hardware companies struggled. When your core competency is hardware it doesn't translate well to software. As you go from being a software vendor to being a cloud service provider the skill set of writing software doesn't translate that well to operating a cloud service, it's a completely different skill set. It's very hard to do both software and cloud services.

- Most open source projects are led by just one company...most open source projects are brittle, if you look closely there are 5 or 6 people doing the development...most companies that say they are contributing are just packaging the project up and selling it...contribution is at the edge around integration/extension points...open source degrades when many companies are arguing over direction, OpenStack is one example.

- Open sourcing part of your software is an amazing lead gen machine, it gets you visibility in the community so they recognize you when you walk in the door.

- Build amazing software that you can monetize with enterprise companies...SaaS software is very different than the Red Hat support model, what is open sourced or not open sourced doesn't matter for a SaaS company, it matters for on-premise, because it's in the cloud customers are renting the software, they just want it to work in the cloud and be managed, a complication is if someone takes the software and tries to run the service as a competitor...nobody asks Amazon if S3, EC2, or Redshift are open sourced, no one seems to care...renting services in the cloud is a different dynamic...the reason you want to go SaaS instead of on-premise is so you don't have to worry about open source, open source is harder to monetize.

- There are kinds of software that are compelling for the clouds to want to run, Terraform allows you to consume more cloud so the clouds like it...how aligned are you to what the cloud cares about?

- It's extremely hard to offer a cloud service, it takes many years to get good at it, you don't need to be afraid of cloud vendors if you know how to run a cloud service, but it's hard to run a cloud-based SaaS service...on-prem vendors don't have to worry so much about support, they aren't on pager duty because it's the responsibility of the private IT vendor running the software.

- You can partner with clouds. All the workloads on the planet are moving into the cloud. There's enough for everyone to eat. Figure out what cloud vendors are good at, let them add value there. Look at where the clouds are not adding value and go there, focus on that, and partner with them.

- The on-prem Red Hat open source support and services model is dead. SaaS is the future, it has taken over the whole planet. SaaS vs Non-SaaS makes a huge difference. Churn is higher if you're non-SaaS, net expansion rates are lower, everything is worse if you're not SaaS. SaaS prevents customers from moving to a cheaper service that doesn't do the actual development, but just offers lower support costs.

- Amazon, Google, and Microsoft each have $100 billion in cash sitting around. They each have printing presses that are not the cloud. Google has ads. Amazon has retail. Microsoft has Windows/Office. You can't compete. Move up the stack. They have the lower stack taken care of. Move into a vertical. They can't dominate everything.

- Nice trip report. My key takeaways from ServerlessDays Stockholm: Serverless is a mindset; Power of asynchronous event-driven architectures; The Strangler Pattern mentioned by Simon Aronsson provides a safe track of incremental application modernization. Things like AWS Lambda ALB triggers are a great way to achieve this, building “stairs” for existing workloads towards serverless.

- AWS’s data transfer pricing documentation has thus been misunderstood for a long period of time. AWS Cross-AZ Data Transfer Costs More Than AWS Says: the cheapest data transfer in all of AWS is between Virginia and Ohio...That last “$0.01/GB in each direction” is the misleading bit. Effectively, cross-AZ data transfer in AWS costs 2¢ per gigabyte and each gigabyte transferred counts as 2GB on the bill: once for sending and once for receiving.
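To see how the "each direction" wording doubles the bill, here is a small sketch of the arithmetic described above. It works in whole cents to avoid floating-point noise. The function names and the 10,000 GB example volume are illustrative assumptions; the 1 cent per GB per direction rate is the figure quoted in the article, not a current price list.

```python
def naive_cost_cents(gigabytes: int, cents_per_gb: int = 1) -> int:
    """What you might expect to pay if you read $0.01/GB as the full price."""
    return gigabytes * cents_per_gb

def cross_az_cost_cents(gigabytes: int, cents_per_gb_each_direction: int = 1) -> int:
    """Billed once on the sending side and once on the receiving side."""
    return gigabytes * cents_per_gb_each_direction * 2

expected = naive_cost_cents(10_000)     # 10,000 cents = $100
actual = cross_az_cost_cents(10_000)    # 20,000 cents = $200
```

For the same 10,000 GB moved between availability zones, the bill comes to twice the naive reading.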

- The reason for agile is to save the world. Back to Agile's basics. Great interview with Uncle Bob. Lots of agile talk as you might expect, but there are some more strategic topics of interest discussed as well.

- Let's say for argument's sake that the number of new programmers doubles every five years, who is teaching them how to program? The reason this is important is because software rules the world. Everything runs on software. Chances are there will be a huge software-related accident that will kill tens of thousands and that will invite in regulation. Regulation will ruin the software business as we know it. One option is to do as doctors have done in the US, they created their own regulations as an industry, which keeps the government at a greater distance than they otherwise would be.

- The software industry could get ahead of the threat and self-regulate before the disaster happens. But we're cats that won't be herded. Chances are we won't do anything. And when the politicians ask us how we could let this disaster happen—we won't have an answer. This is an End of History style argument for the inevitability of regulation.

- But what if we created a set of practices that would give plausible deniability? We could say any disaster was an accident rather than from willful negligence. What practices might those be? This is where Uncle Bob's Clean series of books comes in. It teaches the exponentially growing crop of young programmers what the future of programming ought to look like. Though I personally think programming will have to look a lot different in the future.

- I loved this one: where did all the old programmers go? Nowhere. They're still here, it's just the number of young programmers is always growing and the majority of programmers will always be young because of the growth curve.
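The growth-curve claim can be made concrete. If the population of programmers doubles every T years and nobody leaves the field (an assumption, since real attrition is not modeled), the share with less than N years of experience is 1 - 2^(-N/T). A small sketch with a hypothetical function name:

```python
def share_with_less_experience(years: float, doubling_years: float = 5.0) -> float:
    """Fraction of programmers who started within the last `years` years,
    assuming steady doubling every `doubling_years` and no attrition."""
    return 1 - 2 ** (-years / doubling_years)

under_five = share_with_less_experience(5)    # half of all programmers
under_ten = share_with_less_experience(10)    # three quarters
```

With a five-year doubling time, half of all programmers at any moment have under five years of experience, which is why the old programmers seem to vanish even though none of them left.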

- How does an on-prem product move to serverless? First, you need a motivating disaster to drive change. And then you do it one brick at a time. The Serverless Journey of LEGO.com:

- The old: So that time, all on-prem. And so we had Oracle ATG, an old version of Oracle ATG ecommerce platform hosted on-prem and we had an Oracle database talking to, the platform itself talking to a bunch of other services within Lego. And at some point, there was an initiative that happened to make it more API-based and even that time they first APIs around. But still, it was on-prem and a monolith, but the front-end moved on from being a JSP onto a JavaScript based, hosted on Elastic Beanstalk from AWS. That's pretty much what we had two years ago out in that time frame. So we had, you know, they did a number of issues associated with the typical monolith platform maintaining or releasing our new features and fixes and things like that.

- The bad: At the same time, we wanted to migrate from the old platform, so we were not thinking of serverless. So a typical microservice, put everything in a Docker, or, you know, containerize an instance-base — those were the different ideas floating around. Then came this Black Friday. And we had this catastrophic failure of the platform so that triggered some of the conversations internally to get to a point that we must break up things.

- The trial: Okay, so at that point, so you know, the Black Friday failure happened and that gave us the opportunity to try out something simple when we wanted to decouple small part of our system. And that's where we introduced serverless, because not knowing anything about serverless, we didn't want to take a huge risk... let's go serverless and let’s use the managed services where available so that we don't need to bring anything else and reinvent the wheel.” And then, “Let’s use AWS Cloud, because lots of people use AWS Cloud and all the, you know, the services and features that we looked at, they’re all there and all that. You know, so there was a great community around it, especially and then there are plenty of meet-ups, and the talks, and the user groups are awesome.

- That was an important position being made which paid off really well for us because we pulled the AWS skills and serverless skills in one team. We started out with two or three engineers with some of the knowledge, then slowly grew the team or the squad.

- Now initially we started out with the infra team, then soon that became a bottleneck for us because the engineering team was able to come up with the service implementation faster, whereas it relied on an engineer to set up all the, you know, scripting and everything else for the department. So we took some time off and we discussed and said, “Okay, let's kind of merge these responsibilities.”

- One additional thing I do is that when I finish the solution detailing, it is like a Confluence space that I give to the engineering team or engineer who will be working on it and say, “Now you own it. If you make any changes, you don't need to talk to me unless you change the entire architecture.”

- We tried, we thought of using step functions and we thought of splitting into different Lambda functions, but those approaches didn't give us the fast response that we were looking for.

- we need to be open, so there is no right or wrong way. You choose the approach required for that particular situation

- So that means between the cloud and serverless, we get this sort of opportunity to practice that thing. So that means so we can come together with the quick solution implementation. Then we can kind of run it through. You don't need to wait to get to production to do that. Because all environments are the same, you have your DEV, or TEST, or PROD. They are just AWS environments, so you can basically try it a number of times in a way off in sports, say, for example, in rehearsal or you know, that sort of thing. So you practice that again and again and make sure that that particular solution is now performing and solid production ready. All you need is just take it and deploy it to production. So that means, so I always say that we need to have the vision of the entire architecture, but we need to focus closely on a particular piece at a time.

- Because obviously the services these days communicate with events or messages, and if API calls, so that means we don't need to tightly integrate many things together. So we have that loosely coupled or a decoupled upwards, so that we can focus and make one bit perfect before we, you know, look at the other thing. I'm slowly then bring together one by one into their architectural walls.

- we are having the EventBridge as a core component. Event buses, a core component, at start up, because, as you know, that the filtering capabilities are far, far better

- Then I realized that there are a number of ways we can reduce the use of Lambda functions. So API Gateway being one, and then obviously, we talked about the approach of fat Lambda versus slim Lambdas. And there are a number of other areas and now with EventBridge where we can avoid having custom Lambda functions written.

- I think things like API Gateway service integrations, they're not a silver bullet. I mean, obviously, there are a lot of things you still need Lambda functions for. If you have business logic, then yes, Lambda functions are needed. But sometimes you're just moving data around or doing some transformations even, there are ways to do that without even touching Lambda. And it reduces a ton of complexity, right? It makes your architecture a little bit simpler.
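
The interview's point about letting the event bus filter events, instead of writing a Lambda function just to route them, can be illustrated with a toy matcher. This is a simplified sketch loosely modeled on EventBridge-style patterns, where each pattern field lists its accepted values. It is not the EventBridge implementation, and the event sources and fields are hypothetical.

```python
# Toy content-based event filter, loosely modeled on EventBridge-style patterns.
# NOT the real EventBridge matching engine; names and fields are hypothetical.


def matches(pattern: dict, event: dict) -> bool:
    """Return True when every field named in the pattern matches the event."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf pattern: a list of accepted values.
            return False
    return True


pattern = {"source": ["shop.checkout"], "detail": {"status": ["PAID", "REFUNDED"]}}

events = [
    {"source": "shop.checkout", "detail": {"status": "PAID", "orderId": "1"}},
    {"source": "shop.checkout", "detail": {"status": "PENDING", "orderId": "2"}},
    {"source": "shop.catalog", "detail": {"status": "PAID", "orderId": "3"}},
]

delivered = [e["detail"]["orderId"] for e in events if matches(pattern, e)]
print(delivered)
```

When the bus applies a declarative rule like this, the downstream target only sees the events it cares about, and there is one less custom function to write, deploy, and monitor.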

- What’s needed to enable a Serverless Supercomputer? #1 Distributed networking; #2 Fine-grained, low-latency choreography; #3 High-speed key-value stores; #4 Immutable inputs and outputs associated with function invocations; #5 Ability to customize code and data at scale; #6 Execution dataflows; #7 General purpose and domain-specific algorithms customized to the “size and shape” of Serverless functions. Also, Approaching Distributed Systems.

- I know it will never happen, but some day I'd like to be as smart and articulate as Cory Doctorow. Cory Doctorow on Technology, Monopoly, and the Future of the Internet.

- And that they’ll say to these firms, “Since we can’t imagine any way to make you smaller, and therefore to make your bad decisions less consequential, we will instead insist that you take measures that would traditionally be in the domain of the state, like policing bad speech and bad actions.” And those measures will be so expensive that they will preclude any new entrants to the market. So whatever anticompetitive environment we have now will become permanent. And I call it the constitutional monarchy. It’s where, instead of hoping that we could have a technological democracy, where you have small holders who individually pitch their little corner of the web, and maybe federate with one another to build bigger systems, but that are ultimately powers devolved to the periphery, instead what we say is that the current winners of the technological lottery actually rule with the divine right of kings, and they will be our rulers forever.

- But the pre-Reagan era had a pretty widespread prohibition on merging with major competitors, acquiring potential future competitors as they were getting started, or cornering vertical markets. And if you look at Apple, Google, Facebook, Microsoft, it’s all they’ve ever done, it’s all… [chuckle] Yahoo’s the poster child for this. And Yahoo’s the poster child for what the terminal condition of it is, or one of the terminal conditions, which is that you can raise a ton of capital in the markets, then you can buy up and destroy every promising tech startup for 25 years, cash your investors out several times over, and still end up with nothing to show for it.

- You can imagine this just going on forever, walled gardens within walled gardens, where you escape one and end… Only to end up in the next one over.

- So I would like for there to be a stable set of what for want of better terms we might call constitutional principles for federation. So if you think about US federalism as maybe an example...And then we have a set of governing principles that dictate with a minimum set of personal freedoms those regulators have to give to the people who are under their… Within their remit. And first among them is the freedom to go somewhere else. And if you could imagine that we would have a set of rules about distributing malicious software, denial of service attacks, certain kinds of incitements to violence, and discriminatory conduct related to protected categories of identity, including race and gender and so on, and that people who agree to adhere to those federate with other people who agree to adhere to those.

- And she is the target of harassment by a small group of really terrible men on Twitter who have a method for gaming Twitter’s anti-harassment policies. And what they do is they send you just revolting, threatening direct messages, which they delete as soon as you read them, because Twitter won’t accept screenshots ’cause they’re so easy to doctor. And Twitter, to its credit, when you actually delete something, Twitter can’t readily access it; it’s pretty much deleted.

- Now imagine that Twitter could not avail itself of any legal tools to prevent a third party from making an interoperable Twitter service, a rival to Twitter, like a Mastodon instance that you could use to both read and write Twitter. And my friend and her dozen friends who are targeted by these 100 men could make a Twitter-alike that they could use to be part of the public discourse that takes place on Twitter but that would have a rule that all of these shitty men were blocked, and that would allow you to recover DMs for the purposes of dealing with this kind of harassment, and so on. You could now have a broad set of… Broad latitude within a decentralized, local means of communicating with everybody else that would allow people who were targeted for harassment to deal with this one-in-a-million use case, ’cause remember Facebook’s got 2.3 billion users. That means they’ve got 2300 one-in-a-million use cases every day.

- So I think that Larry Lessig’s framework for change really works here, that the four drivers of change are code, what’s technologically possible, law, what’s lawfully permissible, norms, what’s socially acceptable, and markets, what’s profitable. And clearly, competing with Facebook could be profitable, that under normal circumstances, markets would actually go a long way to correcting the worst excesses of Facebook, but there are legal impediments to those corrections

- When truth seeking becomes an auction, you are cast into an epistemological void where literally you could die because you don’t know what’s true and what isn’t, pharma being a really good example.

- Now, cryptography can help you defend yourself against a legitimate state, to organize to make that law a reality, but not forever, right? And so really, there is no substitute for the legal code that sits alongside the technological code.

- Are you having a hard time convincing your org to adopt DevOps? Then listen to this podcast: State of Devops 2019. You'll find all the arguments you need, backed by data. That data is in the form of The 2019 Accelerate State of DevOps: Elite performance, productivity, and scaling. You want to be 'lite, don't you?

- No matter what people say, we still do really want transactions. eBay with GRIT: a Protocol for Distributed Transactions across Microservices: GRIT is able to achieve consistent high throughput and serializable distributed transactions for applications invoking microservices with minimum coordination. GRIT fits well for transactions with few conflicts and provides a critical capability for applications that otherwise need complex mechanisms to achieve consistent transactions across microservices with multiple underlying databases.

- Six cars aced new pedestrian detection tests. Were these tests fuzzed? Are they regression tested before every software release? Continuous verification is required for any car company to be trusted.

- A review of uDepot - keeping up with fast storage: The implementation raises two interesting questions. What is the best way to do fast IO? What is the best way to implement a thread per core server? For fast IO uDepot uses SPDK or Linux AIO...In figures 7 and 8 the paper has results that show a dramatic improvement from using async IO with thread/core compared to sync IO with many threads. For thread per core uDepot uses TRT -- Task Run Time. This provides coroutines for systems programming. TRT uses cooperative multitasking so it must know when to reschedule tasks. IO and synchronization is done via TRT interfaces to help in that regard. Under the covers it can use async IO and switch tasks while the IO or sync call is blocked. One benefit from coroutines is reducing the number of context switches.
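
To make the cooperative multitasking idea concrete, here is a minimal sketch that uses Python's asyncio as a stand-in. It is not TRT or uDepot code. It only shows tasks on a single thread handing control back to the scheduler at explicit yield points, where a real system would issue async IO; the worker names are hypothetical.

```python
# Cooperative multitasking on one thread, using asyncio as a stand-in for a
# coroutine runtime. This is NOT TRT; task names and step counts are made up.
import asyncio


async def worker(name: str, steps: int, log: list) -> None:
    for i in range(steps):
        log.append(f"{name}{i}")
        # Explicit yield point: a real task would await an IO call here,
        # letting the scheduler run another task without an OS context switch.
        await asyncio.sleep(0)


async def main() -> list:
    log: list = []
    await asyncio.gather(worker("a", 3, log), worker("b", 3, log))
    return log


log = asyncio.run(main())
print(log)
```

The two workers interleave only at the `await`, which is why a cooperative runtime has to route IO and synchronization through its own interfaces: those calls are the only places it can reschedule.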

- Saving money by automatically shutting down RDS instances using AWS Lambda and AWS SAM: While production databases need to be up and running 24/7, this usually isn’t the case for databases in a development or test environment. By shutting them down outside business hours, our clients save up to 64%. E.g. for only one db.m5.xlarge RDS instance in a single availability zone this already means a saving of $177/month or $2,125/year.
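
A savings figure in that range is what simple schedule arithmetic would predict. A minimal sketch, assuming the instance runs 12 hours a day, Monday to Friday; that schedule is a guess for illustration, since the article excerpt doesn't state one. It covers instance hours only, because storage is still billed while an instance is stopped.

```python
# Rough check of the savings from stopping a dev/test database outside
# business hours. The 12h x 5d schedule is an assumption, not from the article.
HOURS_PER_WEEK = 7 * 24


def instance_hour_savings(hours_on_per_day: float, days_on_per_week: int) -> float:
    """Fraction of instance hours avoided compared with running 24/7."""
    running_hours = hours_on_per_day * days_on_per_week
    return 1 - running_hours / HOURS_PER_WEEK


savings = instance_hour_savings(12, 5)
print(f"{savings:.0%}")
```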

- Can Meteor scale? Yes, according to ergiotapia: In 2015, my business implemented a Meteor-based real-time vehicle tracking app utilising Blaze, Iron Router, DDP, Pub/Sub. Our Meteor app runs 24hrs/day and handles hundreds of drivers tracking in every few seconds whilst publishing real-time updates and reports to many connected clients. Yes, this means Pub/Sub and DDP. This is easily being handled by a single Node.js process on a commodity Linux server consuming a fraction of a single core’s available CPU power during peak periods, using only several hundred megabytes of RAM. How was this achieved? We chose to use Meteor with MySQL instead of MongoDB. When using the Meteor MySQL package, reactivity is triggered by the MySQL binary log instead of the MongoDB oplog. The MySQL package provides finer-grained control over reactivity by allowing you to provide your own custom trigger functions. Accordingly, we put a lot of thought into our MySQL schema design and coded our custom trigger functions to be as selective as possible to prevent SQL queries from being needlessly executed and wasting CPU, IO and network bandwidth by publishing redundant updates to the client. In terms of scalability in general, are we limited to a single Node.js process? Absolutely not - we use Nginx to terminate the connection from the client and spread the load across multiple Node.js processes. Similarly, MySQL master-slave replication allows us to spread the load across a cluster of servers.

- Be careful out there. Don't work for free. My company sold for $100 million and I got Zilch. How can that be?

- Will A.I. Kill Music? From a musician's point of view. Prompted by Logic offering an AI driven drum kit that offers more variation than the previous methods. Recapitulates the discussions we hear whenever AI encroaches on an industry. The thought/hope is people will always want to see people perform music. As we've discussed for programming, AI could help with specific tasks, like help with mixing. AI is automating what people used to do by hand, like beat detection. The comments are more thoughtful than the video. Manny Prego: I remember when people were lamenting synthesizers, they were going to destroy music. Whole orchestras wiped out by the ominous machines. Obviously it didn't happen. Albert Moreno: Major labels are already using algorithms to make "hits" or to listen if the song can potentially be a hit.

- MITRE ATT&CK™ is a globally-accessible knowledge base of adversary tactics and techniques based on real-world observations. You'll find attacks for all the clouds and operating systems. A fun read.

- Excellent low level explanation of what schedulers do to keep CPUs as busy as possible performing meaningful work. Tokio is more like "node.js for Rust" or "the Go runtime for Rust". Making the Tokio scheduler [Rust’s asynchronous runtime] 10x faster. The optimizations covered are: The new std::future task system; Picking a better queue algorithm; Optimizing for message passing patterns; Throttle stealing; Reducing cross thread synchronization; Reducing allocations; Reducing atomic reference counting. Also, runtime: non-cooperative goroutine preemption.
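
The "throttle stealing" item refers to work stealing, where an idle worker takes queued tasks from a busy sibling instead of sitting idle. Below is a single-threaded toy sketch of the idea, not Tokio's implementation: the class and method names are hypothetical, and taking half of the victim's queue is an assumption chosen for illustration. A real scheduler does this across threads with carefully synchronized queues.

```python
# Toy work-stealing sketch (single-threaded, for illustration only).
# NOT Tokio's scheduler; names and the "steal half" policy are assumptions.
from collections import deque


class Worker:
    def __init__(self, name, tasks=()):
        self.name = name
        self.queue = deque(tasks)

    def steal_from(self, victim) -> int:
        """Move half of the victim's queued tasks (rounded up) into our queue."""
        count = (len(victim.queue) + 1) // 2
        for _ in range(count):
            self.queue.append(victim.queue.popleft())
        return count

    def next_task(self, siblings):
        """Run from the local queue first; steal only when it is empty."""
        if not self.queue:
            for victim in siblings:
                if victim is not self and victim.queue:
                    self.steal_from(victim)
                    break
        return self.queue.popleft() if self.queue else None


busy = Worker("busy", ["t1", "t2", "t3", "t4"])
idle = Worker("idle")
workers = [busy, idle]

task = idle.next_task(workers)
print(task, list(idle.queue), list(busy.queue))
```

Stealing a batch rather than a single task means the idle worker doesn't have to come back to its sibling's queue for every task, which is the kind of cross-thread synchronization the optimizations above try to reduce.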

- It never occurred to me before that a TrafficOS could make use of the entire highway when scheduling traffic. Lane lines and even dividers aren't needed at all. Here's Why Ants Are Practically Immune to Traffic Jams, Even on Crowded Roads: By cooperating in a self-organised system, researchers have found that Argentine ants (Linepithema humile) can adapt to different road conditions and prevent clogging from ever occurring...In fact, compared to humans, these ants could load up the bridge with twice the capacity without slowing down. When humans are walking or driving, the flow of traffic usually begins to slow when occupancy reaches 40 percent. Argentine ants, on the other hand, show no signs of slowing, even when the bridge occupancy reached 80 percent...And they do this through self-imposed speed regulation. When it's moderately busy, for instance, the authors found the ants actually speed up, accelerating until a maximum flow or capacity is reached...Whereas, when a trail is overcrowded, the ants restrained themselves and avoided joining until things thinned out. Plus, at high density times like this, the ants were found to change their behaviour and slow down to avoid more time-wasting collisions...when an ant trail started to clog, individuals returning to the nest blocked the leaving ants, forcing them to find a new route...While ants spread out evenly over lanes, even in emergency situations, humans are more likely to trample each other in our own urgent desires.

- AWS Lambda customer testimonial: Using AWS Lambda and AWS Step Functions, Mercury cut customer onboarding times from 20 minutes to 30 seconds and their “expected costs are $20 USD per 10,000 orders...We follow a serverless-first approach, so almost all of our new services use serverless technologies...Our serverless approach is far cheaper from a scalability and monitoring perspective. With serverless, we have fewer on-call incidents as the service is able to recover on its own, which helps a huge amount with developer fatigue...Using Lambda-based serverless applications, Resnap can run multiple Machine Learning models on an average of 600 photos “which results in thousands of invocations” and still generates a photo book “within one minute.”...[We] relied on AWS Lambda to get [our] platform on the market in under four weeks...Within six months, [we] had scaled to 40,000 users without running a single server...[Our] serverless-based approaches allow [us] to serve ads to audiences 60% faster than with instance-based approaches."...[Thanks to a serverless architecture], Openfit’s front-end latency is about 15 milliseconds."

Soft Stuff:

- pubkey/rxdb: RxDB (short for Reactive Database) is a NoSQL-database for JavaScript Applications like Websites, hybrid Apps, Electron-Apps and NodeJs. Reactive means that you can not only query the current state, but subscribe to all state-changes like the result of a query or even a single field of a document.

- lyft/amundsen (article): a metadata driven application for improving the productivity of data analysts, data scientists and engineers when interacting with data. It does that today by indexing data resources (tables, dashboards, streams, etc.) and powering a page-rank style search based on usage patterns (e.g. highly queried tables show up earlier than less queried tables). Think of it as Google search for data.

Pub Stuff:

- AT&T Archives: The UNIX Operating System: This film "The UNIX System: Making Computers More Productive", is one of two that Bell Labs made in 1982 about UNIX's significance, impact and usability. Even 10 years after its first installation, it's still an introduction to the system. The other film, "The UNIX System: Making Computers Easier to Use", is roughly the same, only a little shorter. The former film was geared towards software developers and computer science students, the latter towards programmers specifically. The film contains interviews with primary developers Ritchie, Thompson, Brian Kernighan, and many others. Also, Vintage Computer Festivals.

- Delay is Not an Option: Low Latency Routing in Space: SpaceX has filed plans with the US Federal Communications Commission (FCC) to build a constellation of 4,425 low Earth orbit communication satellites. It will use phased array antennas for up and downlinks and laser communication between satellites to provide global low-latency high bandwidth coverage. To understand the latency properties of such a network, we built a simulator based on public details from the FCC filings. We evaluate how to use the laser links to provide a network, and look at the problem of routing on this network. We provide a preliminary evaluation of how well such a network can provide low-latency communications, and examine its multipath properties. We conclude that a network built in this manner can provide lower latency communications than any possible terrestrial optical fiber network for communications over distances greater than about 3000 km.

- The Five-Minute Rule 30 Years Later and Its Impact on the Storage Hierarchy: In this article, we revisit the five-minute rule three decades after its inception. We recomputed break-even intervals for each tier of the modern, multi-tiered storage hierarchy and use guidelines provided by the five-minute rule to identify impending changes in the design of data management engines for emerging storage hardware. We summarize our findings here: HDD is tape. The gap between DRAM and HDD is increasing as the five-minute rule valid for the DRAM-HDD case in 1987 is now a four-hour rule. This implies the HDD-based capacity tier is losing relevance for not just performance sensitive applications, but for all applications with a non-sequential data access pattern. Non-volatile memory is DRAM. The gap between DRAM and SSD is shrinking. The original five-minute rule is now valid for the DRAM-SSD case, and the break-even interval is less than a minute for newer non-volatile memory (NVM) devices like 3D-XPoint. This suggests an impending shift from DRAM-based database engines to flash or NVM-based persistent memory engines. Cold storage is hot. The gap between HDD and tape is also rapidly shrinking for sequential workloads. New cold storage devices that are touted to offer second-long access latency with cost comparable to tape reduce this gap further. This suggests the HDD-based capacity tier will soon lose relevance even for non-performance-critical batch analytics applications that can be scheduled to run directly over newer cold storage devices.
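
The break-even interval behind the rule is a simple cost ratio: how long a page can sit unused in memory before caching it costs more than re-reading it from the slower tier. The sketch below uses the commonly cited formulation of that ratio. The input figures are illustrative late-1990s-style numbers chosen as assumptions for the example, not values taken from the article summarized above.

```python
# Sketch of the five-minute-rule break-even calculation.
# Formula: (pages per MB of RAM / accesses per second per disk)
#          * (price per disk drive / price per MB of RAM)
# All input values below are illustrative assumptions.


def break_even_seconds(pages_per_mb_ram: float,
                       accesses_per_sec_per_disk: float,
                       price_per_disk: float,
                       price_per_mb_ram: float) -> float:
    """Reference interval at which caching a page costs the same as re-reading it."""
    return (pages_per_mb_ram / accesses_per_sec_per_disk) * (
        price_per_disk / price_per_mb_ram
    )


interval = break_even_seconds(
    pages_per_mb_ram=128,          # 8 KB pages
    accesses_per_sec_per_disk=64,  # random IOs per second
    price_per_disk=2000,           # USD per drive
    price_per_mb_ram=15,           # USD per MB
)
print(f"{interval / 60:.1f} minutes")
```

Pages touched more often than the break-even interval are cheaper to keep in memory; anything colder belongs on the slower tier. Plugging in current device prices and IOPS is how the article arrives at very different intervals for different tier pairs.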

- Real-time optimal control via Deep Neural Networks: study on landing problems (article): Recent research on deep learning, a set of machine learning techniques able to learn deep architectures, has shown how robotic perception and action greatly benefits from these techniques. In terms of spacecraft navigation and control system, this suggests that deep architectures may be considered now to drive all or part of the on-board decision making system.

- The Post-Incident Review: Featured postmortems from Honeycomb: “You Can’t Deploy Binaries That Don’t Exist”; Monzo: “We Had Issues With Monzo On 29th July. Here's What Happened, And What We Did To Fix It.”; Stripe: “Significantly Elevated Error Rates on 2019‑07‑10”; Google Cloud: “Networking Incident #19009”

- On the Classical Hardness of Spoofing Linear Cross-Entropy Benchmarking (article): In this short note, we adapt an analysis of Aaronson and Chen [2017] to prove a conditional hardness result for Linear XEB spoofing. Specifically, we show that the problem is classically hard, assuming that there is no efficient classical algorithm that, given a random n-qubit quantum circuit C, estimates the probability of C outputting a specific output string, say 0^n, with variance even slightly better than that of the trivial estimator that always estimates 1/2^n. Our result automatically encompasses the case of noisy circuits.

Reader Comments (1)

"23%: Google's profit decline. Headcount up to 114K from 94K a yaer ago."

In this sentence, the word "YEAR" has a typo