Stuff The Internet Says On Scalability For March 22nd, 2019

Friday, March 22, 2019 at 9:07AM

Wake up! It's HighScalability time:

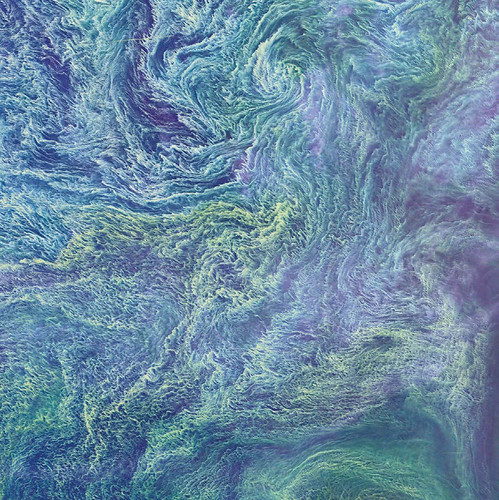

Van Gogh? Nope. A satellite image of phytoplankton populations or algae blooms in the Baltic Sea. (NASA)

Do you like this sort of Stuff? I'd greatly appreciate your support on Patreon. Know anyone who needs cloud? I wrote Explain the Cloud Like I'm 10 just for them. It has 41 mostly 5 star reviews. They'll learn a lot and love you even more.

- .5 billion: weekly visits to Apple App store; $500m: new US exascale computer; $1.7 billion: EU's newest fine on Google; flat: 2018 global box office; up: digital rentals, sales, and subscriptions to streaming services; 43%: Dutch say let AI run the country; 2 min: time from committing an AWS key to Github to first attack; 40: bugs in blockchain platforms found in 30 days; 100%: Norwegian government 2025 EV target;

- Quotable Quotes:

- @raganwald: You know what would really help restore confidence? Have members of the executive leadership team, and Boeing’s board of directors, fly on 737 Max’s, every day, for a month. Let them dogfood the software patch. I am 💯 serious.

- GPT-2: My heart, why come you here alone? The wild thing of my heart is grown. To be a thing, Fairy, and wild, and fair, and whole.

- skamille: I worry that the cloud is just moving us back to a world of proprietary software. Hell, many of these providers are just providing open source API compatibility with custom-built backends! What happens when no new open source comes out of the smaller companies, and the big-3 decide they don't really need or want to play nice anymore?

- @adriancolyer: "eRPC (efficient RPC) is a new general-purpose remote procedure call (RPC) library that offers performance comparable to specialized systems, while running on commodity CPUs in traditional datacenter networks based on either lossy Ethernet or lossless fabrics… We port a production grade implementation of Raft state machine replication to eRPC without modifying the core Raft source code. We achieve 5.5 µs of replication latency on lossy Ethernet, which is faster than or comparable to specialized replication systems that use programmable switches, FPGAs, or RDMA."

- @matthewstoller: I just looked at Netflix’s 10K. The company is burning through cash. $3B this year, $4B next year. Debt skyrocketing. It all looks good when capital is cheap I guess.

- @BrentToderian: What city went from 14% of all trips by bike in 2001, to 22% by 2012, then leaped to 30% in 3 years by 2015, & 35% by 2018? Thanks to #Ghent, Belgium’s Deputy Mayor @filipwatteeuw for sharing the secrets of their urban biking transformation (with jumps in walking & transit too).

- @slobodan_: "It is serverless the same way WiFi is wireless. At some point, the e-mail I send over WiFi will hit a wire, of course"

- Clive Thompson: The thrust of Silicon Valley is always to take human activity and shift it into metabolic overdrive. And maybe you’ve wondered, why the heck is that? Why do techies insist that things should be sped up, torqued, optimized? There’s one obvious reason, of course: They do it because of the dictates of the market. Capitalism handsomely rewards anyone who can improve a process and squeeze some margin out. But with software, there’s something else going on too. For coders, efficiency is more than just a tool for business. It’s an existential state, an emotional driver.

- @steren: The function abstraction is perfect until you hit its limit (language, system libs, custom binaries...). Containers give you this flexibility. Developers shouldn't have to lose benefits of serverless (pay per use, autoscale, managed) to get flexibility.

- @chrisalbon: First they ignore you, then they laugh at you, then they release your exact product but with 1000x the market reach and 100x the resources because of the sheer scale of their platform, then you pivot.

- Rita Liao: Meanwhile, cloud revenues doubled to 9.1 billion yuan in 2018, thanks to Tencent’s dominance in the gaming sector as its cloud infrastructure now powers over half of the China-based games companies and is following these clients overseas. Tencent meets Alibaba head-on again in the cloud sector. For comparison, Alibaba’s most recent quarterly cloud revenue was 6.6 billion yuan. Just yesterday, the ecommerce leader claimed that its cloud business is larger than the second through eighth players in China combined.

- @awsgeek: This weekend I was rendering a 3D scene with Blender on EC2. Per frame GPU render time was about 6 seconds, back of napkin math said the job wouldn't finish for 32 hours. While I waited, I switched to AWS Lambda & the same job finished in 11 minutes. 11 minutes! I set up a Lambda function to generate a task per frame, an SQS queue for pending tasks, and another Lambda to do the rendering. Here's how difficult it was to use Blender in Lambda: $ pip install bpy_lambda All the hard work has already been done! Sure, I could have spun up a fleet of EC2 spot instances, optimized instance rendering, & parallelized rendering (similar to Lambda), but the speed and convenience of using AWS Lambda was just too attractive.
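
The napkin math in that tweet can be unpacked in a few lines. This is only a sketch of the arithmetic: the frame count and the implied parallelism are derived from the quoted figures, and the tweet does not say how long a single frame took inside Lambda, so the concurrency number is a lower bound under the assumption that a Lambda render is no faster than the 6-second GPU render.

```python
import math

# Figures quoted in the tweet.
SECONDS_PER_FRAME_GPU = 6   # per-frame render time on the EC2 GPU instance
SERIAL_HOURS = 32           # estimated wall-clock time when rendering frame by frame
LAMBDA_MINUTES = 11         # wall-clock time with one Lambda task per frame

# Derived values (not stated in the tweet).
frame_count = SERIAL_HOURS * 3600 / SECONDS_PER_FRAME_GPU
speedup = (SERIAL_HOURS * 60) / LAMBDA_MINUTES

# Assumption: each Lambda render takes at least as long as the GPU render,
# so at least this many frames must have been rendering at once.
min_concurrency = math.ceil(speedup)

print(f"frames implied: {frame_count:.0f}")
print(f"wall-clock speedup: {speedup:.1f}x")
print(f"minimum concurrent renders: {min_concurrency}")
```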

- Kai Polsterer: Coming up with a theory, with reasoning, I think demands creativity. Every time you need creativity, you will need a human. To be creative, you have to dislike being bored. And I don’t think a computer will ever feel bored.

- Ben Kehoe: The point is not functions, managed services, operations, cost, code, or technology. The point is focus — that is the why of serverless. Serverless is a consequence of a focus on business value. It is a trait. It is a direction, not a destination. Climb the never-ending serverless ladder.

- Crosby: The real world is stateful. Stateless and databases have allowed the cloud to scale out superbly, but is massively wasteful of CPU. For every cycle of useful edge compute at memory speed, a stateless centralized Architecture wastes a billion cycles. That's enough for a raspberry pi at the edge to outrun a powerful cloud instance! So the future of the edge is stateful, and computation is driven by data in real time. An inference cycle at the edge takes less time than getting one packet of data from the edge to the cloud! Swim is a 2MB extension to the JVM. The developer simply defines a schema on the data and the methods on the digital twins of each type that are called when data arrives. She also defines the computational objects that will analyze the digital twins in the model. The powerful capabilities in swim put the bleeding edge of analytics, learning and inference in the palm of the dev, who can deliver deep insights and predictions in a few lines of java. Swim also has an in-browser implementation that can link to digital twins to deliver real time UIs in a few lines of js.

- Diego Pacheco: there is a culture SHIFT focusing on being a user-centric company, running experiments in order to discover new business models, new customer experience and new features. Don't forget that how we do things in TECH matters a lot and we need to pay attention to TECH decision so STOP getting the cheapest vendors and worst developers.

- Gené Teare: dollars for below $5 million is projected to be $8.5 billion, close to the height in 2015 of $8.6 billion. Deal counts are down from the height by a fifth, which does mean less seed-funded startups in the U.S. Provided that capital allocation is greater than $5 million continues to grow, less seed funded startups will die before raising a Series A. More companies have a chance to succeed, which is good for seed funds, and ultimately for the whole ecosystem.

- @aripalo: A #serverless enthusiast walks into a bar, refactors the bartender as a Lambda function: Bartender nowhere to be seen, 1000 customers come in, first customer orders a beer, bartender arrives a bit slowly, next 999 beers delivered really fast, bartender disappears again.

- Mark D. Hill: Bottleneck Analysis gives the maximum throughput of a system from the throughputs of the system’s subsystems with two rules that can be applied recursively: The maximum throughput of K sub-systems in parallel is the sum of the subsystem throughputs. The maximum throughput of K sub-systems in series is the minimum of the subsystem throughputs.
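
The two rules are easy to apply recursively in code. Here is a minimal sketch; the `Leaf`, `Parallel`, and `Series` types and the example system (one load balancer, three app servers, one database, with made-up throughputs) are illustrative assumptions, not part of Hill's write-up.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Leaf:
    throughput: float  # e.g. requests per second


@dataclass
class Parallel:
    parts: List["Node"]


@dataclass
class Series:
    parts: List["Node"]


Node = Union[Leaf, Parallel, Series]


def max_throughput(node: Node) -> float:
    """Apply the two bottleneck rules recursively."""
    if isinstance(node, Leaf):
        return node.throughput
    rates = [max_throughput(part) for part in node.parts]
    if isinstance(node, Parallel):
        return sum(rates)  # parallel subsystems add up
    return min(rates)      # a series is limited by its slowest stage


# Hypothetical system: load balancer -> three app servers -> one database.
system = Series([
    Leaf(10_000),
    Parallel([Leaf(1_500), Leaf(1_500), Leaf(1_500)]),
    Leaf(4_000),
])

print(max_throughput(system))
```

In this made-up example the app tier can do 4,500 requests per second in aggregate, so the database at 4,000 is the bottleneck and adding app servers would not help.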

- AWS: Amazon ElastiCache for Redis adds dynamic network processing to enhance I/O handling in Redis 5.0.3. By utilizing the extra CPU power available in nodes with four or more vCPUs, ElastiCache transparently delivers up to 83% increase in throughput and up to 47% reduction in latency per node.

- @adriancolyer: Why wait 2 RTTs for consistent, durable operations across a set of machines when you can do it in one? CURP exploits operation commutativity to bring an impressive performance boost to replication. I think you'll be seeing a lot more of this...

- @ben11kehoe: #serverless architectures render a good chunk of the monolith/microservice question moot. You've got a big infrastructure graph of services and functions. You can choose to draw deployment boundaries where it's best for you. It isn't a question of building one way or the other.

- Ashwin Bhat: When all was said and done, our average daily [DynamoDB] cost was down about 90%! From our testing, we were unable to detect any hits to performance either. Overall, [On-Demand Pricing is] a huge win. DynamoDB allows you to switch back and forth once per day, so a well-timed switchover could save you a lot of money.

- @ryanhuber: Kubernetes: Because every problem can be solved with a Google sized solution.

- @benedictevans: Last week: Google moves ML speech-to-text from the cloud to the edge. This week: Google moves gaming from the edge to the cloud. We have vast computing power at both ends and are working out what goes where.

- Low-tech Magazine: The web server uses between 1 and 2 watts of power (depending on the number of visitors), meaning that it requires between 24 Wh and 48 Wh of electricity per day. The web server is now powered by a 50 Wp solar panel and a 24 Wh Li-Po battery (3.7 V, 6.6 Ah). That’s enough energy storage to run the site for 12 to 24 hours.
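
The numbers in that quote check out with simple arithmetic. The sketch below reads the battery rating as 6.6 amp-hours, which is an assumption, but it is the reading under which 3.7 V works out to the quoted 24 Wh.

```python
HOURS_PER_DAY = 24


def daily_energy_wh(power_w: float) -> float:
    """Energy used in one day at a constant power draw."""
    return power_w * HOURS_PER_DAY


def battery_capacity_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal stored energy: volts times amp-hours."""
    return voltage_v * capacity_ah


def runtime_hours(capacity_wh: float, power_w: float) -> float:
    """How long a full battery lasts at a constant draw, ignoring losses."""
    return capacity_wh / power_w


low_w, high_w = 1.0, 2.0                  # quoted power range
capacity = battery_capacity_wh(3.7, 6.6)  # assumed 6.6 Ah rating

print(f"daily use: {daily_energy_wh(low_w):.0f} to {daily_energy_wh(high_w):.0f} Wh")
print(f"battery: {capacity:.1f} Wh")
print(f"runtime: {runtime_hours(capacity, high_w):.1f} to "
      f"{runtime_hours(capacity, low_w):.1f} hours")
```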

- @ben11kehoe: #serverless service mesh: when you have a constellation of independently-deployed microservices, each with a set of resources it wants to expose (API GW, S3 bucket, Kinesis stream either as source or sink), how do they dynamically discover and connect?

- @copyconstruct: My top 3 papers on serverless 1. Serverless Computing: One Step Forward, Two Steps Back https://twitter.com/copyconstruct/status/1075086886610169857 …2. Cloud Programming Simplified: A Berkeley View on Serverless https://twitter.com/copyconstruct/status/1105880256643108864 … 3. Peeking Behind the Curtains of Serverless Platforms

- @cloud_opinion: "how do I reach Google if needed urgently?" - this is the biggest question on the minds of many GCP customers

- Fauna: A good way to think about isolation is in terms of the breadth of potential anomalies. The lower the isolation level, the more types of anomalies can occur, and the harder it is to reason about application behavior both at steady-state and under faults.

- Lily Hay Newman: The hackers would exploit flaws in how SPEI validated sender accounts to initiate a money transfer from a nonexistent source like “Joe Smith, Account Number: 12345678.” They would then direct the phantom funds to a real, but pseudonymous account under their control and send a so-called cash mule to withdraw the money before the bank realized what had happened.

- Mark Schwartz: All of serverless’s implications can be summarized by stating that serverless is bringing business value back into the focus of software. Serverless is indeed much more than a simple “abstraction level over a company’s IT infrastructure with an increased speed of delivery.” It’s the focus on business value, the serverless pay-per-use business model, FinDev practices, “Build vs Outsource,” “Build vs Optimize,” lower business risk, and a lower break-even point, along with the variable business model, that might change the tide of business software.

- @withfries2: 1/ It's astounding + awesome that @NASANewHorizons is sending back images from beyond Pluto. Earlier this year, at >4.4 billion miles from home, it started transmitting data from its flyby of MU69 2/ We're not exactly getting LTE speeds at that distance, so we'll keep on receiving data until summer 2020

- @BrianRoemmele: Apple AirPods H1 chip (SOCs) has the processing power of an iPhone 4—in each ear! Class 1 Bluetooth 5. H1 die size is ~12mm2.

- Zack Whittaker: Norsk Hydro, one of the largest global aluminum manufacturers, has confirmed its operations have been disrupted by a ransomware attack.

- Richard Sutton: We have to learn the bitter lesson that building in how we think we think does not work in the long run. The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach. One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.

- Rule11: Moving data ultimately still takes time, still takes energy; the network still (often) needs to be tuned to the data being moved. Serverless is a great technology for some solutions—but there is ultimately no way to abstract out the hard work of building an entire system tuned to do a particular task and do it well. When you face abstraction, you should always ask: what is gained, and what is lost?

- @robot_wombat: Have you ever had a vending machine drop an extra item? I once read a manual and found it that they can be programmed with a "winning mode" that gives out a free item occasionally to attract people to the "lucky" vending machine. That's some unexpected social engineering...

- @QuinnyPig: Counterpoint: 1 petabyte costs $12k a year or so in Glacier Deep Archive.

- David Rosenthal: The use of lossless compression by architects of the lower layers of the preservation function should not cause concern. It is extremely unlikely to impair overall preservation system reliability, though it is equally unlikely to provide significant cost savings because almost all the data being stored will already have been compressed at the top of the preservation stack.

- Adrian Colyer: Inspired by these insights, the authors define a new class of topologies called FatClique, which combine the hierarchical structure of Clos with the edge expansion capabilities of expander graphs. There are three levels in the hierarchy. A clique of switches form a sub-block. Cliques of sub-blocks come together to form blocks. And cliques of blocks come together to form the full FatClique topology.

- Ed Sperling: Taken as a whole, any of these developments could have a significant impact on chip manufacturing. Consolidation at the leading edge into one giant pure-play foundry (TSMC) and two IDMs (Samsung and Intel) could give way to a much more diverse collection of foundries, with specialization based on materials. And while none of the most advanced-node foundry operations are in jeopardy, materials are likely to make the future of chip manufacturing far more heterogeneous and diverse than it is today.

- Nikunj Raghuvanshi: our main idea is, actually, to look at the literature in lighting again and see the kind of path they’d followed to kind of deliver this computational challenge of how you do these extensive simulations and still hit that stringent CPU budget in real time. And one of the key ideas is you precompute. You cheat. You just look at the scene and just compute everything you need to compute beforehand, right? Instead of trying to do it on the fly during the game. So, it does introduce the limitation that the scene has to be static. But then you can do these very nice physical computations and you can ensure that the whole thing is robust, it is accurate, it doesn’t suffer from all the sort of corner cases that approximations tend to suffer from, and you have your result. You basically have a giant look-up table. If somebody tells you that the source is over there and the listener is over here, tell me what the loudness of the sound would be. We just say okay, we have this giant table, we’ll just go look it up for you. And that is the main way we bring the CPU usage into control. But it generates a knock-off challenge that now we have this huge table, there’s this huge amount of data that we’ve stored and it’s 6-dimensional. The source can move in 3-dimensions and the listener can move in 3-dimensions. So, we have the giant table which is terabytes or even more of data.
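
To make the precompute-then-look-up idea concrete, here is a toy sketch. Everything in it is an assumption for illustration: the grid quantization, the function names, and especially `simulate_loudness`, which uses a simple distance falloff as a stand-in for the expensive physical simulation described in the interview.

```python
import itertools
import math
from typing import Dict, Tuple

Cell = Tuple[int, int, int]


def simulate_loudness(source: Cell, listener: Cell) -> float:
    """Stand-in for the costly offline simulation (simple distance falloff)."""
    d = math.dist(source, listener)
    return 1.0 / (1.0 + d * d)


def precompute(grid_size: int) -> Dict[Tuple[Cell, Cell], float]:
    """Offline step: evaluate every (source cell, listener cell) pair once."""
    cells = list(itertools.product(range(grid_size), repeat=3))
    return {(s, l): simulate_loudness(s, l) for s in cells for l in cells}


def to_cell(position, cell_size: float, grid_size: int) -> Cell:
    """Quantize a continuous 3-D position to a grid cell, clamped to the grid."""
    return tuple(
        min(grid_size - 1, max(0, int(c // cell_size))) for c in position
    )


def lookup(table, source_pos, listener_pos, cell_size: float, grid_size: int) -> float:
    """Runtime step: no simulation, just one dictionary read."""
    key = (to_cell(source_pos, cell_size, grid_size),
           to_cell(listener_pos, cell_size, grid_size))
    return table[key]


GRID = 4
table = precompute(GRID)
print(len(table))  # number of entries grows as grid_size ** 6
print(lookup(table, (0.2, 0.2, 0.2), (3.5, 0.0, 0.0), 1.0, GRID))
```

Even this tiny 4-cell-per-axis grid needs 4^6 entries, which hints at why a real scene produces the terabyte-scale table mentioned in the quote.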

- Maybe some truisms no longer apply at some system change complexity inflection point. Truism 1: Complex systems evolve from simpler systems. Truism 2: Rebuilding a new system from scratch is riskier than changing an existing system. When do changes to a system transform it into something fundamentally new? Does human psychology make us danger blind because we can't see when an old transformed thing has become a new different thing?

- @Satcom_Guru: I favor incompetence as the cause for bad decisions, before leaping to impropriety or to malicious intent. Incompetence can thrive within any layer of an organization. Sometimes all that is missing is just one inspired individual to shine the light. Ignorance wins in the shadows.

- Peter Lemme: Any assertion that the EUMs are not doing their best to do the right thing is particularly galling. Please don't disparage these individuals categorically. Please accept that they are humans that can make mistakes. There are more layers between the EUM and anyone on the FAA that has pertinent system knowledge than in days gone by. It could be better, but the FAA needs to take the lead on that, and our government seems wholly focused on abandoning regulation. In the meantime, take more faith on the EUMs and the ODA integrity.

- Peter Lemme: Finally, Boeing changed the 737 MAX cutout switches. There appears to be no awareness of the change, yet they function differently. Before, there was a switch that disabled the autopilot stab trim command alone. The flight crew could continue to use electric trim with the autopilot trim disabled.

- The 737Max and Why Software Engineers Might Want to Pay Attention: If you stand back and replace “737" with “system” and “pilot” with “ops engineer,” the situation with the 737Max is a case of new, market-driven business requirements prompting a redesign to a widely-considered stable and reliable production system. Those requirements necessitated engineers to change fundamental handling characteristics of the system. To cope, they added additional monitoring and control systems. One of these systems was added “for safety,” but it was also capable of overriding operator input during critical operational phases, where activity is high tempo and high consequence. This new safety automation is capable of overriding operator input in silence and in ways that were poorly documented by designers, unclear to operators, and promised by developers that nobody had to get new training on — a selling point — and this safety automation proved to cause the system to become critically unrecoverable in, at least, one case.

- @trevorsumner: Some people are calling the 737MAX tragedies a #software failure. Here's my response: It's not a software problem. It was an * Economic problem that the 737 engines used too much fuel, so they decided to install more efficient engines with bigger fans and make the 737MAX. * Airframe problem. They wanted to use the 737 airframe for economic reasons, but needed more ground clearance with bigger engines.The 737 design can't be practically modified to have taller main landing gear. The solution was to mount them higher & more forward. This led to an * Aerodynamic problem. The airframe with the engines mounted differently did not have adequately stable handling at high AoA to be certifiable. Boeing decided to create the MCAS system to electronically correct for the aircraft's handling deficiencies. During the course of developing the MCAS, there was a * Systems engineering problem. Boeing wanted the simplest possible fix that fit their existing systems architecture, so that it required minimal engineering rework, and minimal new training for pilots and maintenance crews. Nowhere in here is there a software problem. The computers & software performed their jobs according to spec without error. The specification was just shitty. Now the quickest way for Boeing to solve this mess is to call up the software guys to come up with another band-aid.

- B789: Supposedly, Boeing already has a software fix in place and it takes about an hour to apply to each jet. 350ish MAX's are in fleets, so that's a solid 15-20 days of repair time to split up between a lot of people. The issue with the Lion Air crash is faulty AOA data from one sensor failing and the MCAS system relies solely on AOA. The problem there is the 737 only has two AOA sensors, so if one goes bad, MCAS has to make a decision on which one is right. Conversely, the A320 has a similar MCAS type system, but has 3 AOA sensors. It picks the best two out of three, so if one goes bad, then there are still 2 left to give accurate data. The software fix is to have the MCAS system consider more inputs than just AOA so there is not a single failure mode.
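
The difference between two and three sensors that the commenter describes can be sketched generically. This is not Boeing's or Airbus's actual logic; it is a textbook-style illustration with hypothetical readings and a hypothetical tolerance. With three sensors a mid-value select simply outvotes one bad reading, while with two sensors the best you can do is notice they disagree.

```python
from statistics import median
from typing import Optional, Sequence


def vote_three(readings: Sequence[float]) -> float:
    """Mid-value select: one wild reading cannot move the result."""
    if len(readings) != 3:
        raise ValueError("expected exactly three readings")
    return median(readings)


def vote_two(a: float, b: float, tolerance: float) -> Optional[float]:
    """With two sensors, disagreement is detectable but not resolvable.

    Returns the average when the sensors agree within `tolerance`,
    or None to signal that the value should not be trusted.
    """
    if abs(a - b) > tolerance:
        return None
    return (a + b) / 2


# Hypothetical angle-of-attack readings in degrees; the last sensor has failed.
print(vote_three([5.1, 5.3, 74.5]))         # the failed sensor is outvoted
print(vote_two(5.1, 74.5, tolerance=2.0))   # disagreement detected, no usable value
```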

- Gnascher: The competitive pressure is that Airbus was building a competitive airplane targeting a similar market space. Boeing wanted to compete by delivering a 737 upgrade that was more efficient, capable of longer range and larger payload, and didn't require a new "Type Certification" for pilots (which is a major cost for an airline). That last point is important because if an airline already has a Boeing fleet, they are offering a strong incentive to buy Boeing (even if it comes out after the Airbus) because they'll be saving a huge amount of money just in training budget.

- Charles_Dexter_Ward: Reminds me of the Ariane 5 rocket failure. They reused some of the guidance software from the Ariane 4 even though the new rocket had much greater horizontal acceleration capabilities which exceeded what the Ariane 4 could do and also exceeded the software's specified inputs. The software threw an exception when it encountered the out-of-bounds input as was indicated per the specification. No amount of software tools, methodologies, languages, &c. will help in these cases as they are rooted in bad specification.

- possessed_flea: I have worked in this exact environment for a competitor to Boeing. Software engineers have exactly 0 say in the initial designs of any interface, that all falls on the SYSENG team, adding an additional 'whitespace' or piece of punctuation to a user interface causes an absolute massive shitshow where you end up in a meeting with a minimum of 3 different managers and 5 people from syseng and 2 from V&V all wondering how a deviation from the User Requirements Specification got that far into testing. What is the most likely situation was that syseng put together a list of requirements, which included nothing about feedback.

- Four Key Product Principles from WeChat’s Creator: #1 The Golden Principle: The user is your friend. Avoid all monetization that might negatively impact daily platform use. Product design should not be reduced to “processes” that can be continuously optimized by data-driven teams. #2 Technology is for efficiency. The mission of technology should be solely to improve a user’s efficiency, and that the greater industry’s focus on time spent in app is flawed. #3 KPIs (key performance indicators) are secondary. Too many are distracted and misled by this focus on traffic. Our teams have developed the habit of thinking about the deeper meaning behind each function and service. #4 Decentralized ecosystem. WeChat’s bottom navigation bar has only four tabs: ‘chats’, ‘contacts’, ‘discover’, and ‘me’. The catch however, is that each user has to actively search for and discover all of these 3rd-party services themselves. Zhang pushes WeChat instead to build a many-sided system, where WeChat resides as the “caretaker” of the overall environment. In this decentralized ecosystem, developers are forced to earn their own growth ideally by providing the most value they can to end users. If you surround yourself with metrics, you probably will never meet them.

- Looking for your next app? Sports betting might be a good niche. The sports data revolution will not be televized: Indications all point to the demise of the traditional TV game in favor of a faster, more dynamic and ultimately more involved sports experience. TV viewing figures for this year’s Superbowl reached historic lows at 98.2m, dropping 12% from 2017, while streaming audiences and those watching across multiple devices jumped 20%; the average digital sports fan represents 40% of a global online gambling market with 9.8% CAGR, set to be worth upwards of $73.45bn by 2024; There is also the Chinese government’s campaign to grow its sports sector into a £647 billion industry by 2025; just 17% of respondents placed bets over mobile in the US. Once regulations are loosened this figure could likely double; almost 70% willing to change bet-provider at the first sign of inconvenience. Also, Gambling on the go

- Protocols should be studied and practiced more. Protocols are Important: Martin Thompson at QCon London (slides): Thompson highly recommends that we document our protocols. When comparing an API with a protocol he describes the API as a one-dimensional and anaemic view compared to a protocol; To deal with this Thompson refers to a paper from 1997 by Floyd et al: A Reliable Multicast Framework for Light-weight Sessions and Application Level Framing, where they introduced an algorithm for doing a very short random delay before a client returns a NAK, but only if no other client already has returned a NAK. This minimizes the number of NAKs sent, while still resending all data needed; Encoding. Don’t use text protocols, use binary protocols; Sync vs Async. Synchronous protocols are very slow, and they block. With increased latency they get even slower and you get less done. Using an asynchronous protocol, you can get so much more done, and it still works fine with increased latency; Guaranteed Delivery, something that has been proven to be wrong so many times. For Thompson, applications should not rely on the underlying transport to deal with all errors that can occur, they should have their own feedback and recovery protocols.
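
The random-delay NAK idea is easy to see in a small simulation. This is a simplified model, not the algorithm from the Floyd et al. paper: it assumes every receiver misses the same packet at time zero, every NAK is multicast and reaches all other receivers after the same `propagation` delay, and timers are drawn uniformly. The function name and parameters are made up for this sketch.

```python
import random


def naks_sent(num_receivers: int, max_delay: float,
              propagation: float, rng: random.Random) -> int:
    """Count how many receivers end up sending a NAK for one lost packet.

    Each receiver waits a random time in [0, max_delay] before sending.
    It stays silent if it hears someone else's NAK before its own timer fires.
    """
    timers = sorted(rng.uniform(0.0, max_delay) for _ in range(num_receivers))
    first_heard_at = timers[0] + propagation  # when the earliest NAK reaches everyone
    return sum(1 for t in timers if t <= first_heard_at)


rng = random.Random(42)

# No random delay: every receiver fires at once, so the sender is flooded.
print(naks_sent(100, max_delay=0.0, propagation=0.01, rng=rng))

# Random delay well above the propagation time: most NAKs are suppressed.
print(naks_sent(100, max_delay=1.0, propagation=0.01, rng=rng))
```

The trade-off in this model is that a longer maximum delay suppresses more duplicates but also delays the repair request, which is why the quote stresses that the delay is very short.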

- Serverless is not just functions. There's an intricate enfolded web of configuration, permissions, deployment, monitoring, billing, and coordination involved. The platonic ideal of pure solutions running in an idealized form space is still very far away. Why I, A Serverless Developer, Don’t Care About Your Containers.

- How does this new memory tier perform? Researchers Scrutinize Optane Memory Performance: When Intel starts shipping its “Cascade Lake” Xeons in volume soon, it will mark a turning point in the server space. But not for processors – for memory. The Cascade Lake Xeon SP will be the first chip to support Intel’s Optane DC Persistent Memory, a product that will pioneer a new memory tier that occupies the performance and capacity gap between DRAM and SSDs...Using internal benchmarking, Intel claims Optane DC can deliver 11 times the write performance and 9 times the read performance for Spark SQL workloads, compared to a DRAM-only system...at least for Memcached and Redis, two common in-memory frameworks. Compared to a DRAM-only set-up, throughput decreased by 8.6 percent for Memcached and 19.2 percent for Redis, when Optane DC modules were used as the memory, with DRAM as cache. Also, NVM-Express Storage Goes Mainstream Over Ethernet Fabrics

- Nicely done. How Does a Database Work?

- Netflix mounts cloud objects as local files via FUSE. Stream the right bits of a remote object efficiently and expose those bits as a file on the filesystem. MezzFS — Mounting object storage in Netflix’s media processing platform: We’ve been using MezzFS in production for 5 years, and have validated it at scale — during a typical week at Netflix, MezzFS performs ~100 million mounts for dozens of different use cases and streams ~25 petabytes of data...Stream objects — MezzFS exposes multi-terabyte objects without requiring any disk space...Assemble and decrypt parts — Our object storage service splits objects into many parts and stores them in S3. MezzFS knows how to assemble and decrypt the parts...Mount multiple objects — Multiple cloud objects can be mounted on the local filesystem simultaneously...Disk Caching — MezzFS can be configured to cache objects on the local disk...Mount ranges of objects — Arbitrary ranges of a cloud object can be mounted as separate files on the local file system. This is particularly useful in media computing, where it is common to mount the frames of a movie scene as separate files...Regional caching — Netflix operates in multiple AWS regions...we only pay the transfer costs for one worker, and the rest use the cached object.

- How did Apex grow to handle 1 million concurrent players within 72 hours? Mostly GCP. Apex Legends: "Apex Legends does things on a much smaller scale – it features 60 people per single game world. Apex offers something called twitch gameplay - a reaction-based process where a fraction of a second can mean the difference between victory and defeat. In this scenario, responsiveness is paramount, and any technical issues will see players leave...The game wears its infrastructure credentials on its sleeve: as you log in, a tab called ‘Data Centers’ is one of the first things you will see. It shows a total of 44 different facilities around the world: Google Compute Engine sites are easy to identify by the ‘GCE’ tag – the rest are a combination of facilities from AWS and Microsoft Azure, plus some bare metal servers." Their networking team only had 3 people in it. Google's new Stadia streaming game service relies heavily on custom AMD data center GPUs to power its 7,500 edge nodes.

- How many times have we been warned not to rewrite TCP in UDP? Is QUIC the exception that proves the rule? Or a hack that changes everything for little gain over proven technology? Why Fastly loves QUIC and HTTP/3: Google saw a 16.7% reduction in end-to-end search latency for the highest latency desktop connections; 14.3% reduction for the highest latency mobile ones; 8.7% reduction in rebuffering...QUIC is much better placed to evolve rapidly because it is built on top of UDP and will commonly run in userspace, making it possible to deploy rapid changes at both servers and clients...QUIC addresses this problem by moving the stream layer of HTTP/2 into a generic transport, to avoid head-of-line blocking both at the application and transport layers. It does that by building a new set of services like TCP’s on top of UDP...QUIC incorporates an encryption scheme that reuses the machinery of TLS 1.3 to ensure that applications’ connections are confidential, authenticated, and integrity-protected...QUIC introduces significant flexibility by divorcing a sender’s view of traffic from a receiver’s view. Without getting into the weeds here, separating these two essentially means that we can optimize our serving without requiring changes to the receivers. Also, Heavy Networking 436: Will QUIC Collapse The Internet?

- No Reservations: A Definite Guide to EC2 Reserved Instances: RIs are a billing concept. When you purchase an RI, AWS will automatically apply a discount to your EC2 bill for an instance that matches the configuration of your RI...My experience with the RI marketplace is that you shouldn’t expect to make much of a profit or score much cost savings when buying or selling. Use it to offload excess RIs for a minimal cost or to buy RIs at shorter term lengths...RIs have tiered pricing meaning that it gets cheaper the more you spend. These discounts start taking effect once you hold more than $500,000 in RIs and result in a 5% discount on both upfront and hourly RI payments at the lowest tier. They go up to 10% once you surpass $4 million in RI payments. If you hit more than $10 million, you get to contact AWS and negotiate a super secret large customer discount...RIs can also reserve capacity but at the cost of AZ flexibility - to keep both, consider purchasing capacity reservations with Regional RIs...if you are part of an organization, use consolidated billing and a central account to buy RIs for all linked accounts.

- How to Reindex One Billion Documents in One Hour at SoundCloud: in total, we have approximately 2TB RAM and 1,000 cores available to serve more than 1,000 search queries per second at peak time...we use what is canonically known as Kappa Architecture for our search indexing pipeline. The main idea here is: have an immutable data store and stream data to auxiliary stores...Concurrent bulk API calls and large bulk size...Optimal primary shards count...Async translog setting...Turned off Elasticsearch refresh...Merging of Lucene segments...Faster replication settings...Including the long tail, the primary shard indexing takes around 30 minutes for more than 720 million documents, whereas it previously took three hours...Our search latency for 95 percent of all requests dropped to roughly 90ms after rolling out the 30 primary shards configuration.

- 7 Commandments for Event-Driven Architecture: Services will drop, well, out of service. Events will be delivered out of order. And off schedule. Plan accordingly; a consumer with no knowledge of when or where an event was produced will necessarily be better isolated, more resilient, and easier to change than one tightly coupled to a producer upstream; Maintaining an append-only registry ensures that event types are clearly defined in the present–but also that any legacy events lingering in forgotten logs or data stores will still have meaning; Sharing an event registry across the entire service ecosystem saves collisions and simplifies discovery for interested downstream services; providing a unique identifier at creation time is an absolute requirement for consumers that should discard already-processed events; Without knowing how or when an event will be consumed, assume any state inside it is stale.

- Good example of sprinkling ML dust on a product to make an "it just works" auto mode that also increases battery life. Dyson's latest V11 cordless vacuum cleaner uses AI to clean: using complex algorithms to determine when you need more suction power as you clean your house...In terms of tech, the biggest change of all is Dyson’s decision to embrace sensors on a new brush bar. They relay back to the main unit, telling it what surface you are cleaning. That then changes the V11's power usage accordingly.

- Everyone wants to store data on DNA, but it may not be a slam dunk. Cost-Reducing Writing DNA Data: $56,000/TB is still an extraordinarily expensive storage medium. 10TB hard disks retail at around $300, so are nearly 2000 times cheaper...At current prices demand for bytes of hard disk is growing steadily but more slowly than the Kryder rate, so unit shipments are falling...The total market is probably less than $1B/yr...while Catalog may be able to demonstrate a significant advance in the technology of DNA storage, they will still be many orders of magnitude away from a competitive product in the archival storage market.

- SOA vs. EDA: Is Not Life Simply a Series of Events? No, life is a combination of goal directed behaviours coupled with event driven interventions. But, it's still an excellent analysis—pull vs. reactive; coupling; service availability; process modification and extension; consistency; retaining exact state transitions; streaming analytics; timing of consistency and intelligence—of the SOA/EDA dualism, which like most dualisms is a particular quantization of an as yet hidden unity.

- Brendan Gregg's home page is a honeypot of performance wisdom. A productive place to spend a rainy day.

- Good example of how to think through building an application. I can serverless, and you can too!: Throughout this exercise we haven’t mentioned scaling, load balancers or provisioning — instead, we have chosen to use massively scalable services that handle all this for us. This design can easily accommodate the traffic levels the zoo expects, and we will eventually write maybe only 1000 lines of code on the back-end to support the entire application.

- You need different maps to understand different territories. The map we need if we want to think about how global living conditions are changing.

- The Deep Space Network is a suite of different telescopes. Each facility has one 34 meter high efficiency antenna, 2 or more 34 meter wave guide antennas, some 26 meter dishes, and one 70 meter antenna (the big one). At Goldstone, the 70 meter antenna is also a radar dish that incinerates bees on ignition; it measures where stuff is in space.

- pdlfs/deltafs (article): Transient file system service featuring highly paralleled indexing on both file data and file system metadata. "We designed DeltaFS to enable the creation of trillions of files. Such a tool aids researchers in solving classical problems in high-performance computing, such as particle trajectory tracking or vortex detection."

- kubeedge/kubeedge: an open source system extending native containerized application orchestration and device management to hosts at the Edge. It is built upon Kubernetes and provides core infrastructure support for networking, application deployment and metadata synchronization between cloud and edge

- mikifi/RL-Quadcopter-2: In this project, you will design an agent to fly a quadcopter, and then train it using a reinforcement learning algorithm of your choice!

- vlang-io/V: Simple, fast, safe, compiled language for creating maintainable software. Supports translation from C/C++. V compiles 1.5 million lines of code per second per CPU core. No global state; No null; No undefined values; Option types; Generics; Immutability by default; Partially pure functions.

- Sim-to-(Multi)-Real: Transfer of Low-Level Robust Control Policies to Multiple Quadrotors. Jack Clark on why this matters: Approaches like this show how people are increasingly able to arbitrage computers for real-world (costly) data; in this case, the researchers use compute to simulate drones, extend the simulation data with synthetically generated noise data and other perturbations, and then transfer this into the real world. Further exploring this kind of transfer learning approach will give us a better sense of the ‘economics of transfer’, and may allow us to build economic models that let us describe the tradeoffs between spending $ on compute for simulated data, and collecting real-world data.

- Computer Networks: A Systems Approach: Suppose you want to build a computer network, one that has the potential to grow to global proportions and to support applications as diverse as teleconferencing, video on demand, electronic commerce, distributed computing, and digital libraries. What available technologies would serve as the underlying building blocks, and what kind of software architecture would you design to integrate these building blocks into an effective communication service? Answering this question is the overriding goal of this book—to describe the available building materials and then to show how they can be used to construct a network from the ground up.

- Security Overview of AWS Lambda: An In-Depth Look at Lambda Security. This whitepaper presents a deep dive of the AWS Lambda service through a security lens. It provides a well-rounded picture of the service, which can be useful for new adopters, as well as deepening understanding of AWS Lambda for current users. @MarcJBrooker: Yes, it can reduce latency. The majority case, though, is that MicroVM startup time is entirely hidden by caching and pooling internally. Most of startup time is the language VM and code loading (plus whatever your code does), for most uses.

- Stretch: Balancing QoS and Throughput for Colocated Server Workloads on SMT Cores: Based on this insight, we introduce Stretch, a simple ROB partitioning scheme that is invoked by system software to provide one hardware thread with a much larger ROB partition at the expense of another thread. When Stretch is enabled for latency-sensitive workloads operating below their peak load on an SMT core, co-running batch applications gain 13% of performance on average (30% max) over a baseline SMT colocation and without compromising QoS constraints

- Particle robotics based on statistical mechanics of loosely coupled components: Despite the stochastic motion of the robot and lack of direct control of its individual components, we demonstrate physical robots composed of up to two dozen particles and simulated robots with up to 100,000 particles capable of robust locomotion, object transport and phototaxis (movement towards a light stimulus). Locomotion is maintained even when 20 per cent of the particles malfunction. These findings indicate that stochastic systems may offer an alternative approach to more complex and exacting robots via large-scale robust amorphous robotic systems that exhibit deterministic behaviour.
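
One of the event-driven architecture commandments above is that a unique identifier assigned at creation time lets consumers discard already-processed events, even when delivery is out of order or repeated. Here is a minimal sketch of that idea in Python. The `Event` fields, the `IdempotentConsumer` class, and the event names are all hypothetical, made up for illustration; none of them come from the linked article. The in-memory set stands in for whatever durable store a real service would need.

```python
from dataclasses import dataclass
from typing import Callable, Set


@dataclass
class Event:
    event_id: str    # hypothetical: unique ID assigned by the producer at creation time
    event_type: str
    payload: dict


class IdempotentConsumer:
    """Wraps a handler so a successfully handled event_id is never handled again."""

    def __init__(self, handler: Callable[[Event], None]) -> None:
        self._handler = handler
        # Assumption: an in-memory set; it is lost on restart, so this is a sketch only.
        self._seen: Set[str] = set()

    def consume(self, event: Event) -> bool:
        """Return True if the event was handled, False if it was a duplicate."""
        if event.event_id in self._seen:
            return False
        self._handler(event)
        # Record the ID only after the handler returns, so a failed event can be retried.
        self._seen.add(event.event_id)
        return True


handled = []
consumer = IdempotentConsumer(lambda e: handled.append(e.event_id))

# Delivery arrives out of order and includes one redelivered event.
deliveries = [
    Event("evt-2", "track.played", {"track": 7}),
    Event("evt-1", "track.uploaded", {"track": 7}),
    Event("evt-2", "track.played", {"track": 7}),
]
results = [consumer.consume(e) for e in deliveries]
```

The consumer makes no assumption about ordering or about who produced the event; it relies only on the ID. Recording the ID after the handler runs trades a possible repeat (if the process dies between the two steps) for never silently dropping an event, so the handler itself should tolerate being run twice.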

Reader Comments