Stuff The Internet Says On Scalability For November 6th, 2020

Friday, November 6, 2020 at 9:11AM

Hey, no power outages this week, so it's finally HighScalability time!

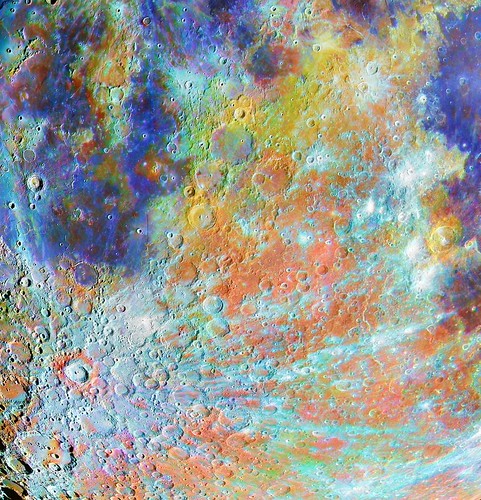

Stunning: Tycho Crater Region with Colours by Alain Paillou

Do you like this sort of Stuff? Without your support on Patreon this Stuff won't happen.

Know someone who could benefit from becoming one with the cloud? I wrote Explain the Cloud Like I'm 10 just for them. On Amazon it has 189 mostly 5-star reviews. Here's a 100% lactose-free review:

Number Stuff:

- 16.4 trillion: calls made to DynamoDB during 66 hours of Amazon's Prime Day from Alexa, Amazon.com, and all Amazon fulfillment centers. Peaked at 80.1 million requests per second. EBS handled 6.2 trillion requests per day and transferred 563 petabytes per day. CloudFront handled over 280 million HTTP requests per minute, a total of 450 billion requests across all of the Amazon.com sites.

- 20x: Microsoft's Apache Spark speed improvement by rewriting it from the ground up in C++ to take advantage of modern hardware and capitalize on data-level and CPU instruction-level parallelism.

- 12: minimum length of a good password.

- 45%: of professionals want to work remotely all of the time.

- 300 million: habitable planets in the Milky Way.

- 195+ million: Netflix subscribers.

- $10.60: per person allocation for three meals a day for everyone aboard a US submarine.

- 18: crashes for Waymo's autonomous vehicle operations in Phoenix, Arizona. 29 near-miss collisions.

- 29%: AWS revenue increase. Estimates from analysts suggest that Microsoft’s cloud is about 54% the size of AWS. Around 57% of Amazon’s operating income came from AWS in the third quarter, and Amazon derived 12% of its revenue from AWS. Amazon now has 1,125,300 employees, up 50% in one year.

- 300%: improvement in a WordPress site using Cloudflare's Automatic Platform Optimization.

- 11%: of firms that have deployed artificial intelligence are reaping a “sizable” return on their investments.

- 247 zeptoseconds: how long it takes for a photon to cross a hydrogen molecule

- $1,000: per day cost for processing 250 billion events per day, which translates to 55 terabytes (TB) of data. The system automatically scales up and down, thanks to the capabilities of serverless architectures, processing from 1TB to 6TB of data per hour.

- 1.1 million: gigabytes per second of data stored by enterprises by 2024. Data gravity warps compute.

- ~$1 billion: Silk Road bitcoin seized by authorities from Individual X.

- 18 million: requests per day to Uber's platform from over 10,000 cities.

- 11: critical bugs found in Apple's corporate network. 55 vulnerabilities found. Nobody is safe.

- 6: molecules needed to build life. So says an algorithm.

- $44.75 million: awarded in bug bounties last year by HackerOne, an 86% year-on-year increase. Nine people have earned over $1 million.
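Two of the numbers above are easier to compare once they are normalized to per-second and per-event terms. Here is a quick back-of-the-envelope check in Python that uses only the figures quoted in the list. The variable names are ours, and the pipeline math assumes decimal terabytes.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR

# DynamoDB during Prime Day: 16.4 trillion calls over 66 hours, 80.1M req/s peak.
dynamo_total = 16.4e12
dynamo_avg = dynamo_total / (66 * SECONDS_PER_HOUR)  # average requests per second
dynamo_peak = 80.1e6
peak_to_avg = dynamo_peak / dynamo_avg

# Serverless pipeline: $1,000 per day for 250 billion events and 55 TB per day.
events_per_day = 250e9
bytes_per_day = 55e12  # assumes decimal terabytes (10**12 bytes)
cost_per_day = 1_000.0
events_per_sec = events_per_day / SECONDS_PER_DAY
bytes_per_event = bytes_per_day / events_per_day
usd_per_million_events = cost_per_day / (events_per_day / 1e6)
avg_tb_per_hour = bytes_per_day / 1e12 / 24

print(f"DynamoDB: {dynamo_avg / 1e6:.1f}M req/s average, peak is {peak_to_avg:.2f}x that")
print(f"Pipeline: {events_per_sec / 1e6:.2f}M events/s, {bytes_per_event:.0f} B/event, "
      f"${usd_per_million_events:.4f} per million events, {avg_tb_per_hour:.2f} TB/hour")
```

Averaged over the full 66 hours, DynamoDB served roughly 69 million requests per second, so the 80.1 million peak sits only about 16% above the mean. The pipeline works out to about 220 bytes per event and a fraction of a cent per million events. It also averages roughly 2.3 TB per hour, which is inside the quoted 1TB to 6TB per hour range.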

Quotable Stuff:

- Ransbotham: We’re seeing that this blending of humans and machines is where companies are performing well. It’s also that these companies have different ways of combining humans and machines...The idea that either humans or machines are going to be superior, that's the same sort of fallacious thinking.

- Vinton Cerf: The internet doesn’t invent or discover anything. It’s simply the medium through which people can do collaborative work and can discover new things.

- @tef_ebooks: did you know: the first computers were built for government use? that's right, they were state machines

- Gabriel Popkin: Practically everywhere you look, nature chooses complexity over simplicity, medleys over monocultures. The world is full of species, but it’s not clear why: In any given environment, why doesn’t one species (or at most a few) gain an advantage and outcompete all the rest?

- @NoSQLKnowHow: To me the even more amazing thing is while those 80.1 million RPS being done for Prime Day are going on, every other DynamoDB customer is doing their thing too and not having performance problems.

- Kent Beck: The more perfectly a design is adapted to one set of changes, the more likely it is to be blind-sided by novel changes.

- @BrandonRich: I used to build systems out of code. Now I'm building solutions out of systems -- and data, and relationships.

- @muratdemirbas: I am happy to see that Blockchains permeated our lives, and dominate the banking, financial markets, healthcare, government, and electronics. Once again IBM was right calling this at 2017

- Charlie Demerjian: What SemiAccurate heard was what the backup plan for ARM going to Nvidia was, or at least part of it. The news comes from one major phone SoC supplier, let's call it a top 5 vendor, and at this point the plans to make RISC-V SoCs are going forward regardless of the deal being approved or not.

- Gandalf (via personal email): Perilous to us all are the devices of an art deeper than we possess ourselves.

- Stefan Jockusch: Depending on what product I throw at this factory, it will completely reshuffle itself and work differently when I come in with a very different product. It will self-organize itself to do something different.

- @Carnage4Life: The longer I’m in tech, the more I’m convinced microservices were a technical solution to an organizational problem.

- @JeffDean: Round trips have been getting cheaper and cheaper due to the exponential improvement in the speed of light, while bandwidth is very hard to improve year over year...? Oh, no, wait.

- Dan Hurley: Next, Figueroa hopes to teleport his quantum-based messages through the air, across Long Island Sound, to Yale University in Connecticut. Then he wants to go 50 miles east, using existing fiber-optic cables to connect with Long Island and Manhattan.

- @wintersweet: possibly we should stop saying “the algorithm” and start saying “the way people programmed the app”

- @theburningmonk: Launched a new app for client 3 weeks ago on AppSync, 5,000 users later, 100,000+ API calls per day, 99% cache hit rate, 0 problems, and 90% of AWS cost is on AppSync cache (T2.Small). Easily one of the most complex things I've ever built (in a few weeks). Gotta love #serverless! Can't stress how liberating it is to NOT have to worry about capacity planning, tuning auto-scaling configurations and all that crap. Built everything on my own, working part-time, in a few weeks and it just works! Everything scales itself, and only pay for what we use. Caching seems expensive right now, but is soaking up 99% of the load and it's gonna pay for itself 10 times over in a few weeks when we hit 10s of thousands users.

- vlfig: “Microservices” was a good idea taken too far and applied too bluntly. The fix isn’t just to dial back the granularity knob but instead to 1) focus on the split-join criteria as opposed to size; and 2) differentiate between the project model and the deployment model when applying them.

- Vinton Cerf: It’s one thing to get agreement on the technical design and to implement the protocols. It’s something else to get them in use where they’re needed. There’s a lot of resistance to doing something new because “new” means: “That might be risky!” and “Show me that it works!” You can’t show that unless you take the risk...That way, you can be more ambitious and take advantage of the assumption that we have an interplanetary backbone. If you start out on the presumption that you don’t, then you’ll design a mission with limited communications capability.

- Jon Hamilton: But even though time cells are critical in creating sequences, Buzsáki says, they really aren't like clocks, which tick at a steady pace. Instead, the ticks and tocks of time cells are constantly speeding up or slowing down, depending on factors like mood.

- Jon Holman: we moved from AWS ECS Fargate to AWS Lambda reducing the yearly cost from $1,730 to approximately $4.

- alexdanco: This paper presents a really satisfying idea: the fundamental essence of individuality, and the units in which individuality ought to be measured, is information. You’re dealing with an individual if you’re dealing with “the same thing” between today and tomorrow, and where that sameness isn’t just passive inertia, but is actively propagated. Individuals maximally propagate information from their past to their future. This propagation is measurable, at least in theory. Therefore, individuality ought to also be measurable.

- @Brecii: If I try to summarize the results single table design is at least 1/3 faster than multi tables design going up for more complex queries?

- Geoff Huston: If we can cost efficiently load every recursive resolver with a current copy of the root zone, and these days that’s not even a remotely challenging target, then perhaps we can put aside the issues of how to scale the root server system to serve ever greater quantities of “NO!” to ever more demanding clients!

- Ed Sperling: the whole chip industry is beginning to rethink how chips are put together. Moore’s Law will continue, but only for some digital logic by the time designs head into the 2/1nm realm. The rest of those designs will be pushed onto other chips, or chiplets.

- DSHR: The idea of imposing liability on software providers is seductive but misplaced. It would likely be both ineffective and destructive. Ineffective in that it assumes a business model that does not apply to the vast majority of devices connected to the Internet. Destructive in that it would be a massive disincentive to open source contributors, exacerbating the problem

- @Carnage4Life: 31% of Google engineers felt they were highly productive in Q2 versus 39% in Q1. Numbers still looking bad this quarter. Key challenge for companies is that it’s hard to tease apart remote work vs global pandemic vs home schooling impact on productivity.

- @benedictevans: There are close to 6bn adults on Earth, over 5bn people have a mobile phone, 4-4.5bn have a smart phone, and a bit over 1bn of those are iPhones

- Ben Evans: Hence, there are all sorts of issues with the ways that the US government has addressed Tiktok in 2020, but the most fundamental, I think, is that it has acted as though this is a one-off, rather than understanding that this is the new normal - there will be hundreds more of these. You can’t one-at-a-time this - you need a systematic, repeatable approach. You can’t ask to know the citizenship of the shareholders in every popular app - you need rules that apply to everyone.

- @peterc: Our Google Firebase spend is, amazingly, £0.01 per month for a critical business system we run here. And every card I give them results in "Transaction declined". If we get cut off over 1p I will meltdown.

- Dexter Johnson: In this latest research, the University of Washington team has been able to leverage this Wi-Fi backscatter technology to 3D geometry and create easy to print wireless devices using commodity 3D printers. To achieve this, the researchers have built non-electronic and printable analogues for each of these electronic components using plastic filaments and integrated them into a single computational design.

- Twirrim: It's not really surprising. From what I've heard from former co-workers there [AWS], there's a big push on all services to evaluate and ideally migrate to ARM by default for their control plane services. That says quite a bit about the savings Amazon is seeing from them, given how notoriously conservative they are over tech (I know they produce bleeding edge stuff, but internal tech approaches are super conservative, with good reason)

- @benbjohnson: My rule of thumb is that you’ll probably spend at least $1M to move to Kubernetes. That’s new ops hires, training, initial productivity loss, etc. If you don’t have a $1M problem that Kubernetes is solving then I don’t think you should even consider it.

- @keachhagey: Craziest stat in DOJ's Google suit: Google's payments to Apple to be default search amount to 15-20% of Apple's global profits.

- Animats: Over a decade ago I used to argue this with the C++ committee people. Back then, they were not concerned about memory safety; they were off trying to do too much with templates. The C++ people didn't get serious about safety until Rust came along and started looking like a threat. Now they're trying to kludge Rust features into C++ by papering over the unsafe stuff with templates, with some success. But the rot underneath always comes through the wallpaper. Too many things still need raw pointers as input.

- Backblaze: The Q3 2020 annualized failure rate (AFR) of 0.89% is slightly higher than last quarter at 0.81%, but significantly lower than the 2.07% from a year ago. Even with the lower drive failure rates, our data center techs are not bored. In this quarter they added nearly 11,000 new drives totaling over 150PB of storage, all while operating under strict Covid-19 protocols.

- @tomgara: "Bitcoin and Ethereum are now using up the same amount of electricity as the whole of Austria. Carrying out a payment with Visa requires about 0.002 kilowatt-hours; the same payment with bitcoin uses up 906 kWh, more than half a million times as much"

- @jeremy_daly: Yup. Had to build a lightweight auth system for a consumer site with over 500,000 users. For SaaS, I think Cognito/Auth0 are no-brainers, but once you get out of the free tier, it gets pricey very quickly.

- Evan Jones: Fewer system calls are faster (~5% faster). Overwriting is faster than appending (~2-100% faster): Appending involves additional metadata updates, even after an fallocate system call, but the size of the effect varies. My recommendation: for best performance, call fallocate() to pre-allocate the space you need, then explicitly zero-fill it and fsync.

- eric_h: > quibi doesn't have anything share-driven. and that right there is why quibi failed - the only quibi clip I've seen was taken by someone using another phone to record it and post it to twitter. By not allowing viral hits to be easily shared on any platform they shot themselves in the foot.

- @tmclaughbos: What if, and hear me out, instead of building a brand new under documented abstraction over a well documented library you just extended the documentation with examples for that library?

- @HammadH4: 1. Don't get into a space that you don't know of. Solve a problem in a domain you are familiar with so you know what value you provide and who the target customers are. 2. Make sure you build for the right type of people. In other words, choose your customers carefully because this matters more than you think. 3. Don't build nice to have products. You need to deliver solid business value to build a long term sustainable business. 4. Do not start building without research. 5. Don't start a SaaS business *just* for making money. 6. Don't build a business that "seems" like a good business. 7. Don't think of the product first, think of the market. 8. Spend more time marketing, less time building. 9. Measure the right metrics. 10. When building the product get the basics right and keep the tech simple.

- ajuc: There's 2 approaches to programming: - make it easy to extend (let's call it SOLID in this context) - make it easy to rewrite (let's call it KISS). OO developers think that the first approach is The Only Way but both can be right, especially with high-level declarative languages and with tasks small enough (relative to the language expressive power). SOLID makes it easier to modify programs (in predetermined directions) without understanding the whole. KISS makes it easier to understand the whole program. SOLID accumulates inefficiencies over time because people program with tunnel vision. KISS takes longer to modify as it grows and eventually exceeds the capacity of human brain. SOLID is mostly OO, KISS is mostly declarative.

- Iain Banks: The easiest way to explain Contact is to say that it operates on exactly the opposite principle of the Star Trek Federation’s “Prime Directive.” The latter prohibits any interference in the affairs of “pre-Warp” civilizations, which is to say, technologically underdeveloped worlds. The Culture, by contrast, is governed by the opposite principle; it tries to interfere as widely and fulsomely as possible. The primary function of its Contact branch is to subtly (or not-so subtly) shape the development of all civilizations, in order to ensure that the “good guys” win.

- Charles Fan: Our expectation is that all applications will live in memory. Today, because of the limitations on the DRAM side and on the storage side, most applications need both to have mission critical reliability. But we believe because of new and future developments in hardware and software over the next ten years, we think most of the applications will be in-memory. I think that is a bold statement, but we think it is a true statement as well.

- bluetidepro: the biggest downfall of IFTTT was how many services started locking them out of useful hooks. Early on, I felt like I could do anything in IFTTT with any platform that was on there. There were so many hooks available as triggers, but as time went on and services removed many useful hooks, it lost a lot of value. Spotify is one that comes to mind, originally there was so many triggers on Spotify that were useful, but now there is only like 2 boring triggers. It really killed the IFTTT platform when there was less options from so many of the services. They went from having less services, but a very deep amount of triggers/hooks to hundreds of services with very little depth. From a deep pond to a wide shallow ocean.

- politelemon: The ICO is as toothless as our nation. They had initially announced a fine against British Airways of 183M, and reduced it to about 20M. Marriott's original was 99M, now reduced to 18.4M. Cathay Pacific was fined... 500K. In most cases, each fine has been announced as a large amount (presumably for publicity) after which it goes through appeals, tribunals, some private meetings and a substantially reduced fine.

- @muratdemirbas: So much of what I've enjoyed about Rust is how much more productive it has made me. That time, which would otherwise be spent hitting my head against frustrating bugs, has instead been applied to new features, new algorithms, and progress generally.

- koeng: I've been in synthetic biology / DIYbio for the last ~8 years, and recently worked 3 years in Drew Endy's lab and got a decent perspective on how things went down throughout the years. If there is something the computer hackers should know about synthetic biology, it's that it is in its infancy (ie, there are pretty few players who are leading all those projects listed). It is really early. Joining open source software projects to help synthetic biologists will non-trivially affect the future of the field (they don't know how to program) If I had to predict what is coming in the next 10 years, I'd say that improvements in biotech networks are going to enable cool stuff. The BioBricks Foundation's Bionet Project (worked on that a little) was a good foray into this idea. Basically, networks of materials and software are going to cause the price to participate to start dropping into early home-computer hobbyist realm...One thing I have to note though - the field is fundamentally different than software engineering. In software, it isn't unusual for someone to maintain a software package for a long time, and for other people to contribute. In biology, typically software projects are made by individuals (only supported through their graduate school) and are never contributed to by outsiders (most don't know how Git development and PRs work). There are lots of projects in the normal bio category, but probably aren't useful.

- William Gallagher: Terry Guo, then chairman of Foxconn, reportedly told Apple that his firm's assembly lines would contain one million robots within two years. Seven years on, in 2019, Foxconn was using just 100,000 robots across all of its manufacturing. Neither Foxconn nor Apple would comment publicly about why the automation was so much lower than predicted, but according to The Information, sources say it's down to dissatisfaction by Apple.

- @QuinnyPig: "Cloud is a mixed bag; we're happy with performance. Travel and hospitality are down, entertainment is up. A majority of companies are looking to cut down on expenses, and moving to the cloud is a great way to do that long term" lies AWS. "In the short term we're helping tune."

- rufius: I actually worked in an org that took the Tick/Tock model and applied it to rewrites. As a big monolith got too unwieldy, we’d refactor major pieces out into another service. Over time, we’d keep adding a piece of functionality into the new service or rarely, create another service. The idea wasn’t to proliferate a million services to monitor but rather to use it as an opportunity to stand up a new Citadel service that encapsulated some related functionality. It’s worked well but it requires planning and discipline.

- 8fingerlouie: We looked very hard at microservices some 5-6 years ago, and estimated we'd need ~40000 of them to replace our monoliths, and given a scale like that, who'd keep track of what does what ? Instead we opted for "macroservices". It's still services, they're still a lot smaller units than our monoliths, but they focus on business level "units of work".

- J. Pearl: But while all that was going on, Galileo also managed to quietly engineer the most profound revolution that science has ever known. This revolution, expounded in his 1638 book “Discorsi” [12], published in Leyden, far from Rome, consists of two maxims: One, description first, explanation second – that is, the “how” precedes the “why”; and Two, description is carried out in the language of mathematics; namely, equations. Ask not, said Galileo, whether an object falls because it is pulled from below or pushed from above. Ask how well you can predict the time it takes for the object to travel a certain distance, and how that time will vary from object to object and as the angle of the track changes.

- titzer: Unfortunately security and efficiency are at odds here. We faced a similar dilemma in designing the caching for compiled Wasm modules in the V8 engine. In theory, it would be great to just have one cached copy of the compiled machine code of a wasm module from the wild web. But in addition to the information leak from cold/warm caches, there is the possibility that one site could exploit a bug in the engine to inject vulnerable code (or even potentially malicious miscompiled native code) into one or more other sites. Because of this, wasm module caching is tied to the HTTP cache in Chrome in the same way, so it suffers the double-key problem.

- candiddevmike: In my opinion, microservices are all the rage because they're an easily digestible way for doing rewrites. Everyone hates their legacy monolith written in Java, .NET, Ruby, Python, or PHP, and wants to rewrite it in whatever flavor of the month it is. They get buy in by saying it'll be an incremental rewrite using microservices. Fast forward to six months or a year later, the monolith is still around, features are piling up, 20 microservices have been released, and no one has a flipping clue what does what, what to work on or who to blame. The person who originally sold the microservice concept has left the company for greener pastures ("I architected and deployed microservices at my last job!"), and everyone else is floating their resumes under the crushing weight of staying the course. Proceed with caution.
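Evan Jones's recommendation in the quotes above is to pre-allocate with fallocate, explicitly zero-fill, and fsync, and then overwrite in place rather than append. That recipe can be sketched with Python's `os` module. This is a minimal illustration, not his benchmark code. It assumes a Linux or other Unix host, and the function names are hypothetical. Because `os.posix_fallocate` and `os.fdatasync` may not be available on every platform, the sketch checks for them and falls back.

```python
import os

def _pwrite_all(fd: int, data: bytes, offset: int) -> None:
    """pwrite() may write fewer bytes than requested; loop until all are written."""
    view = memoryview(data)
    while view:
        n = os.pwrite(fd, view, offset)
        view = view[n:]
        offset += n

def preallocate_zeroed(path: str, size: int, chunk: int = 1 << 20) -> None:
    """Reserve `size` bytes, explicitly zero-fill them, then fsync once."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        if size > 0 and hasattr(os, "posix_fallocate"):
            # Reserve the blocks up front (wraps posix_fallocate(3)).
            os.posix_fallocate(fd, 0, size)
        # fallocate can leave "unwritten" extents (e.g. on ext4) whose first write
        # still updates metadata; writing real zeros now moves that cost up front.
        zeros = bytes(chunk)
        for offset in range(0, size, chunk):
            _pwrite_all(fd, zeros[: min(chunk, size - offset)], offset)
        os.fsync(fd)  # persist the zeroed data and the file-size metadata
    finally:
        os.close(fd)

def overwrite_record(path: str, offset: int, record: bytes) -> None:
    """Overwrite bytes inside the preallocated region and make them durable."""
    fd = os.open(path, os.O_RDWR)
    try:
        _pwrite_all(fd, record, offset)
        # fdatasync skips metadata that is not needed to read the data back;
        # fall back to fsync where fdatasync is unavailable.
        getattr(os, "fdatasync", os.fsync)(fd)
    finally:
        os.close(fd)
```

The zero-fill is paid once, up front. Each later `overwrite_record` call then writes into space that already exists and has already been written, instead of growing the file.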

Useful Stuff:

- Open sourcing a project is hard; don't do it unless you're ready to do the work. Maintaining the massive success of Envoy. Matt Klein, creator of Envoy, shares some surprisingly interesting and useful lessons about his experience at Lyft open sourcing Envoy. The whole world uses Envoy now. How did they do it?

- Envoy is a software proxy. It was started over 5 years ago to help Lyft move to microservices. When you adopt microservices, there is a set of common problems that go with it. Matt has worked at Twitter and AWS, so he had experience with microservices and with solving those types of problems.

- Envoy started as an edge proxy acting as an API gateway. It replaced load balancers so they could get better observability, better load balancing, better access logs, and a better understanding of how traffic was flowing in the microservice architecture. Then they implemented rate limiting and MongoDB protocol parsing, which was the beginning of Envoy as a service mesh. They ran it at the edge and for their monolith. The rest is incremental history. Soon everything at Lyft ran through Envoy.

- The biggest technical difference between Envoy and something like NGINX, at the time, was that Envoy was built to handle elastic cloud-native loads: autoscaling, containers, and failures. It used an eventually consistent configuration system and a set of APIs to dynamically fetch things like router configuration. All of its config could be changed on the fly without a reload. That's historically a big difference. It was also built from the ground up to be extensible. It has filter plugins, metrics plugins, stats plugins, tracing plugins, etc.

- One reason Envoy was so successful is that it was created by an end user for an explicit use case. By the time it was open sourced it was feature rich, rock solid, reliable, etc.

- In hindsight they were naive about the effort it would take to open source Envoy. It takes a lot of work to create a successful open source project. Matt came close to complete and total burnout working what was essentially two jobs: his Lyft job and Envoy.

- The success of Envoy has made Lyft far less likely to open source projects because of the huge time commitment it takes.

- Most open source projects are a net negative. You'll put out more effort than you will be returned in community development. Very few projects become successful enough to justify the effort.

- Starting a successful open source project requires the same effort as starting an actual company. It requires engineering, PR, marketing, hiring maintainers and contributors, blog posts, build tooling, documentation, talking at conferences, talking at meetups: all the things that make something successful.

- Google's adoption helped a lot because they didn't just contribute code, they created hype by talking at conferences.

- VCs said Envoy would never become a success unless a company was started around it. Matt didn't think that was true, and he was right. Not being vendor driven, and being community first and technology first, was a big reason the project was successful. Nobody had to worry that features would be denied because they would conflict with a paid version.

- Matt chose a career path biased toward impact and solving problems rather than toward money. It's satisfying to see how Envoy has succeeded in the industry.

- When you are going to open source something, have open and honest conversations with your employer about the effort required. How much time should you spend on this, especially given that the effort likely won't pay off? Have an honest business conversation to decide if open sourcing is worth the effort.

- Building community is critical. Matt put a lot of effort into making sure the project communication style was welcoming. He's most proud of the community around Envoy. It's not toxic or mean. People love contributing to Envoy.

- Also Introducing NGINX Service Mesh
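Envoy's ability to change config on the fly without a reload is easiest to see in miniature. The sketch below is not Envoy's actual xDS API. It is a hypothetical Python illustration that assumes a control plane pushing versioned route tables. The proxy swaps each complete table in with a single reference assignment, and lookups keep using whichever snapshot they started with. All class and method names are made up for the example.

```python
import threading
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping, Optional

@dataclass(frozen=True)
class RouteSnapshot:
    version: int
    routes: Mapping[str, str]  # path prefix -> upstream cluster name

class DynamicRouter:
    """Holds an immutable route snapshot that can be replaced without a restart."""

    def __init__(self) -> None:
        self._snapshot = RouteSnapshot(0, MappingProxyType({}))
        self._lock = threading.Lock()  # serializes writers only; readers take no lock

    def apply_update(self, version: int, routes: dict) -> bool:
        """Install a pushed route table unless it is older than the current one."""
        with self._lock:
            if version <= self._snapshot.version:
                return False  # stale or duplicate push: ignore it
            # Build the new snapshot fully, then publish it with one assignment
            # (a single reference swap, atomic in CPython).
            self._snapshot = RouteSnapshot(version, MappingProxyType(dict(routes)))
            return True

    def route(self, path: str) -> Optional[str]:
        snapshot = self._snapshot  # read the reference once; the snapshot never mutates
        best = None
        for prefix, cluster in snapshot.routes.items():
            # Longest matching prefix wins.
            if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
                best = (prefix, cluster)
        return best[1] if best else None
```

A reader never observes a half-applied route table, because every update replaces the whole snapshot. Convergence is eventual: each proxy catches up whenever a newer version reaches it.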

- Organize around cells, not services. Set business goals, not technology choices. Robinhood’s Kubernetes Journey: A Path More Treacherous Than it Appears.

- Two years and the migration still isn't complete. The mistake they made was saying they wanted k8s.

- What they really wanted were faster releases and immutable infrastructure so it's faster and easier to release a new build with a fix than it is to go back and change anything. They didn't say that though. They said they wanted k8s.

- Don't frame goals in terms of technology. Each team will interpret goals differently. Teams will end up serving themselves rather than the business. Prepackaged solutions tend not to work.

- You need an abstraction that's customized for your specific business. Framing better goals is key.

- They are rediscovering cell architectures. You don't need to scale services independently. Functionality is now organized around accounts, not individual service slices, so a cell can include all the services for its accounts, and those services won't have to talk to other shards.

- Account based shards are horizontally scalable, which is exactly what you want in an architecture. This limits the number of inter-service connections and the number of hosts that need to talk to each other.

- Under hyper-growth it's the second-order effects that get you. You can almost always figure out a way to scale something by adding more machines or tuning a database. What you don't think of is that when you add those machines, they'll all go access your service location system. Or they all need to talk to Kafka. It's these connections that ultimately cause problems.

- Cell architectures increase fault isolation and reduce scaling pain points.

- Yes, you do need to know every customer and trade, but those queries can be handled in a different way.

- What's important is cell architectures create a direction for the team. It structures every conversation between teams. It aligns things in a very natural way.
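Here is a minimal sketch of account-based cell routing, assuming a fixed number of cells and a stable hash of the account ID. The class name, the cell count, and the `pin` override are hypothetical, not Robinhood's design. Every request for a given account lands in the same cell, so that cell's services only need to talk to each other.

```python
import hashlib
from typing import Dict

class CellRouter:
    """Maps each account to exactly one cell; overrides allow moving single accounts."""

    def __init__(self, num_cells: int) -> None:
        if num_cells < 1:
            raise ValueError("need at least one cell")
        self.num_cells = num_cells
        self.overrides: Dict[str, int] = {}  # account_id -> pinned cell (hypothetical)

    def cell_for(self, account_id: str) -> int:
        if account_id in self.overrides:
            return self.overrides[account_id]
        # Use a stable hash: Python's built-in hash() of a str is randomized per process.
        digest = hashlib.sha256(account_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % self.num_cells

    def pin(self, account_id: str, cell: int) -> None:
        if not 0 <= cell < self.num_cells:
            raise ValueError("no such cell")
        self.overrides[account_id] = cell
```

Hashing with a modulus keeps routing stateless, but changing `num_cells` would remap most accounts. An explicit lookup table, of which `pin` is a tiny version, is the more flexible alternative when accounts need to move between cells.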

- Lessons from TiDB's No. 1 Bug Hunters Who've Found 400+ Bugs in Popular DBMSs

- More than 150 bugs: Non-optimizing Reference Engine Construction (NoREC). NoREC is a simple, but also a non-obvious approach to finding specifically optimization bugs. So the idea that we had was that rather than relying on the DBMS, we could rewrite the query so that the DBMS cannot optimize it, and thus be able to find optimization bugs.

- ~100 bugs: Pivoted Query Synthesis (PQS), which is a more powerful technique, but also more elaborate. Generate a query for which it is ensured that a randomly selected row, the pivot row, is fetched.

- More than 150 bugs: Ternary Logic Query Partitioning (TLP) is work-in-progress, and this is the approach that we have actually used to find the bugs that we reported for TiDB. Partition the query into several partitioning queries, which are then composed.
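Two of these test oracles are small enough to sketch against SQLite using Python's built-in `sqlite3` module. This is a simplified illustration of the ideas described above, not the researchers' tool. The table, the predicate generator, and the helper names are hypothetical. NoREC compares the count from an optimizable `WHERE` query with a count where the same predicate is evaluated per row in the `SELECT` list (written with `CASE WHEN` here), which the optimizer cannot use to skip rows. TLP checks that the rows where `p` is true, false, and NULL together reproduce the unfiltered result.

```python
import random
import sqlite3
from collections import Counter

def norec_counts(conn, predicate):
    """Return (optimized, unoptimized) row counts; a mismatch signals a bug."""
    optimized = conn.execute(f"SELECT COUNT(*) FROM t0 WHERE {predicate}").fetchone()[0]
    # Evaluated per row in the projection, so an index cannot be used to filter.
    unoptimized = conn.execute(
        f"SELECT COALESCE(SUM(CASE WHEN {predicate} THEN 1 ELSE 0 END), 0) FROM t0"
    ).fetchone()[0]
    return optimized, unoptimized

def tlp_holds(conn, predicate):
    """Ternary logic partitioning: p, NOT p and p IS NULL must add up to the whole."""
    whole = Counter(conn.execute("SELECT c0 FROM t0").fetchall())
    parts = Counter()
    for cond in (f"({predicate})", f"NOT ({predicate})", f"({predicate}) IS NULL"):
        parts.update(conn.execute(f"SELECT c0 FROM t0 WHERE {cond}").fetchall())
    return whole == parts

def random_predicate(rng, depth=2):
    """Hypothetical tiny generator of boolean expressions over column c0."""
    if depth == 0 or rng.random() < 0.4:
        op = rng.choice(["=", "<", ">", "<=", ">=", "<>"])
        return f"c0 {op} {rng.randint(-3, 3)}"
    if rng.random() < 0.3:
        return f"NOT ({random_predicate(rng, depth - 1)})"
    joiner = rng.choice(["AND", "OR"])
    return f"({random_predicate(rng, depth - 1)}) {joiner} ({random_predicate(rng, depth - 1)})"

def fuzz(seed=0, rounds=200):
    """Run both oracles on random predicates; returns predicates that failed."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t0 (c0 INTEGER)")
    conn.execute("CREATE INDEX i0 ON t0 (c0)")  # give the optimizer something to use
    conn.executemany("INSERT INTO t0 VALUES (?)", [(v,) for v in (-2, 0, 0, 1, 3, None)])
    rng = random.Random(seed)
    failures = []
    for _ in range(rounds):
        p = random_predicate(rng)
        optimized, unoptimized = norec_counts(conn, p)
        if optimized != unoptimized or not tlp_holds(conn, p):
            failures.append(p)
    return failures
```

Against a correct engine `fuzz()` returns an empty list. A non-empty result is a candidate bug report, which would still need to be reduced and confirmed by hand.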

- Twitter has a strong cache position. A large scale analysis of hundreds of in-memory cache clusters at Twitter.

- We collected around 700 billion requests (80 TB in rawfile size) from 306 instances of 153 Twemcache clusters, which include all clusters with per-instance request rate more than 1000 queries-per-sec (QPS)

- a cache with a low miss ratio most of the time, but sometimes a high miss ratio is less useful than a cache with a slightly higher but stable miss ratio. We observe that most caches have this ratio lower than 1.5. In addition, the caches that have larger ratios usually have a very low miss ratio

- when the request rate (top blue curve) spikes, the number of objects accessed in the same time interval (bottom red curve) also has a spike, indicating that the spikes are triggered by factors other than hot keys. Such factors include client retry requests, external traffic surges, scan-like accesses, and periodic tasks.

- More than 35% of all Twemcache clusters are write-heavy, and more than 20% have a write ratio higher than 50%.

- Having the majority of caches use short TTLs indicates that the effective working set size (WSS_E), the size of all unexpired objects, should be loosely bounded. In contrast, the total working set size (WSS_T), the size of all active objects regardless of TTL, can be unbounded.

- Measuring all Twemcache workloads, we observe that the majority of the cache workloads still follow a Zipfian distribution. However, some workloads show deviations in two ways. First, unpopular objects appear significantly less than expected (Figure 8a) or the most popular objects are less popular than expected.

- the mean value size falls in the range from 10 bytes to 10 KB, and 25% of workloads show value size smaller than 100 bytes, and median is around 230 bytes

- As real-time stream processing becomes more popular, we envision there will be more caches being provisioned for caching computation results. Because the characteristics are different from caching for storage, they may not benefit equally from optimizations that only aim to make the read path fast and scalable, such as optimistic cuckoo hashing

- Background crawler: Another approach for proactive expiration, which is employed in Memcached, is to use a background crawler that proactively removes expired objects by scanning all stored objects. Using a background crawler is effective when TTLs used in the cache do not have a broad range. While scanning is effective, it is not efficient.

- Our work shows that the object popularity of in-memory caching can be far more skewed than previously shown

- for a significant number of workloads, FIFO has similar or lower miss ratio performance as LRU for in-memory caching workloads.
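To make the FIFO-versus-LRU comparison concrete, here is a toy miss-ratio simulator. It is a minimal sketch, assuming capacity is counted in objects rather than bytes and ignoring TTLs; the trace is invented for illustration and is not Twitter data.

```python
from collections import OrderedDict

def miss_ratio(trace, capacity, policy):
    """Simulate a cache holding `capacity` objects under 'fifo' or 'lru' eviction."""
    cache = OrderedDict()
    misses = 0
    for key in trace:
        if key in cache:
            if policy == "lru":
                # LRU promotes the key on every hit; FIFO leaves insertion order alone.
                cache.move_to_end(key)
            continue
        misses += 1
        if len(cache) >= capacity:
            # Evict the front: oldest insertion (FIFO) or least recently used (LRU).
            cache.popitem(last=False)
        cache[key] = True
    return misses / len(trace)

trace = ["a", "b", "a", "c", "a", "d", "a"]
print(miss_ratio(trace, 2, "lru"), miss_ratio(trace, 2, "fifo"))
```

On this tiny hand-made trace LRU wins (4 misses out of 7 versus 5 out of 7), because the hot key `a` keeps getting promoted. The paper's finding is about real production traces, where FIFO often comes close to or beats LRU while avoiding the per-hit bookkeeping.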

- Graviton has gravitas.

- Amazon: Graviton2 instances provide up to 35% performance improvement and up to 52% price-performance improvement for RDS open source databases

- mcqueenjordan: These graviton2 instances are no joke. As soon as they released, it probably became the new lowest hanging fruit for many people (from a cost efficiency standpoint). The increased L1 cache has an incredible effect on most workloads.

- foobiekr: Most multithreaded software has concurrency bugs and many of those bugs have been covered up for years by the relatively friendlier x86 memory model vs weaker models like ARM. It is not surprising at all that supported products are going to be slow to support other architectures.

- new23d: We have. We are an egress firewall company and have been testing our product on Graviton2, privately, for some time now. The ARM builds will be GA in a couple of months but so far we've concluded that: 1. These offer an excellent price to performance ratio for an egress firewall like purpose because of low instance pricing and high network throughput.

- 0x202020: We’ve been testing a few Graviton instances since before GA and have gone “all in” with our latest release targeted for EOY.

- stingraycharles: It’s a fairly boring use case, but at QuasarDB we also target ARM (some of our customers run our database on embedded devices), and AWS’ graviton processors are a godsend for CI: we can just use on-demand EC2 instances as we do with all the other architectures and OS’es.

- @copyconstruct: A mere skim through some of these benchmarks/cost savings of workloads on AWS Graviton2 makes me think “ARM in the data center” is shaping up to be the biggest tech trend of 2020.

- @lizthegrey: This is what happens when you move from Intel to #Graviton2.

- Load shedding is a favorite strategy for dealing with too much load. The idea is to stop doing certain tasks while load is high. In Keeping Netflix Reliable Using Prioritized Load Shedding Netflix describes their progressive load shedding strategies.

- Consistently prioritize requests across device types (Mobile, Browser, and TV)

- Progressively throttle requests based on priority

- Validate assumptions by using Chaos Testing (deliberate fault injection) for requests of specific priorities

- Requests were characterized by: throughput, functionality, and criticality.

- Requests are categorized as: NON_CRITICAL, DEGRADED_EXPERIENCE, CRITICAL.

- Using attributes of the request, the API gateway service (Zuul) categorizes the requests into NON_CRITICAL, DEGRADED_EXPERIENCE and CRITICAL buckets, and computes a priority score between 1 and 100 for each request given its individual characteristics.

- In one scenario that previously left users unable to play a video, they were able to shed load so that playback was not affected. And who doesn't want that?
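A minimal sketch of progressive, priority-based shedding follows. The post does not publish Zuul's scoring function, so everything here is a hypothetical assumption: lower scores mean more important requests, each bucket maps to a fixed score range, and the gateway rejects every request scoring above a cutoff that falls as utilization rises.

```python
from enum import Enum

class Bucket(Enum):
    CRITICAL = 1
    DEGRADED_EXPERIENCE = 2
    NON_CRITICAL = 3

# Hypothetical score ranges; assumption: lower score = more important.
SCORE_RANGES = {
    Bucket.CRITICAL: (1, 33),
    Bucket.DEGRADED_EXPERIENCE: (34, 66),
    Bucket.NON_CRITICAL: (67, 100),
}

def priority_score(bucket, offset):
    """Place a request inside its bucket's range; `offset` in [0.0, 1.0] encodes finer traits."""
    low, high = SCORE_RANGES[bucket]
    return low + round(offset * (high - low))

def shed_threshold(utilization, start=0.6, full=1.0):
    """Return the highest score still admitted.

    Up to `start` utilization nothing is shed (cutoff 100). Between `start` and
    `full` the cutoff falls linearly toward 1, so the least important requests
    are throttled first and CRITICAL traffic last.
    """
    if utilization <= start:
        return 100
    frac = min((utilization - start) / (full - start), 1.0)
    return max(1, round(100 - frac * 99))

def admit(score, utilization):
    return score <= shed_threshold(utilization)

# At 95% utilization a top-priority request still gets in; a NON_CRITICAL one is shed.
print(admit(priority_score(Bucket.CRITICAL, 0.0), 0.95),
      admit(priority_score(Bucket.NON_CRITICAL, 0.0), 0.95))
```

A linear ramp is only one choice; the important property is that the cutoff moves monotonically, so shedding is progressive rather than all-or-nothing.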

- NAND gates are all you need to build a computer. See how with The Nand Game!: You are going to build a simple computer. You start with a single component, the nand gate. Using this as the fundamental building block, you will build all other components necessary.

- There is a benefit to having centralized software repositories that can be continuously analyzed, studied, and vetted. GitLab's security trends report – our latest look at what's most vulnerable: Improper input validation; Out of bounds write of intended buffer; Uncontrolled resource consumption.

- Polling FTW! Avoiding overload in distributed systems by putting the smaller service in control:

- Within AWS, a common pattern is to split the system into services that are responsible for executing customer requests (the data plane), and services that are responsible for managing and vending customer configuration (the control plane). In many of these architectures the larger data plane fleet calls the smaller control plane fleet, but I also want to share the success we’ve had at Amazon when we put the smaller fleet in control.

- When building such architectures at Amazon, one of the decisions we carefully consider is the direction of API calls. Should the larger data plane fleet call the control plane fleet? Or should the smaller control plane fleet call the data plane fleet? For many systems, having the data plane fleet call the control plane tends to be the simpler of the two approaches. In such systems, the control plane exposes APIs that can be used to retrieve configuration updates and to push operational state. The data plane servers can then call those APIs either periodically or on demand. Each data plane server initiates the API request, so the control plane does not need to keep track of the data plane fleet.

- To provide fault tolerance and horizontal scalability, control plane servers are placed behind a load balancer like Application Load Balancer. The simplicity of this architecture gives it inherent availability advantages. However, at Amazon we have also learned that when the scale of the data plane fleet exceeds the scale of the control plane fleet by a factor of 100 or more, this type of distributed system requires careful fine-tuning to avoid the risk of overload. In the steady state, the larger data plane fleet periodically calls control plane APIs, with requests from individual servers arriving at uncorrelated times. The load on the control plane is evenly distributed, and the system hums along.

- By examining a number of these situations, we’ve observed that what they have in common is a small change in the environment that causes the clients to act in a correlated manner and start making concurrent requests at the same time. Without careful fine-tuning, this shift in behavior can overwhelm the smaller control plane.

- The biggest challenge with this architecture is scale mismatch. The control plane fleet is badly outnumbered by the data plane fleet. At AWS, we looked to our storage services for help. When it comes to serving content at scale, the service we commonly use at Amazon is Amazon Simple Storage Service (S3). Instead of exposing APIs directly to the data plane, the control plane can periodically write updated configuration into an Amazon S3 bucket. Data plane servers then poll this bucket for updated configuration and cache it locally. Similarly, to stay up to date on the data plane’s operational state, the control plane can poll an Amazon S3 bucket into which data plane servers periodically write that information.

- Because of these advantages, this architecture is a popular choice at Amazon. An example of a system using this architecture is AWS Hyperplane, the internal Amazon network function virtualization system behind AWS services and resources like Network Load Balancer, NAT Gateway, and AWS PrivateLink.
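The pull model can be sketched without any AWS SDK. Below, `ConfigStore` is a hypothetical in-memory stand-in for the S3 bucket (a real system would do conditional GETs against an object). The control plane publishes versioned config, and each data-plane server polls on a jittered interval and caches the result locally. Jitter is an assumption added here as one common way to keep pollers from drifting into the correlated bursts the article warns about.

```python
import random

class ConfigStore:
    """Hypothetical stand-in for an S3 object holding (version, config)."""
    def __init__(self):
        self.version = 0
        self.config = {}

    def put(self, config):
        # The control plane publishes a new config and bumps the version.
        self.version += 1
        self.config = dict(config)

    def get_if_newer(self, known_version):
        # Analogous to a conditional GET: nothing comes back if unchanged.
        if self.version == known_version:
            return None
        return self.version, dict(self.config)

class DataPlaneServer:
    def __init__(self, store, base_interval=30.0, jitter=0.5, rng=None):
        self.store = store
        self.base_interval = base_interval
        self.jitter = jitter
        self.rng = rng or random.Random()
        self.version = 0
        self.cached = {}  # last good config, kept even if the store becomes unreachable

    def next_poll_delay(self):
        # Spread polls over [base*(1-jitter), base*(1+jitter)] to keep servers uncorrelated.
        return self.base_interval * (1 + self.rng.uniform(-self.jitter, self.jitter))

    def poll(self):
        result = self.store.get_if_newer(self.version)
        if result is not None:
            self.version, self.cached = result
        return self.cached

store = ConfigStore()
server = DataPlaneServer(store, rng=random.Random(1))
store.put({"rate_limit": 10})
print(server.poll(), round(server.next_poll_delay(), 1))
```

Note that the control plane never learns how many servers exist: its work is one write per config change, no matter how large the data plane fleet grows.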

- MongoDB Trends Report 2020:

- SQL databases are being increasingly abandoned by developers.

- NoSQL databases are being moved to the cloud faster than their SQL counterparts.

- GDPR concerns lead Europe (EMEA) to fall behind in cloud adoption.

- Looks like branded silicon is the future. Apple is moving to their own processors, as is Amazon. That's what happens when the magic becomes mundane. AWS makes its own Arm CPUs the default for ElastiCache in-memory data store service: AWS says ElastiCache now supports Graviton2-powered M6g and R6g instance types and that they will deliver “up to a 45 per cent price/performance improvement over previous generation instances.”

- What garbage. How Rust Lets Us Monitor 30k API calls/min

- We hosted everything on AWS Fargate, a serverless management solution for Elastic Container Service (ECS), and set it to autoscale after 4000 req/min. Everything was great! Then, the invoice came.

- The updated service now passed the log data through Kinesis and into S3, which triggers the worker to take over with the processing task. We rolled the change out and everything was back to normal... or so we thought. Soon after, we started to notice some anomalies on our monitoring dashboard. We were Garbage Collecting, a lot.

- Rust isn't a garbage-collected language. Instead, it relies on a concept called ownership.

- Over the course of these experiments, rather than directly replacing the original Node.js service with Rust, we restructured much of the architecture surrounding log ingestion. The core of the new service is an Envoy proxy with the Rust application as a sidecar.

- What we found was that with fewer—and smaller—servers, we are able to process even more data without any of the earlier issues.

- With the Rust service implementation, the latency dropped to below 90ms, even at its highest peak, keeping the average response time below 40ms.

- Open source musings. When using open source, are you ethically required to track down and fix bugs? Or do you just switch to a different system? DoorDash went with Eliminating Task Processing Outages by Replacing RabbitMQ with Apache Kafka Without Downtime. That generated an interesting discussion on HN about the rights and responsibilities of open source users. Apreche: What I find really interesting is their decision-making process. At least, the way the article is written suggests they thought of five possible solutions. However, they decided to go all-in on a single solution without actually doing any significant investigation or actually trying the others.

- Is the complexity of QUIC worth it? Maybe, if you change your app enough. This is definitely a "you can no longer fix your own car" moment for HTTP. I remember once whipping up my own HTTP/1 server that worked pretty well. No chance of that these days. You have to take it to the dealer. The Facebook app:

- Today, more than 75 percent of our internet traffic uses QUIC and HTTP/3 (we refer to QUIC and HTTP/3 together as QUIC).

- Our tests have shown that QUIC offers improvements on several metrics. People on Facebook experienced a 6 percent reduction in request errors, a 20 percent tail latency reduction, and a 5 percent reduction in response header size relative to HTTP/2. This had cascading effects on other metrics as well, indicating that peoples’ experience was greatly enhanced by QUIC.

- However, there were regressions. What was most puzzling was that, despite QUIC being enabled only for dynamic requests, we observed increased error rates for static content downloaded with TCP. The root cause of this would be a common theme we’d run into when transitioning traffic to QUIC: App logic was changing the type and quantity of requests for certain types of content based on the speed and reliability of requests for other types of content. So improving one type of request may have had detrimental side effects for others.

- The experiments showed that QUIC had a transformative effect on video metrics in the Facebook app. Mean time between rebuffering (MTBR), a measure of the time between buffering events, improved in aggregate by up to 22 percent, depending on the platform. The overall error count on video requests was reduced by 8 percent. The rate of video stalls was reduced by 20 percent.

- EricBurnett: I hear this argument a lot, and fail to be convinced. Optimizations at scale matter a lot. Let's posit that 100,000 developer-years have been spent implementing HTTP/2 and tools to interact with it. (Hopefully a great over-estimate). Let's say we have to spend all that again to move to HTTP/3. Is it worth it? Well, per random internet stats I haven't validated, there are about 4b internet users, spending about 3h per day on the internet apiece. I could imagine 5% of that is spent on synchronous resource loading. That's about 10m/day/user - or about 28M years/year spent waiting on resources to load. A 1% savings (the minimum of CloudFlare's range) is 280k human years / year. And that's the bottom end - could be as high as 4% (1.1M years/ year), and without BBR (negatively impacting high packet loss and high throughput connections in particular). Is "person's average time on the internet" comparable to "paid developer time"? No. A lot of that user time is timeboxed "wasted" time, in the endless content scroll. With that in mind, is it worth it overall? Probably. I've tried to estimate in favor of developers, but I still get their one-time effort paid back 2.8-11x _each year_ in user time, under unfavorable conditions. And this is growing rapidly, both in user count and time per user. And that says nothing about the privacy benefits of encrypting headers and whatnot, which you should probably ascribe nonzero value.
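EricBurnett's back-of-the-envelope math checks out, and it can be re-run in a few lines. Every input below is his stated guess (4 billion users, about 10 minutes a day of waiting, which is 5% of 3 hours rounded up from 9, 100,000 developer-years, 1 to 4% savings), not measured data.

```python
MINUTES_PER_YEAR = 365 * 24 * 60

def human_years_waiting(users=4e9, minutes_per_day=10):
    """Human-years spent waiting on resource loads per calendar year."""
    return users * minutes_per_day * 365 / MINUTES_PER_YEAR

def payback_ratio(savings_fraction, dev_years=100_000):
    """How many times per year the one-time developer effort is repaid in user time."""
    return human_years_waiting() * savings_fraction / dev_years

print(round(human_years_waiting() / 1e6, 1))  # ≈ 27.8 million human-years per year
print(round(payback_ratio(0.01), 1), round(payback_ratio(0.04), 1))  # ≈ 2.8 and 11.1
```

The result is linear in every input, so halving his user-time estimate still leaves a payback of well over 1x per year at the low end.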

- Recommendations III — A flavoured tale about similar tastes:

- Some retail businesses have even reported revenue increases of up to 30% from implementing custom recommendations for their customers.

- Since the release of the recommendations on our home page, our customers have been adding around 4 products on average (products they had never bought before!). And the number of items added from this page has increased by 15% 🚀.

- The case of collaborative filtering is more interesting. This approach allows you to recommend products without knowing the context around the product and without needing its description or characteristics. To achieve this we use a traditional approach based on matrix factorization. It consists of factoring the matrix into a product of smaller matrices and multiplying them back together to fill in the gaps.
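A minimal collaborative-filtering sketch of the matrix-factorization idea: the sparse user-by-product matrix is approximated as the product of a user-factor matrix `P` and an item-factor matrix `Q`, learned only from the known cells; multiplying them back fills in the missing ones. The post doesn't say which solver it uses, so stochastic gradient descent, the factor count `k`, the learning rate, and the regularization weight are all illustrative assumptions.

```python
import random

def factorize(ratings, n_users, n_items, k=2, epochs=2000, lr=0.02, reg=0.01, seed=0):
    """Learn P (n_users x k) and Q (n_items x k) from (user, item, value) triples."""
    rng = random.Random(seed)
    P = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

def predict(P, Q, u, i):
    """Reconstructed cell (u, i): dot product of the user and item factor vectors."""
    return sum(pf * qf for pf, qf in zip(P[u], Q[i]))

# Known purchases/ratings; cells (0, 2) and (1, 1) are the "voids" to fill.
ratings = [(0, 0, 5), (0, 1, 4), (1, 0, 4), (1, 2, 1), (2, 0, 1), (2, 1, 1), (2, 2, 5)]
P, Q = factorize(ratings, n_users=3, n_items=3)
print(round(predict(P, Q, 0, 2), 2), round(predict(P, Q, 1, 1), 2))
```

Production systems typically use implicit-feedback variants and libraries such as ALS implementations, but the shape of the computation is the same.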

- Is there anything technology can't ruin? F1 Insights Powered by AWS: Car Analysis/Car Development

- Let go Luke. Use the Force. Complexity Scientist Beats Traffic Jams Through Adaptation:

- Once you build systems, it turns out that some holes in your concepts start to show up. You are faced with problems that you didn’t foresee. That forces you to refine your understanding, to revise your conceptual system. Answers always bring new challenges. But once you solve those challenges, then you can go back and make more soundly based conceptual contributions. I have always gone from theory to practice and back.

- Self-organizing traffic lights have sensors that let them respond to incoming traffic by modifying the timing of the signals. They are not trying to predict; they are constantly adapting to the changing traffic flow. But if you can adapt to the precise demand, then there is no idling. The only reason for cars to wait is because other cars are crossing. The traffic light tells the cars what to do. But because of the sensors, the cars tell the traffic lights what to do, too. There’s this feedback that promotes the formation of platoons, because it’s easier to coordinate 10 10-car platoons than 100 cars, each with its own trajectory. And that spontaneously promotes the emergence of “green waves,” which are platoons of cars that roll through intersection after intersection without stopping. You are not programming “there will be a green wave and it will slow at this speed” into the system. The traffic kind of triggers the green waves themselves. And it’s all self-organized because there’s no direct communication between the traffic lights in different intersections.

- It’s a not so obvious way of controlling systems because in control theory, we want to be sure about what will happen. And in this case, you don’t tell the system what the solution will be. But you design interactions so that the system will be constantly finding desirable solutions. That’s what you want to do, because you don’t know what the problem will be.

- The problem may be that most engineers are taught traditional methods based on predicting what is to be controlled, and they try to improve on those methods. But for complex systems, prediction is almost hopeless. The moment you achieve optimality, the problem changes. The solution is obsolete already.

- What we have shown is that with self-organization, you can have a completely different approach, which you could summarize as shifting from prediction to adaptation.
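A toy version of the adaptive rule, loosely modeled on the counter-and-threshold idea from the published self-organizing traffic-light work (the interview itself doesn't spell the rules out): each tick, the light adds the number of cars waiting at red to a counter and switches once that pressure crosses a threshold, provided the current green has lasted a minimum time. The threshold and minimum-green values are made-up parameters, and the published rule set has more cases, such as not cutting a platoon in half.

```python
class SelfOrganizingLight:
    """Two-approach intersection where either 'NS' or 'EW' has green."""
    def __init__(self, threshold=40, min_green=5):
        self.green = "NS"
        self.counter = 0       # accumulated demand at the red approach
        self.green_ticks = 0   # how long the current green has lasted
        self.threshold = threshold
        self.min_green = min_green

    def tick(self, waiting_ns, waiting_ew):
        """Advance one time step given the cars waiting/approaching on each side."""
        self.green_ticks += 1
        red_waiting = waiting_ew if self.green == "NS" else waiting_ns
        self.counter += red_waiting
        # No schedule: switch only when demand at red is high enough
        # and the current green has run its minimum time.
        if self.counter > self.threshold and self.green_ticks >= self.min_green:
            self.green = "EW" if self.green == "NS" else "NS"
            self.counter = 0
            self.green_ticks = 0
        return self.green

light = SelfOrganizingLight(threshold=10, min_green=3)
print([light.tick(0, 100) for _ in range(3)])  # EW demand wins once min_green is met
```

Nothing here predicts traffic: an empty cross street never steals green time, and a long platoon builds pressure fast, which is the "adaptation over prediction" idea in miniature.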

- If you've ever had to design hardware/software systems, you know you have to consider every possible failure scenario. Ferrari is bricked during upgrade due to no mobile reception while underground. The car behaved exactly as designed, and that's the problem. It's very tempting to have every unexpected double, triple, or quadruple fault map to the "we don't know what's happening, but it must be bad" logic path. In this case: unrecoverable lockdown. Horrible for the user. Why does installing a child's seat trigger the tampering lockdown? Probably because nobody at Ferrari could conceive of anyone having kids. The retry loop then appeared to stop retrying. Why? Some constant somewhere, no doubt. But unless the battery reached a certain minimum threshold, why ever stop retrying? Just back off the timer. And why does the scenario require factory intervention instead of a local tech? Lazy design.
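The retry policy argued for above (back off instead of giving up, and stop only on a hard limit like battery charge) might look like the sketch below. `try_download` and `battery_level` are hypothetical callables, and the delays and 20% battery floor are illustrative assumptions; nothing here reflects Ferrari's actual firmware.

```python
import random
import time

def retry_update(try_download, battery_level, base=1.0, cap=600.0,
                 min_battery=0.2, sleep=time.sleep, rng=random.random):
    """Retry forever with capped exponential backoff plus full jitter.

    Stops only when the battery drops below `min_battery`, leaving the car in
    its previous working state instead of locking it down.
    Returns True on success, False if it stopped to protect the battery.
    """
    attempt = 0
    while battery_level() >= min_battery:
        if try_download():
            return True
        # Clamp the exponent so the delay math never overflows; `cap` bounds the wait.
        delay = min(cap, base * 2 ** min(attempt, 30))
        # Full jitter: wait a random fraction of the capped delay.
        sleep(rng() * delay)
        attempt += 1
    return False
```

Injecting `sleep` and `rng` keeps the policy testable without real waiting, and the only exit other than success is the one condition that actually threatens the vehicle.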

- Moving BBC Online to the cloud. A huge multi-year, multi-team effort that's just about complete. They give an overall approach that should be instructive for other orgs moving to cloud-native.

- Principles: Don’t solve what’s been solved elsewhere; Remove duplication (but don’t over-simplify); Break the tech silos through culture & communication; Organise around the problems; Plan for the future, but build for today; Build first, optimise later; If the problem is complexity, start over; Move fast, release early and often, stay reliable. Smaller releases, done more often, are also an excellent way to minimise risk.

- 110 releases; 6,249 code builds; average build time 3.5 minutes; average time from PR to live 1 day 23 minutes.

- High level layering of the system: Traffic management layer (tens of thousands of requests a second); Website rendering layer (AWS using React, 2,000 lambdas run every second); Business layer (Fast Agnostic Business Layer (FABL) allows different teams to create their own business logic without worrying about the challenges of operating it at scale); Platform and workflow layers.

- Serverless is good: As discussed earlier, serverless removes the cost of operating and maintenance. And it’s far quicker at scaling. When there’s a breaking news event, our traffic levels can rocket in an instant; Lambda can handle this in a way that EC2 auto-scaling cannot.

- A cost comparison would be very informative. Most on Hacker News think their solution is way too complex and costly. Nothing new with that.

- Nicely written. How 30 Lines of Code Blew Up a 27-Ton Generator. This is why I'm against centralized systems. We simply do not need a continuation of old centralized paradigms. The future is systems based on decentralization, redundancy, and dispersal. Also, The Centralized Internet Is Inevitable

- Physicists Discover First Room-Temperature Superconductor: Add too much, and the sample will act too much like metallic hydrogen, metalizing only at pressures that will crack your diamond anvil. Over the course of their research, the team busted many dozens of $3,000 diamond pairs. “That’s the biggest problem with our research, the diamond budget,” Dias said.

- Does Palantir See Too Much?

- The brainchild of Karp’s friend and law-school classmate Peter Thiel, Palantir was founded in 2003. It was seeded in part by In-Q-Tel, the C.I.A.’s venture-capital arm, and the C.I.A. remains a client. Palantir’s technology is rumored to have been used to track down Osama bin Laden

- Essentially, Palantir’s software synthesizes the data that an organization collects. It could be five or six types of data; it could be hundreds. The challenge is that each type of information — phone numbers, trading records, tax returns, photos, text messages — is often formatted differently from the others and siloed in separate databases. Building virtual pipelines, Palantir engineers merge all the information into a single platform.

- Although Palantir is often depicted as a kind of omnipotent force, it is actually quite small, with around 2,400 employees.

- Palantir is pricey — customers pay $10 million to $100 million annually — and not everyone is enamored of the product. Home Depot, Hershey, Coca-Cola and American Express all dropped Palantir after using it.

- These days, around 15,000 Airbus employees use Palantir, and its software has essentially wired the entire Airbus ecosystem through a venture called Skywise, which collects and analyzes data from around 130 airlines worldwide. The information is used for everything from improving on-time performance to preventive maintenance.

- To that end, Palantir’s software was created with two primary security features: Users are able to access only information they are authorized to view, and the software generates an audit trail that, among other things, indicates if someone has tried to obtain material off-limits to them. But the data, which is stored in various cloud services or on clients’ premises, is controlled by the customer, and Palantir says it does not police the use of its products.

- Investors weren’t so sure. In its prospectus, Palantir reported that it was still losing hundreds of millions of dollars: $580 million in 2019, following a similar loss the year before. It also disclosed that just three customers accounted for roughly 30 percent of its revenue.

- What We Have Learned While Using AWS Lambda in Our Production Cycles for More than One Year. I'll skip all the boring benefits, here are the problems they found:

- A Lambda function can run for at most 15 minutes. Moreover, if it is triggered from API Gateway, the duration must be no more than 30 seconds. A 15-minute limit for the cron tasks was way too tight to execute the particular scope of tasks on time.

- when the number of Lambdas mushroomed into more than 30 CloudFormations, the stack started failing with different errors like "Number of resources exceeded", "Number of outputs exceeded"

- Even though AWS provides some basic level of monitoring and debugging, it's still impossible to extend this part and make some custom metrics that could be useful for particular cases and projects.

- Cold starts can take anywhere from 100 milliseconds to a few minutes. Moreover, if you keep your Lambdas in a VPC (Virtual Private Cloud), cold starts take even longer.

- You cannot just open a connection pool to your MongoDB or MySQL servers at the application startup phase and reuse it during the entire lifecycle.

- Besides, the serverless apps are hard to test locally, because usually there are a lot of integrations between AWS services, like Lambdas, S3 buckets, DynamoDB, etc.

- On top of everything else, you can't implement traditional in-process caching the way you would on classic servers.

- The last one worth mentioning is a sophisticated price calculation. Even though AWS Lambda is quite cheap, various supplementary elements can significantly increase the total costs.
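A common partial workaround for the connection-pool limitation is to create the client lazily at module scope, so warm invocations in the same execution environment reuse it and only cold starts pay the setup cost. A minimal Python sketch: `make_connection` is a hypothetical placeholder for your database driver's connect call, while `handler(event, context)` follows the standard Python Lambda handler signature.

```python
import time

_connection = None  # module scope survives across warm invocations of one execution environment

def make_connection():
    """Hypothetical stand-in for a MySQL/MongoDB client constructor."""
    return {"created_at": time.time()}

def get_connection():
    global _connection
    if _connection is None:
        # Only a cold start (or a previously discarded connection) pays this cost.
        _connection = make_connection()
    return _connection

def handler(event, context):
    conn = get_connection()
    return {"statusCode": 200, "connection_id": id(conn)}
```

This is not a real shared pool: each concurrent execution environment opens its own connection, so a traffic spike can still exhaust the database's connection limit, which is why the complaint above stands.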

- Cross-platform development sucks. Netflix shares their experience of using Kotlin Multiplatform for an app used to produce TV shows and movies. They didn't run away screaming, so that's something. Given unreliable networks all over the world, they want it to work offline. Kotlin Multiplatform allows you to use a single codebase for the business logic of iOS and Android apps. So far it's working well for them: nearly 50% of their business logic is shared between iOS and Android.

- Comments on: The Unwritten Contract of Solid State Drives: Observation 1 - Application log structure increases the scale of write size. This is expected with an LSM. Observation 2 - The scale of read requests is low. Observation 3 - RocksDB doesn't consume enough device bandwidth. Observation 4 - Frequent data barriers limit request scale. Observation 5 - Linux buffered I/O limits request scale.

- Revolutionizing Money Movements at Scale with Strong Data Consistency:

- Versioning is essential to promote consistency across two asynchronous systems.

- End-to-end integration tests with test tenancy and a staging environment so that we could expose and fix bugs.

- Continuous validation is essential for migration and rollout. With it, you can catch issues right after the rollout starts.

- Comprehensive monitoring and alerts shorten the time it takes to detect and mitigate issues.

- The foundation of a highly reliable payment system includes exponential retries of temporarily failing payments over a long period of time.
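Versioning and retries combine naturally: if every update carries a monotonically increasing version, a consumer can apply retried, duplicated, or reordered messages idempotently by ignoring anything not newer than what it already holds. A minimal sketch with hypothetical names (`LedgerView`, `payment_id`); the post does not describe its code at this level.

```python
from dataclasses import dataclass

@dataclass
class Update:
    payment_id: str
    version: int
    status: str

class LedgerView:
    """Downstream copy of payment state, fed by an asynchronous stream."""
    def __init__(self):
        self.state = {}  # payment_id -> (version, status)

    def apply(self, update):
        """Apply `update` if it is newer; return whether state changed."""
        current = self.state.get(update.payment_id)
        # Drop duplicates (same version) and stale reordered updates (lower version).
        if current is not None and update.version <= current[0]:
            return False
        self.state[update.payment_id] = (update.version, update.status)
        return True

view = LedgerView()
view.apply(Update("p1", 2, "SETTLED"))
view.apply(Update("p1", 1, "PENDING"))  # arrives late, ignored
print(view.state["p1"])
```

Because `apply` is idempotent, the producer can retry aggressively with exponential backoff without risking double-applied or rolled-back state.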

- 40 milliseconds of latency that just would not go away: we do know what they discovered: the upstream (open source) release had forgotten to deal with the Nagle algorithm. Yep. Have you ever looked at TCP code and noticed a couple of calls to setsockopt() and one of them is TCP_NODELAY? That's why. When that algorithm is enabled on Linux, TCP tries to collapse a bunch of tiny sends into fewer bigger ones to not blow a lot of network bandwidth with the overhead. Unfortunately, in order to actually gather this up, it involves a certain amount of delay and a timeout before flushing smaller quantities of data to the network. In this case, that timeout was 40 ms and that was significantly higher than what they were used to seeing with their service. In the name of keeping things running as quickly as possible, they patched it, and things went back to their prior levels of performance.
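The fix is a single socket option. In Python's standard `socket` module it looks like the sketch below; the loopback listener exists only to make the snippet self-contained.

```python
import socket

def connect_no_delay(host, port):
    """Open a TCP connection with Nagle's algorithm disabled (TCP_NODELAY)."""
    sock = socket.create_connection((host, port))
    # Send small writes immediately instead of holding them back
    # while earlier data is still unacknowledged.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock

# Self-contained demo against a loopback listener on an ephemeral port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
client = connect_no_delay("127.0.0.1", server.getsockname()[1])
print(client.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)  # True
client.close()
server.close()
```

The trade-off is more, smaller packets on the wire, which is usually the right call for latency-sensitive request/response traffic.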

- Very helpful. How to read a log scale

- Even Facebook evolves. I'm always surprised to learn Facebook hasn't always had the best of everything. They make it work and then iterate based on experience. Throughput autoscaling: Dynamic sizing for Facebook.com:

- Our peak load generally happens when it’s evening in Europe and Asia and most of the Americas are also awake. From there, the load slowly decreases, reaching its lowest point in the evening, Pacific Time.

- Before autoscale, we statically sized the web tier, which means that we manually calculated the amount of global capacity we needed to handle the peak load with the DR buffer for a certain projected period of time. This was very inefficient, as the web tier didn’t need all those machines at all hours.

- By using the autoscale methodology and having all these safety mechanisms, we have been dynamically sizing the Facebook web tier for more than a year now. This allows us to free up servers reserved for web at off-peak time and let other workloads run until web needs them back for the peak time. The number of servers we can repurpose is considerably large because web is a large service with a significant difference between peak and trough. All unneeded servers go into the elastic compute pool at Facebook.
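The gap between static and dynamic sizing is easy to see with a little arithmetic. A minimal sketch, assuming a known per-server throughput and a fixed headroom fraction standing in for the DR buffer; the load curve and numbers are invented, and Facebook's real system uses a much richer model plus the safety mechanisms described above.

```python
import math

def servers_needed(load_rps, per_server_rps, headroom=0.25):
    """Servers required to serve `load_rps` while keeping `headroom` spare capacity."""
    return math.ceil(load_rps * (1 + headroom) / per_server_rps)

def freed_server_hours(hourly_load, per_server_rps, headroom=0.25):
    """Server-hours a dynamically sized tier can lend out versus static peak sizing."""
    static = servers_needed(max(hourly_load), per_server_rps, headroom)
    return sum(static - servers_needed(load, per_server_rps, headroom)
               for load in hourly_load)

# Invented daily curve: 8 peak hours, 8 shoulder hours, 8 trough hours.
day = [100_000] * 8 + [60_000] * 8 + [30_000] * 8
print(servers_needed(max(day), 1000), freed_server_hours(day, 1000))
```

Static sizing holds 125 servers all day; sizing to the hour frees 1,096 server-hours daily in this made-up curve, which is the capacity that can go to the elastic pool.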

- How you could have come up with Paxos yourself. Probably not. One of my worst bugs ever was a deadlock in a primary-secondary replication protocol on bootup. You can get something to work, sure. But until the protocol is proven to work you may cause the potential loss of millions of dollars.

- You have all this data in multiple data warehouses. Microservices, am I right? You need to get all that data into a database where it's useful in an online environment. Here's how Netflix does it. Bulldozer: Batch Data Moving from Data Warehouse to Online Key-Value Stores: Bulldozer is a self-serve data platform that moves data efficiently from data warehouse tables to key-value stores in batches. It leverages Netflix Scheduler for scheduling the Bulldozer Jobs. Netflix Scheduler is built on top of Meson which is a general purpose workflow orchestration and scheduling framework to execute and manage the lifecycle of the data workflow. Bulldozer makes data warehouse tables more accessible to different microservices and reduces each individual team’s burden to build their own solutions.

- We eventually will have decentralized systems. Not for all the nerd knob philosophical reasons we all love, but for the only reason that ever counts: it's required to do the job. What job requires decentralization? Space exploration. From To Boldly Go Where No Internet Protocol Has Gone Before: A data packet traveling from Earth to Jupiter might, for example, go through a relay on Mars, Cerf explained. However, when the packet arrives at the relay, some 40 million miles into the 400-million-mile journey, Mars may not be oriented properly to send the packet on to Jupiter. “Why throw the information away, instead of hanging on to it until Jupiter shows up?” Cerf said. This store-and-forward feature allows bundles to navigate toward their destinations one hop at a time, despite large disruptions and delays. Also, Moxie Marlinspike On Decentralization
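Store-and-forward can be sketched as a relay that queues bundles instead of dropping them when the next hop is out of contact, then flushes the queue when a contact window opens. This is a toy model with hypothetical names (`Relay`, `link_up`); it is not the Bundle Protocol or any DTN implementation.

```python
from collections import deque

class Relay:
    def __init__(self):
        self.stored = deque()  # bundles held while the next hop is unreachable

    def receive(self, bundle, next_hop, link_up):
        """Always keep the bundle; forward right away only if the link is up."""
        self.stored.append(bundle)
        if link_up:
            self.flush(next_hop)

    def flush(self, next_hop):
        # Called when a contact window opens: forward everything held, in order.
        while self.stored:
            next_hop.append(self.stored.popleft())

mars = Relay()
jupiter_inbox = []
mars.receive("bundle-1", jupiter_inbox, link_up=False)  # Jupiter out of view: hold it
mars.receive("bundle-2", jupiter_inbox, link_up=False)
mars.flush(jupiter_inbox)  # "until Jupiter shows up"
print(jupiter_inbox)
```

The contrast with IP routing is the `stored` queue: a conventional router with no route simply drops the packet, while a DTN node treats custody of the data as its job.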

- It's about time...for time series databases. Amazon announced the availability of Timestream. Aiven for M3 is in beta. There's InfluxDB. It's always seemed odd to me that time is often the most important component of data yet it's not a first class data type. It's a pain. And these really should be paired with a git type model so changes over time are also first class data types.

Soft Stuff:

- NGINX Service Mesh: a fully integrated lightweight service mesh that leverages a data plane powered by NGINX Plus to manage container traffic in Kubernetes environments.

- TimescaleDB 2.0: A multi-node, petabyte-scale, completely free relational database for time-series. hardwaresofton: Clickhouse and Timescale are different types of databases -- Clickhouse is a columnar store and Timescale is a row-oriented store that is specialized for time series data with some benefits of columnar stores[0].

- HiFive: platform for professional RISC-V developers. HiFive Unmatched enables developers to create the RISC-V-based software they need for RISC-V platforms.

- cdk-patterns/serverless: The Lambda Circuit Breaker. This is an implementation of the simple webservice pattern only instead of our Lambda Function using DynamoDB to store and retrieve data for the user it is being used to tell our Lambda Function if the webservice it wants to call is reliable right now or if it should use a fallback function.

- active-logic/activelogic-cs: Easy to use, comprehensive Behavior Tree (BT) library built from the ground up for C# programmers.

- americanexpress/unify-jdocs: a JSON manipulation library. It completely eliminates the need to have model / POJO classes and instead works directly on the JSON document. Once you use this library, you may never want to return to using Java model classes or using JSON schema for JSON document validation.

- facebookincubator/CG-SQL: CG/SQL is a code generation system for the popular SQLite library that allows developers to write stored procedures in a variant of Transact-SQL (T-SQL) and compile them into C code that uses SQLite’s C API to do the coded operations. CG/SQL enables engineers to create highly complex stored procedures with very large queries, without the manual code checking that existing methods require.
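The circuit-breaker logic behind the cdk-patterns entry is small. Here is a minimal in-memory Python sketch of the classic closed/open/half-open states, assuming a failure threshold and a cool-down period. cdk-patterns keeps this state in DynamoDB so it is shared across Lambda invocations; its exact fields and thresholds are not reproduced here.

```python
import time

class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # open: don't even try the unreliable service
            # Half-open: the cool-down has passed, so let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            return fallback()
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```

Failing fast while open is the point: callers get the fallback immediately instead of waiting on timeouts from a service that is already struggling.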

Pub Stuff:

- Ocean Vista: Gossip-Based Visibility Control for Speedy Geo-Distributed Transactions: The paper is about providing strict serializability in geo-replicated databases. The technique it uses can be summarized as "geo-replicate-ahead transactions". First the transaction T is replicated to all the parties across the datacenters. The parties then check that the watermark rises above T, which ensures that all transactions up to and including T have been replicated successfully to all the parties. Then T can be executed asynchronously at each party.
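A toy sketch of the watermark rule, assuming each coordinator's watermark is the lowest timestamp it is still replicating (or the current clock if nothing is pending) and that the global watermark is the minimum across coordinators. Ocean Vista learns that minimum by gossip; here it is computed directly, and the `Coordinator` class and integer timestamps are hypothetical.

```python
class Coordinator:
    """Hypothetical party that tracks transactions it has started but not finished replicating."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.pending: set[int] = set()

    def start(self, ts: int) -> None:
        self.pending.add(ts)  # geo-replication of txn ts has begun

    def replicated(self, ts: int) -> None:
        self.pending.discard(ts)  # txn ts is now stored at every party

    def watermark(self, clock: int) -> int:
        # Every txn below this timestamp has finished replicating from this party;
        # new txns are assumed to receive timestamps >= clock.
        return min(self.pending, default=clock)


def global_watermark(coordinators, clock):
    # Ocean Vista gossips watermarks to learn this minimum; here we compute it directly.
    return min(c.watermark(clock) for c in coordinators)


def visible(coordinators, ts, clock):
    """Txn ts may execute once the global watermark has risen above it."""
    return ts < global_watermark(coordinators, clock)
```

Because no party executes T until every earlier transaction is known to be fully replicated, execution at each party can proceed independently without further cross-datacenter coordination.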

- The case for a learned sorting algorithm: Today’s paper choice builds on the work done in SageDB, and focuses on a classic computer science problem: sorting. On a 1 billion item dataset, Learned Sort outperforms the next best competitor, RadixSort, by 1.49x. What really blew me away is that this result includes the time taken to train the model used!
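A simplified illustration of the idea behind learned sorting: a monotone model of the keys' CDF predicts where each key belongs, keys are scattered into buckets by that prediction, and a cheap fix-up sort inside each bucket finishes the job. The paper trains a more elaborate model; this sketch substitutes an empirical CDF built from a random sample, and `learned_sort` and its parameters are hypothetical.

```python
import bisect
import random


def train_cdf(sample):
    """'Train' a monotone CDF model: the fraction of sampled keys <= x."""
    s = sorted(sample)
    n = len(s)

    def cdf(x):
        return bisect.bisect_right(s, x) / n

    return cdf


def learned_sort(keys, num_buckets=None, sample_size=1000, seed=0):
    """Hypothetical model-guided bucket sort; not the paper's implementation."""
    if len(keys) <= 1:
        return list(keys)
    num_buckets = num_buckets or max(1, len(keys) // 10)
    rng = random.Random(seed)
    cdf = train_cdf(rng.sample(keys, min(sample_size, len(keys))))
    buckets = [[] for _ in range(num_buckets)]
    for k in keys:
        # Predicted position in [0, 1] mapped to a bucket; cdf(k) == 1.0 is clamped.
        b = min(int(cdf(k) * num_buckets), num_buckets - 1)
        buckets[b].append(k)
    out = []
    for bucket in buckets:
        bucket.sort()  # fix-up pass: buckets stay small when the model is accurate
        out.extend(bucket)
    return out
```

Because the model is monotone, a larger key never lands in an earlier bucket, so concatenating the sorted buckets yields a correctly sorted result even when the model's predictions are imprecise; accuracy only affects speed.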

- Software Engineering at Google: We catalog and describe Google's key software engineering practices.

- Designing Event-Driven Systems: Author Ben Stopford explains how service-based architectures and stream processing tools such as Apache Kafka® can help you build business-critical systems. Oh, and it's free.

- The Synchronous Data Center: The goal of this paper is to start a discussion about whether the asynchronous model is (still) the right choice for most distributed systems, especially ones that belong to a single administrative domain. We argue that 1) by using ideas from the CPS domain, it is technically feasible to build datacenter-scale systems that are fully synchronous, and that 2) such systems would have several interesting advantages over current designs.

- Orbital edge computing: nanosatellite constellations as a new class of computer system: Orbital Edge Computing (OEC) is designed to process sensor data on the nanosatellites themselves instead of downlinking all of it. Coverage of a ground track and processing of image tiles are divided up between members of a constellation in a computational nanosatellite pipeline (CNP). Also, Introducing Azure Orbital: Process satellite data at cloud-scale. You want to move datacenters as close as possible to the satellite downlinks.
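A minimal sketch of splitting one frame's work across a constellation, assuming tiles are handed out round-robin so each of N satellites processes roughly 1/N of them during its pass. The CNP scheduling in the paper is more involved; `assign_tiles` is a hypothetical helper.

```python
def assign_tiles(rows, cols, num_sats):
    """Hypothetical round-robin plan: satellite index -> list of (row, col) tiles it processes."""
    plan = {sat: [] for sat in range(num_sats)}
    tiles = ((r, c) for r in range(rows) for c in range(cols))
    for i, tile in enumerate(tiles):
        plan[i % num_sats].append(tile)
    return plan
```

Every tile is assigned to exactly one satellite, so adding satellites shrinks each one's per-frame compute budget instead of requiring more onboard processing power.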

- Loon SDN: Applicability to NASA's next-generation space communications architecture: The Loon SDN is a large-scale implementation of a Temporospatial SDN and a cloud service for the interoperation and coordination of aerospace networks. The system schedules the physical wireless topology and the routing of packets across the terrestrial, air, and space segments of participating aerospace networks based on the propagated motion of their platforms and high-fidelity modeling of the relationships, constraints, and accessibility of wireless links between them. The Loon SDN is designed to optimize the operational control of aerospace networks; provide network operators with greater flexibility, situational awareness, and control; facilitate interoperability between networks; and to coordinate interference avoidance.
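A toy illustration of routing over a time-varying topology: each link is usable only during a window (for example, while two platforms are within range), and a path is computed with Dijkstra's algorithm over the links that are up at a given instant. The Loon SDN also schedules the topology itself and models far richer link constraints; the `Link` fields and `route_at` are hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    a: str
    b: str
    start: float    # time the link becomes usable (hypothetical field)
    end: float      # time it stops being usable
    latency: float


def route_at(links, src, dst, t):
    """Lowest-latency path from src to dst using only links up at time t, or None."""
    graph = {}
    for link in links:
        if link.start <= t < link.end:
            graph.setdefault(link.a, []).append((link.b, link.latency))
            graph.setdefault(link.b, []).append((link.a, link.latency))
    dist, prev = {src: 0.0}, {}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, w in graph.get(node, []):
            nd = d + w
            if nd < dist.get(nxt, float("inf")):
                dist[nxt], prev[nxt] = nd, node
                heapq.heappush(heap, (nd, nxt))
    return None
```

Recomputing routes as link windows open and close is the simplest way to follow moving platforms; a temporospatial controller goes further by planning those changes ahead of time.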

- Architecture Playbook: This playbook is targeted at providing practical tools for creating your IT architecture or design faster and better, so the focus is 100% on the real thing, guided by a set of leading principles.

- Concurrency Control and Recovery in Database Systems. It's free!

- Fast and Effective Distribution-Key Recommendation for Amazon Redshift: a new method for allocating data across servers. In experiments involving queries that retrieved data from multiple tables, our method reduced communications overhead by as much as 97% relative to the original, unoptimized configuration.
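To make the objective concrete, here is an exhaustive-search sketch: each table picks one distribution-key column, a join is collocated (it needs no network shuffle) when both sides are distributed on the join columns, and the goal is to maximize the total weight of collocated joins. The paper's contribution is solving this quickly at scale; this brute force is practical only for a handful of tables, and the input format and `best_dist_keys` are assumptions.

```python
from itertools import product


def best_dist_keys(candidates, joins):
    """Hypothetical brute force.

    candidates: table -> list of candidate key columns
    joins: list of (table1, column1, table2, column2, weight)
    """
    tables = sorted(candidates)
    best, best_score = None, -1.0
    for choice in product(*(candidates[t] for t in tables)):
        keys = dict(zip(tables, choice))
        # A join avoids redistribution only if both tables use its join columns as keys.
        score = sum(w for t1, c1, t2, c2, w in joins
                    if keys[t1] == c1 and keys[t2] == c2)
        if score > best_score:
            best, best_score = keys, score
    return best, best_score
```

With one `orders` table joined to both `customers` and `lineitem`, the search keeps whichever key serves the heavier join, which is exactly the trade-off a recommender has to make across a real workload.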

Reader Comments (4)

Hi, your link to "How you could have come up with Paxos yourself" is broken, thanks. I like your weekly

Fixed. Thanks

Always appreciate this STUFF. Thank you!

Your "Introducing Azure Orbital: Process satellite data at cloud-scale" link is broken.

I think you meant https://azure.microsoft.com/nl-nl/blog/introducing-azure-orbital-process-satellite-data-at-cloudscale/

Btw: AWS already had AWS Ground Station a year before ;-)

https://aws.amazon.com/about-aws/whats-new/2019/05/announcing-general-availability-of-aws-ground-station-/