Friday

Jul312020

Stuff The Internet Says On Scalability For July 31st, 2020

Friday, July 31, 2020 at 9:25AM

Friday, July 31, 2020 at 9:25AM Hey, it's HighScalability time!

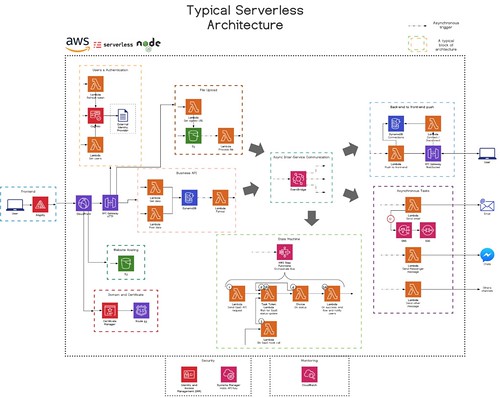

Serverless is really complex. Or is it? @paulbiggar sparked a thoughtful Twitter thread.

Do you like this sort of Stuff? Without your support on Patreon this kind of Stuff won't happen.

Usually I pitch my book Explain the Cloud Like I'm 10 here, but if you're an author with books on Amazon I'd like to ask you to give Best Sellers Rank a try. I started it to send data to me about my own books, but in the best bootstrapping tradition I extended it to work for the entire planet.

Number Stuff:

- 10 billion: breached records in Troy Hunt's Have I Been Pwned database. Up from 1 billion in 2016.

- 2.7x: Google TPUs faster than the previous generation.

- 98%: of international internet traffic is ferried around the world by subsea cables. Google will build a new subsea cable—Grace Hopper—which will run between the United States, the United Kingdom and Spain.

- 41%: of Google's search results refer to Google products. Does this break Google's social contract of no cost data scraping for the benefit of all?

- 1.5 years: half life of a microservice at Uber.

- 5%: of world's websites hosted by Wix. 700 million uniques per month. 1.2 million tests executed in 5 days using lambda to execute browser based testing.

- 300 req/s: needed to service Stack Exchange, StackOverflow, etc. Peak: 450 req /s. 55 TB data / month. 4 SQL SERVERS. 2 REDIS SERVERS. 3 TAG ENGINE SERVERS. 3 ELASTICSEARCH SERVERS. 2 HAPROXY SERVERS. 600, 000 sustained web socket connections. 12.2 ms to render the home page. 18.3 ms to render a questions page. 1.3 billion page views per month.

- 40: bills per 23 seconds dispensed from hacked ATM systems. Some incidents indicate that the black box contains individual parts of the software stack of the attacked ATM.

- 1st: milestone in warehouse scale computing was the VAXcluster.

- 47%: growth in the Azure cloud, down from 59% in the previous quarter.

- millionth of a billionth of a billionth of a billionth of a second: the smallest possible increment of time.

- 82 Million: hours watched in a week on top 5 twitch games. Twitch has 2.2 million average weekly viewers.

- $97,000: how much you make from 150 million YouTube views.

- 15.8%: increase in US mobile internet speeds and 19.6% on Fixed Broadband.

- $7.2 billion: record US online grocery sales in June.

- 23 million: Apple developers.

Quotable Stuff:

- @tsimonite: Larry Page in 2004: "We want to get you out of Google and to the right place as fast as possible" Google in 2020: Google is the right place, no outward links for you. Larry Page in 2004: "We want to get you out of Google and to the right place as fast as possible"Google in 2020: Google is the right place, no outward links for you

- @TwitterSupport: To recap: 130 total accounts targeted by attackers. 45 accounts had Tweets sent by attackers. 36 accounts had the DM inbox accessed. 8 accounts had an archive of “Your Twitter Data” downloaded, none of these are Verified.

- Charles Fitzgerald: Hyperclown status is best thought of as the ratio of cloud rhetoric to CAPEX spending. The most efficient hyperclowns maximize their marketing while minimizing actual spend.

- Dylan: They said, well, you’ve been in the App Store for eight years and you haven’t contributed anything. That is like, wow, this is a couple days before they’re about to have their really feel-good WWDC where they tell all the developers how much we appreciate you. No, we have not contributed to App Store revenue. However, Apple sells $1000 phones. And these $1000 phones they can only sell because of this entire ecosystem so to say that we’ve added no value to their system, that’s offensive. It was a situation where both of us benefitted.

- DSHR: So even long before 2017 no-one could have gained access to Google's systems by phishing an employee. Twitter's board should fire Jack Dorsey for the fact that, after at least seven years of insider attacks, their systems were still vulnerable.

- @Steve_Yegge: Using Google APIs is like a Choose Your Own Adventure where *almost* every path continues for 2 years before it is deprecated and dies. I will never EVER use Google Cloud Endpoints again, for instance, nor App Engine Java. It's insane how they neglect their docs and libraries.

- @alistairmbarr: Some Googlers pushed back against adding a 4th ad to the top of search results because it was such low quality next to the first organic result. Google went ahead anyway because it was under pressure to meet Wall St expectations

- Jared Short: When building our application using serverless and cloud-native services, the building blocks we use have greater shared context among individuals; access to a richer vocabulary of pieces. A byproduct of having discrete components means our diagrams communicate intention and purpose with more clarity and less abstraction...What many of us are saying and I think we are finally figuring out how to, is that serverless helps communicate business complexities in a standardized way. Clear purpose components and services help reduce abstraction, and make it easier to understand what you are trying to build. In addition, you can usually understand where your system will have distinct types of issues (scalability, reliability, security) and architect specifically to address those areas without needing to increase the complexity of all the other parts of the system.

- @lhochstein: I bet "received input in a form never imagined by the developer" is a more common failure mode for distributed systems than "race conditions".

- Ammar: Linus Tech Tips (a technology channel) was the channel that produced most trending [YouTube] videos in 2019. It is a technology (not entertainment nor music) and personal (not corporate) channel. More than that, the 2nd and 3rd channels after Linus Tech Tips were cooking channels.

- @tmclaughbos: And I can tell you no team has just switched from containers to serverless and magically gotten faster. They probably got slower.

- pm edited: My experience with [K8s] has been extremely negative. Have been working with it for the past few years in three companies I consulted for. In one instance, they replaced two rest APIs running on EC2 with handrolled redundancy and zero downtime automated deployments using only AWS CLI and a couple of tiny shellscripts with a kubernetes cluster. Server costs jumped 2000% (not a typo) and downtimes became an usual thing for all sorts of unknown reasons that often took hours for the “expert” proponents of kubernetes to figure out.

- Gerrit De Vynck: On smartphones, the change has been more pronounced. From June 2016 to June 2019, the proportion of mobile searches that led to clicks on free web links dropped to 27% from 40%. No-click searches, which Fishkin says suggests the user found the information they needed on Google, rose to 62% from 56%. Meanwhile, clicks on ads more than tripled, Jumpshot data show. “This has been the slowest but most consistent march in tech,” venture capitalist Bill Gurley wrote on Twitter last year. “If you are still holding out hope for a SEO strategy you must be intentionally ignoring all of the data in front of you,” he added, referring to search engine optimization, a popular way of improving websites to rank higher in Google’s free results.

- @schneems: If you provide an API client that doesn't include rate limiting, you don't really have an API client. You've got an exception generator with a remote timer.

- @eastdakota: Details on how we caused an 23 min outage for~50% of @Cloudflare's network today. The root cause was a typo in a router configuration on our private backbone. We've applied safeguards to ensure a mistake like this will not cause problems in the future.

- @QuinnyPig: Lockin: people, data gravity, The second form of lock-in that gets overlooked is whatever your provider is using for Identity and Access Management (IAM).

- hibikir: Having worked at some of those companies, along with small startups, the biggest difference isn't really plain old sophistication, but expenses so high as to make the engineering effort worthwhile. If your production system is 10 instances on some random cloud, a 10% efficiency savings saves me 1 instance, so maybe $2k a year. Taking into account opportunity costs vs doing things that raise revenue, said startup would consider the effort a waste of time unless it took a few hours. With the same architecture, but instead 20K instances, then suddenly that 10% is saving 2000 instances, and 4 million a year. Unless there's a major engineering shortage, chances are that spending a over a month on 4 million in yearly savings will be completely justified, and would even be a highlight in someone SRE's review.

- Gartner: Adopt a tactical requirement approach to your CDN selection. Choose a CDN provider that will support all your Tier 1 and at least 75% to 85% of your Tier 2 requirements, and use location, service differentiation and support as key considerations rather than price.

- rdkls: All these experts saying don't lift and shift. We did, it worked well. Afterwards we continue to update and optimize. I headed up cost op initiative with each team, strong management support, our bill continues to drop. You need strong direction, clear timelines and diplomacy and everyone aligned (not hard when cloud is clearly the future). Until we had that (new CTO) the initiative floundered. We had a big countdown on one TV till DC exit.

- Slack: As a result of the pandemic, we’ve been running significantly higher numbers of instances in the webapp tier than we were in the long-ago days of February 2020. We autoscale quickly when workers become saturated, as happened here — but workers were waiting much longer for some database requests to complete, leading to higher utilization. We increased our instance count by 75% during the incident, ending with the highest number of webapp hosts that we’ve ever run to date.

- Corey Quinn: A majority of spend across the board is and always has been the direct cost of EC2 instances. The next four are, in order, RDS, Elastic Block Store (an indirect EC2 cost), S3, and data transfer (yup, another EC2 cost). That's right. The cloud really is a bunch of other people's virtualized computers being sold to you. The rest of it is largely window dressing.

- Andy Cockburn: Given the high proportion of computer science journals that accept papers using dichotomous interpretations of p, it seems unreasonable to believe that computer science research is immune to the problems that have contributed to a replication crisis in other disciplines. Next, we review proposals from other disciplines on how to ease the replication crisis, focusing first on changes to the way in which experimental data is analyzed, and second on proposals for improving openness and transparency.

- @mcnees: "The whole matter of the world may have been present at the beginning, but the story it has to tell may be written step by step." — Georges Lemaître

- @abbyfuller: Software engineering doesn’t need to be some crazy thing where you have to work nights and weekends and make it your sole hobby and love to code! It’s ok to do it as “just” a job. It’s a job I happen to like, but it’s still a job.

- @mipsytipsy: the key is to emit only a single arbitrarily-wide event per request per service, and pack data in densely (typically 300-400 dimensions per event for a mature service). and then at very high throughput, some form of intelligent sampling strategy should kick in

- AEROSPACE SAFETY ADVISORY PANEL: SpaceX and Boeing have very different philosophies in terms of how they develop hardware. SpaceX focuses on rapidly iterating through a build-test-learn approach that drives modifications toward design maturity. Boeing utilizes a well-established systems engineering methodology targeted at an initial investment in engineering studies and analysis to mature the system design prior to building and testing the hardware.

- Stuff Made Here: Using your tools to build new tools is one of life's great pleasures.

- @NinjaEconomics: "Our [Facebook] algorithms exploit the human brain's attraction to divisiveness."

- dougmwne: In 13 years at 5 companies, I have literally never had the experience you're describing. Workplaces were always mainly adversarial and extractive with a thin veneer of "we're a family" and "I work with the most amazing people" BS spread on top. I think my coworkers have all generally been fine and competent people, it's just that the workplace is a hostile environment where everyone is either trying to swim with the big fish or at least keep from drowning. My parents are retired now, but describe the work place in your terms of real human connection. I can't help but feel that world is gone. One of their older friends was shocked that I worked from home and couldn't understand why I'd want to separate myself from people like that and forgoe all the friendships. But again, I think that world is mostly gone, chewed up by toxic businesses culture which is why so many people would rather sit at home than deal with it.

- Shourya Pratap Singh: While On-Demand sounds good, but it can get pretty costly. Recently, we changed some code that required a particular table to be accessed more often, so we switched to On-Demand from provisioned to monitor the capacities, and it costed us almost 2x! We did this to decide the future capacity but the costs were pretty well visible in just 3 days of making the switch. Also, it is to be noted that on-demand to provisioned capacity mode conversion is allowed only once per day. As a general rule of thumb, avoid on-demand as most of the use-cases have a predictable load.

- Stephen O'Grady: But it seems equally plausible that Anthos and BigQuery are merely the first manifestation of a fundamentally new approach for Google. For years, would be challengers, armed only with largely similar offerings, dutifully charged up the hill that was an incumbent AWS operating at velocity in its core market. Most were cut down. Google, in particular, never seemed to benefit from this approach. More recently, however, there have been signs of creativity.

- cs702: The main point of this article is that Robinhood has brought Silicon Valley-style maximization of user engagement to retail stock-market trading without regard for the psychological, social, and financial consequences to the people who use the service.

- Rule11: Sometimes we have to remember the cost of the network is telling us something—just because we can do a thing doesn’t mean we should. If the cost of the network forces us to consider the tradeoffs, that’s a good thing. And remember that if your toaster makes your bread at the same time every morning, you have to adjust to the machine’s schedule, rather than the machine adjusting to yours…

- crazygringo: Unfortunately, the author/article seems to completely miss the meaning of "joins don't scale". Obviously indexed joins on a single database server scale just fine, that's the entire selling point of an RDMBS! The meaning of "joins don't scale" is that they don't scale across database servers, when your dataset is too big to fit in a single database instance. Joins scale across rows, they don't scale across servers. Now a lot of people don't realize how insanely powerful single-server DB's can be. A lot of people that assume they need to architect for a multi-server DB don't realize they can get away with a hugely-provisioned SSD single-server indexed database with a backup, with performant queries.

- jonathantn: The bottle line is that thinking in "sets of data" instead of "individual records" will pay off handsomely on the operational cost of such a data ingestion pipeline.

- The Orbital Index: Al Amal also carries a high resolution imager, capable of 12 megapixel monochrome images (with discrete RGB filters) at 180 fps, creating an opportunity for the first 4K video from another planet—just not in anywhere near real-time since the probe sports 250 kbps - 1.6 Mbps of bandwidth depending on distance to Earth.

- Julia Ebert: Positive feedback. Longer intervals between observation gave consistently higher accuracy. Taking observations more frequently got samples quicker but since they were so spatially correlated it meant the overall decisions were less accurate because they didn't represent the true environment.

- @mipsytipsy: if you can solve your app problem with a monolith, do that. if you can solve your architecture problem with a LAMP stack, do that. if you can debug your problem with printf to stdout, do that. just watch out for the day when the solution reaches its edge & becomes the new problem.

- martintrapp: AD does automatic differentiation, PLLs transform a generative model into some suitable form to perform automatic Bayesian inference, e.g. by using AD and black-box variational inference. Or said differently, in a PPL you specify the forward simulation of a generative process and the PPL helps to automatically invert this process using black-box algorithms and suitable transformations. Without a PPL, you would traditionally write your code for your model and would have to implement a suitable inference algorithm yourself. With a PPL you only specify the generative process and don't have to implement the inference side of things nor care about an implementation of your model that is suitable for inference.

- Samuel Greengard: The value of neuromorphic systems is they perform on-chip processing asynchronously. Just as the human brain uses the specific neurons and synapses it needs to perform any given task at maximum efficiency, these chips use event-driven processing models to address complex computing problems. The resulting spiking neural network—so called because it encodes data in a temporal domain known as a "spike train"—differs from deep learning networks on GPUs. Existing deep learning methods rely on a more basic brain model for handling tasks, and they must be trained in a different way than neuromorphic chips.

- @GemmaBlackUK: I "love" serverless. But infrastructure complexity, plus setting up IAM policies of least privilege against each resource is why I have decided instead to go to containers for the majority of my backend apps. If it can be abstracted away, I think I'd be tempted to revisit it.

- Gerry McGovern: In 1994, there were 3,000 websites. In 2019, there were estimated to be 1.7 billion, almost one website for every three people on the planet. Not only has the number of websites exploded, the weight of each page has also skyrocketed. Between 2003 and 2019, the average webpage weight grew from about 100 KB to about 4 MB. The results? “In our analysis of 5.2 million pages,” Brian Dean reported for Backlinko in October 2019, “the average time it takes to fully load a webpage is 10.3 seconds on desktop and 27.3 seconds on mobile.” In 2013, Radware calculated that the average load time for a webpage on mobile was 4.3 seconds.

- Tyler Treat: Luckily, there is a better solution—one that fits our serverless model and enables us to control external traffic while allowing App Engine services to securely communicate internally. IAP supports context-aware access, which allows enforcing granular access controls for web applications, VMs, and GCP APIs based on an end-user’s identity and request context. Essentially, context-aware access brings a richer zero-trust model to App Engine and other GCP services.

- @benthompson: Notable to see Apple confirming a point I’ve been trying to make: the company believes it is entitled to *all* commerce that happens on an iPhone.

- throwaway_aws: An Amazon spokesman said the company doesn’t use confidential information that companies share with it to build competing products" Maybe...but in the past, AWS proactively looked at traction of products hosted on its platform, built competing products, and then scraped & targeted customer list of those hosted products. In fact, I was on a team in AWS that did exactly that. Why wouldn't their investing arm do the same?

- John Hagel: That sets the stage for a new way of organizing the gig economy. We’re going to begin to see impact groups forming and coming together into broader networks that will help them to learn even faster. That’s where guilds come in.

- Bryon Moyer: Nakamura started by reminding us that 5G went live just this last March and that it will be the dominant technology through the 2020s. He said that 6G is a technology for the 2030s...6G then will focus on solving social issues and a closer fusing of the physical and the cyber-worlds, enabled by an expanded set of higher-bandwidth communications options and by more sophisticated fusion between the physical and cyber realms.

- Bruce Schneier: But inefficiency is essential security, as the COVID-19 pandemic is teaching us. All of the overcapacity that has been squeezed out of our healthcare system; we now wish we had it. All of the redundancy in our food production that has been consolidated away; we want that, too. We need our old, local supply chains -- not the single global ones that are so fragile in this crisis. And we want our local restaurants and businesses to survive, not just the national chains. We have lost much inefficiency to the market in the past few decades. Investors have become very good at noticing any fat in every system and swooping down to monetize those redundant assets. The winner-take-all mentality that has permeated so many industries squeezes any inefficiencies out of the system.

- Frederic Lardinois: Indeed, Workers Unbound, Cloudflare argues, is now significantly more affordable than similar offerings. “For the same workload, Cloudflare Workers Unbound can be 75% percent less expensive than AWS Lambda, 24 percent less expensive than Microsoft Azure Functions, and 52 percent less expensive than Google Cloud Functions,”

- Slack: The broken monitoring hadn’t been noticed partly because this system ‘just worked’ for a long time, and didn’t require any change.

- @johncutlefish: “Scaling” is very different from breaking things up and making them functional “at scale”. Which is why big lumbering orgs can’t just copy the structure of rapidly scaled/scaling new ventures and expect it to work.

- @ShaiDardashti: "AMZN has invested $18B over the past 10 years to turn every major cost into a source of revenue." - @chamath on $AMZN (2016);

- Tsuyoshi Hirashima: Cells are tightly connected and packed together, so when one starts contracting from ERK activation, it pulls in its neighbors. This then caused surrounding cells to extend, activating their ERK, resulting in contractions that lead to a kind of tug-of-war propagating into colony movement

- Necessary-Space: Scaling a web services is trivial if you just have one database instance: Write in a compiled fast language, not a slow interpreted language. Bump up the hardware specs on your servers. Distribute your app servers if necessary and make them utilize some form of in-memory LRU cache to avoid pressuring the database. Move complicated computations away from your central database instance and into your scaled out application servers. A single application server on a beefed up machine should have no problem handling > 10k concurrent connections.

- snoob2015: People usually jump into redis when it comes to cache. IMO, if the traffic is fit inside a server, just use a caching library (in-process cache?) in your app ( for example, I use java caffeine). It doesn't add another network hop, no serialization cost, easier to fine tune. I added caffeine into my site and the cpu goes from 50% to 1% in no time, never have another perf problem since then.

- throwawaymoney666: I've watched our Java back-end over its 3 year life. It peaks over 4000 requests a second at 5% CPU. No caching, 2 instances for HA. No load balancer, DNS round robin. As simple as the day we went live. Spending a bit of extra effort in a "fast" language vs an "easy" one has saved us from enormous complexity. In contrast, I've watched another team and their Rails back-end during a similar timeframe. Talks about switching to TruffleRuby for performance. Recently added a caching layer. Running 10 instances, working on getting avg latency below 100ms. It seems like someone on their team is working on performance 24/7.

- Schneier: Class breaks are security vulnerabilities that break not just one system, but an entire class of systems. They might exploit a vulnerability in a particular operating system that allows an attacker to take remote control of every computer that runs on that system's software. Or a vulnerability in internet-enabled digital video recorders and webcams that allows an attacker to recruit those devices into a massive botnet. Or a single vulnerability in the Twitter network that allows an attacker to take over every account.

- Sabine Hossenfelder: The brief summary is that if you hear something about a newly proposed theory of everything, do not ask whether the math is right. Because many of the people who work on this are really smart and they know their math and it’s probably right. The question you, and all science journalists who report on such things, should ask is what reason do we have to think that this particular piece of math has anything to do with reality. “Because it’s pretty” is not a scientific answer. And I have never seen a theory of everything that gave a satisfactory scientific answer to this question.

- DANIEL OBERHAUS: Autonomy is critical for Perseverance’s mission. The distance between Earth and Mars is so large that it can take a radio signal traveling at the speed of light up to 22 minutes to make a one-way trip. The long delay makes it impossible to control a rover in real time, and waiting nearly an hour for a command to make a round trip between Mars and the Earth isn’t practical either...Most tests of Perseverance’s navigation algorithms were tested in virtual simulations, where the rover team threw every conceivable scenario at the rover’s software to get an idea of how it would perform in those situations

- cactus2093: The companies I've seen succeed were 100% focused on shipping their product to customers. Not 90% focused on customers and 10% focused on code quality, but 100% focused. They'd rather have to spend 30 engineer-days a few years from now fixing an issue if they get to that point than spend 3 hours getting it right upfront. As an engineer that goes against every instinct I have, it really seems like spending a couple hours upfront must be a better use of time. It seems like it should be possible to spend 10% of your time setting yourself up well for the future, that's still just a rounding error of your time. And then if you do survive another few years, you'll have a huge leg up on other series B or C stage competitors if you're not hindered by a lot of tech debt at that point. But from a capitalist perspective, it's probably not so crazy. If you are working with a $200,000 seed round in the beginning, and an engineer costs $80,000 a year, 3 hours of their time costs $115 which is 0.06% of your funds. And more importantly, that $200k is maybe enough for a year of runway, so 3 hours is 0.14% of the time you have to live given a 40-hour a week year (or 0.07% of an 80 hour a week year). Every bit of that starts to add up. Whereas by the time you're a later-stage company and you've raised, say, $40 million dollars and are paying engineers $150k, 30 engineer-days of work is $17,300 but that's only 0.04% of the money you've raised plus your runway is now approaching infinity if you're close to profitable.

- amdelamar: Eventually you get to enjoy deprecating old services as much as building new ones, simply because you never have to teach others about them again.

- LinkedIn: For those considering a similar migration, a key piece of advice we’d like to share is to defer timelines until you are certain about your throughput. Our initial calculations were based on the ideal performance of our databases, and we soon found that real-world differences in hardware, the extended duration of the migration and more, caused multiple revisions to our timelines. In the end, this entire data migration effort took us nearly six months to complete. It’s important to remember that a data migration is a marathon, not a sprint.

- Khan Academy: Our iOS and Android apps share a single codebase [using React Native], with engineers specializing in features of the app, rather than platform. This means we’re way better about improving the quality of a given feature over time, and we can make incremental improvements to features, rather than feeling like we need to get everything in the initial version.

- Audrius Kucinskas: In our last AWS bill we found an additional $4k (total bill was 8k) increase what appeared to be NAT Gateway traffic cost. After tracking down recent pull requests, we found one obscure change that essentially added VPC(which routed all traffic through NAT GW) to a global `serverless.yml` scope in order to access MongoDB. This routed all the traffic of every lambda in that stack through NAT. One of those lambdas was an ML lambda which was downloading and uploading Models to and from S3. Needless to say, paying NAT GW traffic price for S3 traffic is not fun.

- Matt Lacey: You've only added two lines - why did that take two days! It might seem a reasonable question, but it makes some terrible assumptions: lines of code = effort; lines of code = value; all lines of code are equal. None of those are true.

- cpnielsen: We have been running our own self-hosted kubernetes cluster (using basic cloud building blocks - VMs, network, etc.), starting from 1.8 and have upgraded up to 1.10 (via multiple cluster updates). First off, don’t ever self-host kubernetes unless you have at least one full-time person for a small cluster, and preferably a team. There are so many moving parts, weird errors, missing configurations and that one error/warning/log message you don’t know exactly what means (okay, multiple things). From a “user” (developer) perspective, I like kubernetes if you are willing to commit to the way it does things. Services, deployments, ingress and such work nicely together, and spinning up a new service for a new pod is straightforward and easy to work with. Secrets are so-so, and you likely want to do something else (like Hashicorp Vault) unless you only have very simple apps.

- Mark Litwintschik: Many believe that for near-instant analytics on billions of records you'd need dedicated Linux clusters, several GPUs or proprietary Cloud offerings. Some of my fastest benchmarks were run on such environments. But in 2020, an off-the-shelf MacBook Pro using OmniSciDB (formerly MapD) can happily do the job.

- Wisdom: Dual-channel memory has measurable performance improvement over single-channel memory. With DDR5 adopting 2x 32-bit channels per DIMM, dual-channel will likely act more like a quad-channel when compared to a dual-channel DDR4 with 1x 64-bit channel per DIMM. I fully expect DDR5 to increase FPS by a noticeable amount. Plus, DDR5-4800 starting point is quite a bit faster than the officially supported DDR4-3200.

- Tel Aviv University: We discovered that brain connectivity — namely the efficiency of information transfer through the neural network — does not depend on either the size or structure of any specific brain," says Prof. Assaf. "In other words, the brains of all mammals, from tiny mice through humans to large bulls and dolphins, exhibit equal connectivity, and information travels with the same efficiency within them. We also found that the brain preserves this balance via a special compensation mechanism: when connectivity between the hemispheres is high, connectivity within each hemisphere is relatively low, and vice versa."

Useful Stuff:

- Substitute manufacturing accuracy with computation, the latter being enormously less costly. What we learned from 100m smartphones: Optimizing between strict performance specifications, pressure to source from lower cost battery manufacturers in China, and absolute safety is no longer exclusively in the realm of materials. Instead it demands battery intelligence and smart software...auto OEMs now rank battery intelligence software as critical to their systems. OEMs are also becoming more involved in the design and manufacturing of batteries...So what can intelligent battery management software do? Here’s one representative data point from our results. Our average longevity over 100 million smartphones shipping with our solutions is 1,900 cycles at 25 °C, and an incredible 1,300 cycles at the punishing temperature of 45 °C. Otherwise, an average smartphone will last between 500 and 1,000 cycles...Then innovation struck in the form of cameras embedded in smartphones. These used cheap plastic lenses but corrected for their optical deficiencies using software. Images from modern smartphone cameras can surpass the quality of those from expensive DSLR cameras. The camera industry showed that it can substitute manufacturing accuracy with computation, the latter being enormously less costly...We use a similar philosophy to improve the safety of batteries. We recognize that manufacturing defects are part of building batteries. We use predictive algorithms to identify these defects long before they become a safety hazard, then manage the operation of the battery to reduce the risk of a failure.

- Papers and videos from USENIX ATC '20 Technical Sessions are now available. You might like Serverless in the Wild: Characterizing and Optimizing the Serverless Workload at a Large Cloud Provider: in this paper, we first characterize the entire production FaaS workload of Azure Functions. We show for example that most functions are invoked very infrequently, but there is an 8-order-of-magnitude range of invocation frequencies. Using observations from our characterization, we then propose a practical resource management policy that significantly reduces the number of function cold starts, while spending fewer resources than state-of-the-practice policies. Or Faasm: Lightweight Isolation for Efficient Stateful Serverless Computing. Or Fine-Grained Isolation for Scalable, Dynamic, Multi-tenant Edge Clouds.

- After experiencing the troubles of a system built from 2,200 microservices, Uber finds a middle-way. Introducing Domain-Oriented Microservice Architecture.

- "Our goal with DOMA is to provide a way forward for organizations that want to reduce overall system complexity while maintaining the flexibility associated with microservice architectures."

- "The critical insight of DOMA is that a microservice architecture is really just one, large, distributed program and you can apply the same principles to its evolution that you would apply to any piece of software."

- "By providing a systematic architecture, domain gateways, and predefined extension points, DOMA intends to transform microservice architectures from something complex to something comprehensible: a structured set of flexible, reusable, and layered components."

- "It’s not an exaggeration to say that Uber would not have been able to accomplish the scale and quality of execution that we maintain today without a microservice architecture. However, as the company grew even larger, 100s of engineers to 1000s, we began to notice a set of issues associated with greatly increased system complexity."

- "Understanding dependencies between services can become quite difficult, as calls between services can go many layers deep. A latency spike in the nth dependency can cause a cascade of issues upstream. "

- The core principles and terminology associated with DOMA are:

- Instead of orienting around single microservices, we oriented around collections of related microservices. We call these domains.

- We further create collections of domains which we call layers.

- We provide clean interfaces for domains that we treat as a single point of entry into the collection. We call these gateways.

- Finally, we establish that each domain should be agnostic to other domains, which is to say, a domain shouldn’t have logic related to another domain hard coded inside of its code base or data models.

- The central conflict of software has always been what to put where. You might even call that software's Original Sin. Before that decision is made we live in the Garden of Abstraction, where everything is beautiful. Bite the apple and there's the pain of the fall. Complexity. Brittleness. Bugs. Latency. Friction. Chaos. We think we can get back to the garden by creating rules that we call methodologies, but it never quite works. In our soul we are always trying to get back to the garden. Always creating more and different rules. But that ideal is unattainable. So we create moral systems that allow us to work together without too much strife. And that's what Uber has successfully done here.

- Also, Building Domain Driven Microservices from Walmart Labs.

- Fun discussion of Data Structures & Algorithms I Actually Used Working at Tech Companies. Most programming is grunt work, but sometimes you get to sparkle.

- Have you had that nagging feeling that clouds are no longer competing on price? You're right. IaaS Pricing Patterns and Trends 2020: Furthermore, the abatement of downward pricing pressure continues. When controlling for instance size, prices for standard infrastructure have largely leveled out over the last few years. Providers are largely choosing not to compete using price-based differentiation on their base compute offerings...Standard base infrastructure is reaching a commodity status. While pricing of these instances is still important, it is no longer the primary point of competition for most cloud providers.

- Nicely done. Systems design for advanced beginners. There's a lot there. If you know someone who needs to grasp the complexity of a real system this is great place to start. Also, Inside The Muse Series. Also also, The Cadence: How to Operate a SaaS Startup.

- Scaling to handle COVID induced traffic is no Picnic. Just kidding. Scaling Picnic systems in Corona times.

- Our systems run on AWS. Kubernetes (EKS) is used to run compute workloads, and for data storage we use managed services like Amazon RDS and MongoDB Atlas.

- [Services] communicate with REST over HTTP, and most of our services are implemented on a relatively uniform Java 11 + Spring Boot stack.

- The highest traffic peaks occur when new delivery slots are opened up for customers. People start anticipating this moment, and traffic surges so quickly that the Autoscaler just can’t keep up. Since we know the delivery slot opening moments beforehand, we combined the HPA with a simple Kubernetes CronJob. It automatically increases the `minReplicas` and `maxReplicas` values in the HPA configuration some time before the slots open.

- Just scaling pods isn’t enough: the Kubernetes cluster itself must have enough capacity across all nodes to support the increased number of pods. Costs now also come into the picture: the more and heavier nodes we use, the higher the EKS bill will be. Fortunately, another open source project called Cluster Autoscaler solves this challenge. We created an instance group that can be automatically scaled up and down by the Cluster Autoscaler based on utilization.

- Combining HPA and Cluster Autoscaler helped us to elastically scale the compute parts of our services. However, the managed MongoDB and RDS clusters also needed to be scaled up. Scaling up these managed services is a costly affair, and cannot be done as easily as scaling up and down Kubernetes nodes.

- A somewhat bigger change we applied for some services was to provision them with their own MongoDB clusters.

- So what was causing the slowdown of all these user requests? Finally, a hypothesis emerged: it might be a connection pool starvation issue of the Apache HTTP client we use to make internal service calls. We could try to tweak these settings and see if it helps, but in order to really understand what’s going on, you need accurate metrics.

- The best internal service call is the one you never make. Through careful log and code analysis, we identified several calls that could be eliminated, either because they were duplicated in different code paths, or not strictly necessary for certain requests.

- Again, metrics helped locate high-volume service calls where introducing caching would help the most.

- Part of our caching setup also worked against us during scaling events. One particular type of caching we use is a polling cache...To prevent such a ‘thundering herd’, we introduced random jitter in the schedule of polling caches. This smoothens the same load over time.

- During these first weeks, we had daily check-ins where both developers and infrastructure specialists analyzed the current state and discussed upcoming changes. Improvements on both code and infrastructure were closely monitored with custom metrics in Grafana and generic service metrics in New Relic

- Scaling to 100k Users.

- Nearly every application, be it a website or a mobile app - has three key components: an API, a database, and a client (usually an app or a website).

- 10 Users: Split out the Database Layer.

- 100 Users: Split Out the Clients.

- 1,000 Users: Add a Load Balancer. We’re going to place a separate load balancer in front of our web client and our API. This means we can have multiple instances running our API and web client code. What we also get out of this is redundancy.

- 10,000 Users: CDN

- 100,000 Users: Scaling the Data Layer. Caching. Add read replicas.

- I'm not sure people understand that the internet is a network of networks. You can't break up the internet. It's not a single thing. The internet was separated into networks based on different underlying technologies, now it looks like the internet will be separated into networks governed by different policies. If China creates their own network behind the Great Firewall and connects it to the internet through some sort of gateway, they are still on the internet. What dies is the dream of a free and open internet, not the internet itself. China will do to the internet what they have done to capitalism: freedom turns out to be orthogonal to both.

- Two Weeks. Covers in detail HEY's mollification of our Apple overloards.

- Jason Fried related his Atlas Shrugged moment where he contemplated retirement because he didn't "want to be in this industry if I can’t run my own business my own way." But of course, since this is real life—he compromised. Nobody needs email in a gulch.

- After that it gets more interesting. When a product is taking off big there are a lot of problems to solve.

- How do you deal with bug fixes? Don't do anything in a bad state of mind. It's difficult just not to go try and fix everything. Watch things as they come in. Look for the big things and identify patterns.

- How do you deal with support? You hire temps, but that means you need an onboarding process for a new product. Not easy.

- They created a bulkhead between the farcical temporary email accounts so abuses in that area wouldn't impact paying customers.

- HEY is Basecamp's first cloud product and as advertised, the cloud allowed them to scale in days instead of weeks.

- Wait until you're ready to take on Goliath. David: The company we had in 2010 was in no position to take on Gmail. We didn’t have the capacity. We didn’t have the experience, we didn’t have the skills. We didn’t have the ambition. After 20 years of building software, 16 years of running Basecamp, we were ready.

- Minecraft has been hit with the new Window's Tax—the Azure Tax. Microsoft-owned Minecraft will stop using Amazon's cloud. I'm sure they have nothing better to do.

- Twitter uses and interesting form of client side (not UI) load balancing. Deterministic Aperture: A distributed, load balancing algorithm

- To balance requests, we use a distribution strategy called power of two choices (P2C). This is a two-step process: For every request the balancer needs to route, it picks two unique instances at random. Of those two, it selects the one with the least load.

- The key result from Mitzenmacher's work is that comparing the load on two randomly selected instances converges on a load distribution that is exponentially better than random, all while reducing the amount of state (e.g., contentious data structures) a load balancer needs to manage.

- Deterministic aperture has been a quantum leap forward for load balancing at Twitter. We’ve been able to successfully operate it across most Twitter services for the past several years and have seen great results

- We see a marked improvement in request distribution with a 78% reduction in relative standard deviation of load.

- All while reducing the connection count drastically. This same service topology saw a 91% drop (from ~280K to ~25K) in connection count during the upgrade

- Any similarities between software testing and COVID testing? You be the judge. TWiV 640: Test often, fast turnaround, with Michael Mina. The big idea here is that instead of expensive COVID tests that find any hint of the virus, what we should be doing is investing in cheap tests that identify when people are infectious. If people have had COVID or are getting over COVID it doesn't matter. What matters is if they can shed virus and get other people sick. To detect that all we need are cheap $1 tests that people can test with everyday. If you test positive stay home. The other big idea is that in the US tests are judged to be effective if they are 80% as accurate as PCR tests. That shouldn't be the threshold. The threshold should be if the test can identify if a person is infectious. That level of accuracy is far cheaper and can be made ubiquitous.

- Engineering Failover Handling in Uber’s Mobile Networking Infrastructure:

- Uber’s edge infrastructure comprises front-end proxy servers that terminate secure TLS over TCP or QUIC connections from the mobile apps. The HTTPS traffic originating from the mobile apps over these connections are then forwarded to the backend services in the nearest data-center using existing connection pools. The edge infrastructure spans across public cloud and privately managed infrastructures

- On the mobile side, a core component of our networking stack is the failure handler that intelligently routes all mobile traffic from our applications to the edge infrastructure. Uber’s failure handler was designed as a finite state machine (FSM) to ensure that the traffic sent via cloud infrastructure is maximized. During times when the cloud infrastructure is unreachable, the failover handler dynamically re-routes the traffic directly to Uber’s data centers without significantly impacting the user’s experience. After rolling out the failover handler at Uber, we witnessed a 25-30 percent reduction in tail-end latencies for the HTTPS traffic when compared to previous solutions. The failover handler has also ensured reasonably low error rates for the HTTPS traffic during periods of outages faced by the public cloud infrastructure. Combined, these performance improvements have led to better user experiences for markets worldwide.

- Videos from fwd:cloudsec 2020 are now available.

- Digital Ocean would like you to know they try harder: A CDN Spaces ($5/month) reduces latency and increases scalability by offloading static content like images, CSS, etc. A fully managed Load Balancer ($10/month) increases availability by distributing traffic between two Droplets ($10/month for 2 Droplets). A free Cloud Firewall makes your website secure as it blocks malicious traffic. You also adopt some best practices and start using Volumes Block Storage ($5/month for 50GiB) to store data separated from your Droplets, reducing the chance of data loss in the case of hardware failure. Being a responsible builder, you also decide to maintain a backup schedule ($2/month) so you can easily revert to an older state of the Droplet in case things go wrong. Since managing databases at this scale may not be your favorite activity (or the area where you feel your value lies), you decide to decouple your database from your application server by utilizing our Managed Databases ($15/month). While this seems like a ton of products in use, the overall cost of this configuration on DigitalOcean is still as little as $47 per month. Two-node Kubernetes cluster ($20/month). We charge only ~10-20% of what other clouds do for bandwidth.

- Running spot instances effectively with Amazon EKS: Coming out of our first two weeks running the app with a real production traffic load, we’re sitting at ~90% of our compute running on spot instances. For HEY, we are able to get away with running the entire front-end stack (OpenResty, Puma) and the bulk of our async background jobs (Resque) on spot.

- Rate Limiting, Rate Throttling, and how they work Together: Rate limiting is a server-side concept. If you’re hosting a service or an API, you want people who are consuming your service to spread the load predictably. This strategy helps with capacity planning and also helps to mitigate certain types of abuse. If you have an API, then you most likely should protect it by rate-limiting requests. In the beginning, there was an API server, and it was good. But then some jerk went and added rate-limiting code to it, which made the script you wrote crash. Enter: Rate throttling. Rate throttling is a client-side concept. Imagine you’re consuming some API endpoints. You can make 1000 requests an hour...When rate limiting is implemented on the server, and a client is provided with rate throttling, then everyone mostly gets what they want. People making requests aren’t getting a ton of errors, and the admins wearing pagers on the server-side can sleep better at night.

- Predicting and Managing Costs in Serverless Applications. Serverless developers cut the new application development lifecycle down from 6 months to 1 month. The average productivity increase for development teams is 33%.

- Race Conditions/Concurrency Defects in Databases: A Catalogue: If a transaction can read data written by another transaction that is not yet committed (or aborted), this read is called a “Dirty Read”; If a transaction can overwrite data written by another transaction that is not yet committed (or aborted), this read is called a “Dirty Write”; A transaction (typically, long running) may read different parts of the database at different points in time causing a Read Skew; Two or more concurrent transactions might read the same value from the database, modify it, and write it back, not including the modification made by the other concurrent transactions. This could lead to updates getting lost; Write Skew is a generalisation of the Lost Update problem. It is also caused by a “read-modify-write” cycle, but the objects getting modified could be different.

- What is a High Traffic Website?

- "I personally can’t think of a low-traffic online service I’ve worked on that couldn’t have been implemented cleanly enough using a simple, monolithic web app (in whatever popular language) in front of a boring relational database, maybe with a search index like Xapian or Elasticsearch. Many major websites aren’t much different from that. It’s not the only valid architecture, but it’s a tried-and-tested one."

- Performance is all about how well (usually how fast) the system can handle a single request or unit of work.

- Scalability is about the volume or size of work that can be handled.

- Scalability and performance often get confused because they commonly work together. For example, suppose you have some online service using a slow algorithm and you improve perfomance by replacing the algorithm with another that does the same job with less work. Primarily that’s a performance gain, but as long as the new algorithm doesn’t use more memory or something, you’ll be able to handle more requests in the same time.

- The first level is for sites that get well under 100k dynamic requests a day. Most websites are at this level, and a lot will stay that way while being totally useful and successful. They can also pose complex technical challenges, both for performance and functionality, and a lot of my work is on sites this size. But they don’t have true scalability problems (as opposed to problems that can be solved purely by improving performance).

- The next level is after leaving the 1M dynamic requests a day boundary behind. I think of sites at this level as being high traffic. Let me stress that that’s a technical line, not a value judgment or ego statement

- What happens with sites that get even bigger? Once the problems at one set of bottlenecks are fixed, the site should just scale until it hits a new set of bottlenecks, either because the application has changed significantly, or just because of a very large increase in traffic. For example, once the application servers are scalable, the next scaling bottleneck could be database reads, followed by database writes. However, the basic ideas are the same, just applied to a different part of the system.

- Scaling Kafka Mirroring Pipelines at Wayfair: We will explain how we transitioned from configuring, operating, and maintaining 212 distinct Kafka Mirror Maker clusters to only 14 Brooklin clusters to support 33 local Kafka clusters and 17 aggregate Kafka clusters. Upon transitioning, we used 57 percent fewer CPUs while gaining new capabilities for successfully managing our Kafka environment. We carried out this migration with no global downtime in an environment composed of thousands of machines and many thousands of CPUs, processing over 15 million messages per second.

- Tumblr is Rolling out a New Activity Backend. The old system: The current backend that powers every blog’s activity stream is pretty old and uses an asynchronous microservice-like architecture which is separate from the rest of Tumblr. It’s written in Scala, using HBase and Redis to store its data about all of the activity happening everywhere on Tumblr. The new system: written in PHP, using MySQL and Memcached for data storage.

- Power of DNA to Store Information Gets an Upgrade: DNA is about 5 million times more efficient than current storage methods. Put another way, a one milliliter droplet of DNA could store the same amount of information as two Walmarts full of data servers. And DNA doesn’t require permanent cooling and hard disks that are prone to mechanical failures. There’s just one problem: DNA is prone to errors. “We found a way to build the information more like a lattice,” Jones said. “Each piece of information reinforces other pieces of information. That way, it only needs to be read once.”

- Our AWS bill is ~ 2% of revenue. Here's how we did it: Use lightsail instances (20$ per instance) instead of EC2 instances (37$ per instance); Use a lightsail database (60$ per DB) instead of RDS (200$ per DB); Use a self hosted redis server on a compute instance (40$) instead of ElastiCache (112$); If feasible, use a free CDN (cost savings depends on traffic size); Use a self hosted NGINX server (20$ fixed cost) instead of ELB (cost depends on traffic and usage)

- We keep hearing about IoT, but what does it look like? John Deere with a truly fascinating real-life evolution of an IoT data processing system. GOTO 2020 • Batching vs. Streaming - Scale & Process Millions of Measurements a Second. In the end they created something like a Lambda architecture.

- In 2014 they started capturing equipment data: RPM, Oil Temp, etc. Data is aggregated into a 30 minute summary of what happened on the machine which is then sent to the cloud. Across the entire active fleet of machines they consume about 250 records a second with about 20 measurements per record, so about 5000 measurements per second.

- Their architecture was AWS Lambda, Kinesis, DynamoDB, S3, Scala. Data was aggregated by machine into 6 hour buckets of time, then days, weeks, and months, years, and lifetime.

- It worked. It scaled. It handled spikes. They didn't like how Lambda forced logic to spread out all over the system which made it hard to troubleshoot and debug. Logging was not so good.

- In 2016 they started streaming agronomic data.That requires handling 75,000 measurements a second. Data is aggregated into spatial buckets. The Bing maps tile system is used at level 21 which breaks down space into postage stamp sized chunks.

- Now dealing with operational data, with what's happening in the field and what's happening in the interaction with the field.

- For example, each row has its own sensor. Data is captured once a second. So for a 16 row unit they capture 16 * 1 * 15 sensor readings = 240 measurements per machine / second. That's 5000 records per second or 75K measurements per second total.

- What's a typical field look like? Depends where you are. In central Illinois a typical field is 48 acres. That's 40 football fields or a quarter mile long. That field grows 1.5 million corn plants. 2 billion kernels. It's spatially divided into 100,000 3'x3' tiles. In western Kansas you have 1000 acre fields. In Brazil or the Ukraine fields are 10,000 acres.

- Machines aren't always connected. Older machines load data onto a thumbdrive. Even new machines may go out of coverage. Data has to be queued up. So they must handle streams, micro-batches of data, and large batches of data.

- As data comes in it is statefully processed. They don't want to get the wrong data with the wrong field or wrong operation. They don't want to process data twice. They need to handle data that arrives later. It's complex.

- It sorta worked. Customers were sort-of happy. Data was being lost. They had to store 100s of TB of data because they spacial requirements were so high. It's not really scaling. It's struggling. It's hard to maintain.

- In 2017 they need to handle 255K measurements per second. They moved away from Lambda. They went with batch processing. AWS firehose, EMR/Spark, S3, Scala.

- This worked. Lambda code moved right over. Data quality was good. It scaled. Customers were sort of happy. The problem was it was slow. It took 3 hours to process the data. Customers wanted the data in 10 minutes. Storage requirements were still huge at ~ 7 PB, even though they only processed 7% of their data. Instance contention problems. Working with spot clusters could fail. It was more expensive than Lambda.

- In 2018 streaming requirements are now 12 million measurements per second. Why? They now sample 5 times a second. Moved to 32 row units. Each sample is 15 sensor readings. There are lots more machines capturing data. They can have 5,000 machines capturing data at once across the world. That's 12,000 million measurements per second.

- What do they do? They can't afford to prebuild and store it in S3. It's too expensive and too slow.

- They looked at how they use their data. Most of the data isn't ever looked at. Can they build just enough to respond to customer needs? Meta data was moved to Aurora. Summaries and totals were moved to Elasticsearch so they can do aggregations on the fly.

- New stack: AWS Kinesis, EMR/Fink, Aurora, S3, Scala. Fink allows you to do stateful stream processing. Sensor data and session data is joined. Spatial positions are computed. Using the Key By operation in Fink using a window, a session, a tile key, and the results are stored in S3 and Aurora.

- This is good. Storage is down to 2 PB for all customers data. 2% of what it would have been. They've processed 67 trillion measurement multiple times. It's fast. Customers have data within minutes. The problem is Flink doesn't auto-scale, neither does EMR. Hard to manage. With any kind of back-pressure state would just explode. More expensive than batch. They had a very large cluster running 24x7. API response times were slower because a lot of compute was pushed to on-demand.

- In 2020 they are looking at 5x the number of machines. The volume of data keeps going up. What will they do? A little bit of everything. Does all data need to have the same SLA? Can they move to batch for some data? Use Lambda to preprocess data when state isn't required.

- Now instead of turning everything into a stream they are taking large batches, micro-batches, and streams and turning them into batches.

- New stack: AWS Kinesis, ECS, S3, ElasticSearch, S3. This is for streaming batch operation. The Flink system is till running. They are back to pre-rasterizing operations, but they are smarter about it. API load times have moved from 15 seconds down to one second. Still fast, about 15 seconds per operation. Simpler to operate than Flink. ECS autoscales so they can keep up with the stream. The bad is they now manage two ingestion engines. They only pre-rasterize data they believe their customers will actually use, everything else must use the slower APIs that the Flink app produces. It's expensive. Uses a lot of S3 and a lot of compute.

- Beyond 2020. Careful tuning of what is pre-generated. Re-architect the streaming pipeline again. Looking at GPUs, timeseries databases, and for something else.

- Trends: more machines, more sensors, connected more often, higher density data, higher frequency data, customers was data faster, and customers want actionable insights.

- I wonder if they could do more processing on each site rather than centralize all the data? See machines as compute engines rather than dumb data sample producers. It seems pushing simpler systems to the edge would be more practical than processing all the world's data in one place. Failing that, how about regional systems? And if they could store data on the machine, turning each machine into a storage endpoint, maybe all the data wouldn't have to be in S3.

Soft Stuff:

- alex-petrenko/sample-factory (paper, article) : Codebase for high throughput asynchronous reinforcement learning.

- GhostDB: a distributed, in-memory, general purpose key-value data store that delivers microsecond performance at any scale. GhostDB is designed to speed up dynamic database or API driven websites by storing data in RAM in order to reduce the number of times an external data source such as a database or API must be read.

Video Stuff:

- The Bit Player: In 1948, Claude Shannon introduced the notion of a "bit", laying the foundation for the Information Age. His ideas power our modern life, influencing computing, genetics, neuroscience and AI. Mixing contemporary interviews, archival film, animation and dialogue from interviews with Shannon, The Bit Player tells the story of an overlooked genius with unwavering curiosity.

- Thomas Edison's Stunning Footage of the Klondike Gold Rush. Money was made by the suppliers. Only 30,000 made it the fields, exracting 75 tons of gold. Only a few made money.

Pub Stuff:

- Matchmaker Paxos: In this paper, we present Matchmaker Paxos and Matchmaker MultiPaxos, a reconfigurable consensus and state machine replication protocol respectively. Our protocols can perform a reconfiguration with little to no impact on the latency or throughput of command processing; they can perform a reconfiguration in one round trip (theoretically) and a few milliseconds (empirically)

- Learning Graph Structure With A Finite-State Automaton Layer: In this work, we study the problem of learning to derive abstract relations from the intrinsic graph structure. Motivated by their power in program analyses, we consider relations defined by paths on the base graph accepted by a finite-state automaton. We show how to learn these relations end-to-end by relaxing the problem into learning finite-state automata policies on a graph-based POMDP and then training these policies using implicit differentiation. The result is a differentiable Graph Finite-State Automaton (GFSA) layer that adds a new edge type (expressed as a weighted adjacency matrix) to a base graph.

- Scalable data classification for security and privacy: The approach described here is our first end-to-end privacy system that attempts to solve this problem by incorporating data signals, machine learning, and traditional fingerprinting techniques to map out and classify all data within Facebook. The described system is in production achieving a 0.9+ average F2 scores across various privacy classes while handling a large number of data assets across dozens of data stores.

- Papers from ISCA 2020 (International Symposium on Computer Architecture) are now available.

- There’s plenty of room at the Top: What will drive computer performance after Moore’s law? As miniaturization wanes, the silicon-fabrication improvements at the Bottom will no longer provide the predictable, broad-based gains in computer performance that society has enjoyed for more than 50 years. Software performance engineering, development of algorithms, and hardware streamlining at the Top can continue to make computer applications faster in the post-Moore era. Unlike the historical gains at the Bottom, however, gains at the Top will be opportunistic, uneven, and sporadic. Moreover, they will be subject to diminishing returns as specific computations become better explored.

- Demystifying the Real-Time Linux Scheduling Latency: Aiming at clarifying the PREEMPT_RT Linux scheduling latency, this paper leverages the Thread Synchronization Model of Linux to derive a set of properties and rules defining the Linux kernel behavior from a scheduling perspective. These rules are then leveraged to derive a sound bound to the scheduling latency, considering all the sources of delays occurring in all possible sequences of synchronization events in the kernel.

- Systems Performance: Enterprise and the Cloud, 2nd Edition: The second edition adds content on BPF, BCC, bpftrace, perf, and Ftrace, mostly removes Solaris, makes numerous updates to Linux and cloud computing, and includes general improvements and additions. It is written by a more experienced version of myself than I was for the first edition, including my six years of experience as a senior performance engineer at Netflix. This edition has also been improved by a new technical review team of over 30 engineers.

- LogPlayer: Fault-tolerant Exactly-once Delivery using gRPC Asynchronous Streaming: In this paper, we present the design of our LogPlayer that is a component responsible for fault-tolerant delivery of transactional mutations recorded on a WAL to the backend storage shards. LogPlayer relies on gRPC for asynchronous streaming. However, the design provided in this paper can be used with other asynchronous streaming platforms.

- Debugging Incidents in Google's Distributed Systems: SWEs are more likely to consult logs earlier in their debugging workflow, where they look for errors that could indicate where a failure occurred. SREs rely on a more generic approach to debugging: Because SREs are often on call for multiple services, they apply a general approach to debugging based on known characteristics of their system(s). They look for common failure patterns across service health metrics (e.g., errors and latency for requests) to isolate where the issue is happening, and often dig into logs only if they're still uncertain about the best mitigation strategy. Newer engineers are more likely to use recently developed tools, while engineers with extensive experience (ten or more years running complex, distributed systems at Google) tend to use more legacy tools. Scale and complexity. The larger the blast radius (i.e., its location(s), the affected systems, the importance of the user journey affected, etc.) of the problem, the more complex the issue. Underlying cause. On-callers are likely to respond to symptoms that map to six common underlying issues: capacity problems; code changes; configuration changes; dependency issues (a system/service my system/service depends on is broken); underlying infrastructure issues (network or servers are down); and external traffic issues.

Reader Comments (3)

Very useful.

A pleasure to read as always.

Hey Todd - one small corrections if possible:

- @tsimonite: Larry Page in 2004: "We want to get you out of Google and to the right place as fast as possible" Google in 2020: Google is the right place, no outward links for you.

^^^ the last part is duplicated

Thanks man!