Paper: XORing Elephants: Novel Erasure Codes for Big Data

Thursday, June 27, 2013 at 8:45AM


Erasure codes are one of those seemingly magical mathematical creations that, with the developments described in the paper XORing Elephants: Novel Erasure Codes for Big Data, are set to replace triple replication as the data storage protection mechanism of choice.

The result, says Robin Harris (StorageMojo) in an excellent article, Facebook’s advanced erasure codes: "WebCos will be able to store massive amounts of data more efficiently than ever before. Bad news: so will anyone else."

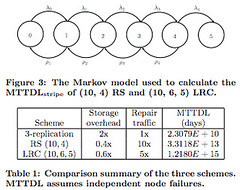

Robin says that with cheap disks, triple replication made sense and was economical. With ever bigger BigData, the overhead has become costly. But erasure codes have always suffered from unacceptably long repair times. This paper describes new Locally Repairable Codes (LRCs) that are efficient to repair in terms of both disk I/O and bandwidth:

These systems are now designed to survive the loss of up to four storage elements – disks, servers, nodes or even entire data centers – without losing any data. What is even more remarkable is that, as this paper demonstrates, these codes achieve this reliability with a capacity overhead of only 60%.
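To see where the 60% figure comes from, here is a rough back-of-the-envelope sketch. The block counts are assumptions not stated in this post: a Reed-Solomon layout with 10 data blocks and 4 parity blocks, and an LRC layout that stores 2 additional local parity blocks on top of that.

```python
from fractions import Fraction


def overhead(data_blocks: int, parity_blocks: int) -> Fraction:
    """Extra storage as a fraction of the original data size."""
    return Fraction(parity_blocks, data_blocks)


# Assumed layouts (illustrative, not taken from this post):
replication = overhead(1, 2)   # triple replication: 2 extra copies per block
rs = overhead(10, 4)           # RS: 10 data blocks + 4 parity blocks
lrc = overhead(10, 6)          # LRC: the RS layout + 2 stored local parities

# Relative growth in total stored blocks when moving from RS to LRC.
lrc_vs_rs = Fraction(10 + 6, 10 + 4) - 1

print(f"3x replication overhead: {float(replication):.0%}")
print(f"RS overhead:             {float(rs):.0%}")
print(f"LRC overhead:            {float(lrc):.0%}")
print(f"LRC extra storage vs RS: {float(lrc_vs_rs):.0%}")
```

Under these assumptions the LRC stores 16 blocks for every 10 blocks of data, which gives the 60% overhead quoted above, compared with 200% for triple replication.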

They examined a large Facebook analytics Hadoop cluster of 3000 nodes with about 45 PB of raw capacity. On average about 22 nodes a day fail, but some days failures could spike to more than 100.

LRC tests produced several key results:

- Disk I/O and network traffic were reduced by half compared to RS codes.

- The LRC required 14% more storage than RS, which is information-theoretically optimal for the obtained locality.

- Repair times were much lower thanks to the local repair codes.

- Reliability was much greater thanks to fast repairs.

- Reduced network traffic makes them suitable for geographic distribution.

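The intuition behind the halved repair traffic can be sketched with a plain XOR parity over a small group of blocks. This is a simplified stand-in, not the paper's actual code construction: the group size of 5, the block size, and the helper name `xor_blocks` are all assumptions made for illustration.

```python
import os
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of a list of equally sized byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


BLOCK_SIZE = 16  # bytes; tiny on purpose, real blocks are far larger
GROUP_SIZE = 5   # assumed number of data blocks covered by one local parity

# One local group: 5 data blocks plus their XOR parity.
data = [os.urandom(BLOCK_SIZE) for _ in range(GROUP_SIZE)]
local_parity = xor_blocks(data)

# Simulate losing one block, then rebuild it from the rest of the group.
lost = 2
survivors = [block for i, block in enumerate(data) if i != lost]
rebuilt = xor_blocks(survivors + [local_parity])

assert rebuilt == data[lost]

# Blocks that had to be read to repair a single lost block.
blocks_read = len(survivors) + 1
print(f"blocks read for local repair: {blocks_read}")
```

With a group of this size, repairing one lost block touches 5 blocks. A conventional Reed-Solomon repair over a stripe of 10 data blocks would typically read 10, which is in line with the roughly 2x reduction in disk I/O and network traffic reported above.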

I wonder if we'll see a change in NoSQL database systems as well?

Related Articles

- Erasure Coding vs. Replication: A Quantitative Comparison

- Ceph - a distributed object store.

Reader Comments (1)

Erasure codes are great - no doubt about that, but they do have a bunch of limitations. When erasure coding is used, only one copy of the data exists, and redundancy is provided by means of parities. So this affects read performance. Also, if you want to modify even a single bit, the parities need to be recomputed. These are some of the reasons why erasure codes are generally used on "cold data" - data that has not been touched (read/written) for a long time.

NoSQL systems and databases in general might store "hot data". Erasure codes might actually make it harder for these systems to scale since the read/write performance might be affected tremendously. It's all about finding the right tradeoff.

(I am one of the authors on the paper).