There’s a long history of donating spare compute cycles for worthy causes. Most of those efforts were started in the Desktop Age. Now, in the Cloud Age, how can we donate spare compute capacity? How about through a private spot market?

There are cycles to spare. Public Cloud Usage trends:

Maybe all that CapEx sunk into Reserved Instances can be put to some use? Maybe overprovisioned instances could be added to the resource pool as well? That’s a lot of power, Captain. How could it be put to good use?

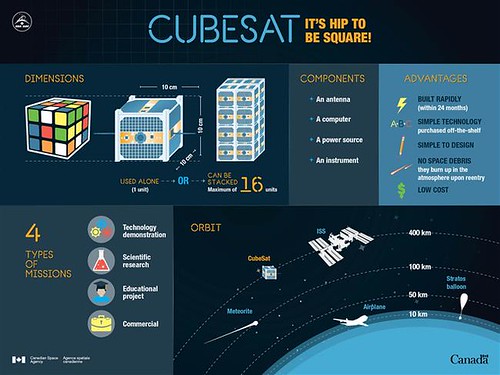

There is a need to crunch data. For science. Here’s a great example, as described in “This is how you count all the trees on Earth.” The idea is simple: count the number of trees from satellite pictures. It’s an embarrassingly parallel problem, perfect for the cloud. NASA had a problem. Their cloud is embarrassingly tiny: 400 hypervisors shared amongst many projects. Analysing all the data would take 10 months. An unthinkable amount of time in this Real-time Age. So they used the spot market on AWS.

The upshot? The test run cost a measly $80, which means that NASA can process data collected for an entire UTM zone for just $250. The cost for all 11 UTM zones in sub-Saharan Africa and the use of all four satellites comes in at just $11,000.
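The figures hang together if you assume the $250 covers one UTM zone as seen by one satellite. That reading is my assumption; the source only gives the totals. A quick check of the arithmetic:

```python
# Figures quoted above.
COST_PER_ZONE_USD = 250   # one UTM zone
UTM_ZONES = 11            # sub-Saharan Africa
SATELLITES = 4

# Assumption (not stated in the source): $250 is per zone, per satellite.
total_usd = COST_PER_ZONE_USD * UTM_ZONES * SATELLITES
print(f"${total_usd:,}")
```

Under that assumption the product matches the $11,000 quoted for the full run.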

“We have turned what was a $200,000 job into a $10,000 job and we went from 100 days to 10 days [to complete],” said Hoot. “That is something scientists can build easily into their budget proposals.”

That last quote, “That is something scientists can build easily into their budget proposals,” stuck in my craw.

Imagine how much science could get done if you didn’t have the budget proposal process slowing down the future. Especially when we know there are so many free cycles available that are already attached to well-supported data processing pipelines. How could those cycles be freed up to serve a higher purpose?

Netflix shows the way with their internal spot market. Netflix has so many cloud resources at their disposal, a pool of 12,000 unused reserved instances at peak times, that they created their own internal spot market to drive better utilization. The whole beautiful setup is described in “Creating Your Own EC2 Spot Market,” “Creating Your Own EC2 Spot Market -- Part 2,” and “High Quality Video Encoding at Scale.”

The win: By leveraging the internal spot market Netflix measured the equivalent of a 210% increase in encoding capacity.

Netflix has a long and glorious history of sharing and open sourcing their tools. It seems likely that once they perfect their spot market infrastructure, it could be made generally available.

Perhaps the Netflix spot market could be extended so unused resources across the Clouds could advertise themselves for automatic integration into a spot market usable by scientists to crunch data and solve important world problems.
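To make the idea concrete, here is a toy sketch of what such a pool might look like. It is not Netflix's design or any real API; `SparePool`, `advertise`, `borrow`, and `reclaim` are names I made up for illustration. Donors advertise idle instances, a science job borrows what it can get, and a donor can pull its capacity back at any time, which preempts the borrower just as a spot market would.

```python
from dataclasses import dataclass, field


@dataclass
class SparePool:
    """Toy pool of donated idle instances. Every name here is hypothetical."""
    idle: dict[str, int] = field(default_factory=dict)      # donor -> instances on offer
    borrowed: dict[str, int] = field(default_factory=dict)  # donor -> instances lent out

    def advertise(self, donor: str, count: int) -> None:
        """A donor offers `count` more unused instances to the pool."""
        self.idle[donor] = self.idle.get(donor, 0) + count

    def borrow(self, wanted: int) -> dict[str, int]:
        """Lend up to `wanted` instances, drawing on donors in the order they joined."""
        grant: dict[str, int] = {}
        for donor, free in self.idle.items():
            if wanted == 0:
                break
            take = min(free, wanted)
            if take:
                grant[donor] = take
                self.idle[donor] -= take
                self.borrowed[donor] = self.borrowed.get(donor, 0) + take
                wanted -= take
        return grant

    def reclaim(self, donor: str) -> int:
        """The donor needs its capacity back: withdraw its offer and return
        how many lent instances have to be preempted."""
        self.idle.pop(donor, None)
        return self.borrowed.pop(donor, 0)


pool = SparePool()
pool.advertise("video-team", 3)
pool.advertise("lab-b", 5)
grant = pool.borrow(6)            # takes 3 from video-team, then 3 from lab-b
preempted = pool.reclaim("lab-b") # lab-b wants its machines back
```

A real version would need pricing or priorities, security isolation, and a way for jobs to checkpoint before preemption. The sketch only shows the bookkeeping.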

Perhaps donated cycles could even be charitable contributions that could help offset the cost of the resource? My wife is a tax accountant and she says this is actually true, under the right circumstances.

This kind of idea has a long history with me. When AWS first started, I, like a lot of people, wondered: how can I make money off this gold rush? That was before we knew Amazon was going to make most of the tools to sell to the miners themselves. The idea of exploiting underutilized resources fascinated me for some reason. That is, after all, what VMs do for physical hardware: exploit the underutilized resources of powerful machines. And it is in some ways the idea behind our modern economy. Yet even today software architectures aren’t such that we reach anything close to full utilization of our hardware resources. What I wanted to do was create a memcached system that allowed developers to sell their unused memory capacity (and later CPU, network, storage) to other developers as cheap dynamic pools of memcached storage. Buyers would get their cache dirt cheap, and sellers could make some money back on underused resources. A very similar idea to the spot market notion. But without homomorphic encryption the security issues were daunting, even assuming Amazon would allow it. With the advent of the Container Age, sharing a VM is now way more secure, and Amazon shouldn’t have a problem with the idea if it’s for science. I hope.

Tuesday, July 25, 2017 at 9:19AM


In a really far ranging and insightful interview by Steve Peterson:
