The Next Scalability Hurdle: Massively Multiplayer Mobile AR

Wednesday, August 2, 2017 at 9:30AM

Many moons ago, in Building Super Scalable Systems: Blade Runner Meets Autonomic Computing In The Ambient Cloud, I said we still had scaling challenges ahead, that we've not yet begun to scale, that we still don't know how to scale at a planetary level.

That was 7 years ago. Now Facebook has 2 billion monthly users. There's no reason to think they can't scale an unimpressive 3.5x to handle the rest of the planet. WhatsApp is at one billion daily users. YouTube is at 1.5 billion monthly users.

So it appears we do know how to service a whole planet full of people (and bots). At least a select few companies with vast resources know how. We are still no closer to your average developer being able to field a planet-scale service. The winner-take-all nature of the Internet seems to fend off decentralization like it's a plague. Maybe efforts like Filecoin will turn the tide.

There's another area where we have scaling challenges: Massively Multiplayer Mobile AR (Augmented Reality). While AR has threatened to be the future for quite some time, it now looks like the future may be just around the virtual corner.

Apple's introduction of ARKit, a hit with developers, means that future will arrive sooner rather than later. One billion iPhone users make it so. Remember how, when the iPhone was introduced, the jump in data usage melted AT&T's network? This will be worse.

Pokémon Go had a little event recently that shows what incredible stress such systems will put on our infrastructure. No need to repeat the story; iMore has it all: Pokémon Go Fest: What happened and why; Pokémon Go Fest's big flop shows Niantic needs to think bigger; Pokémon Go Fest Chicago: The fun, the failure, and the legendary; UPDATED: Are AT&T's iPhone Problems Due to Network Configuration Errors?

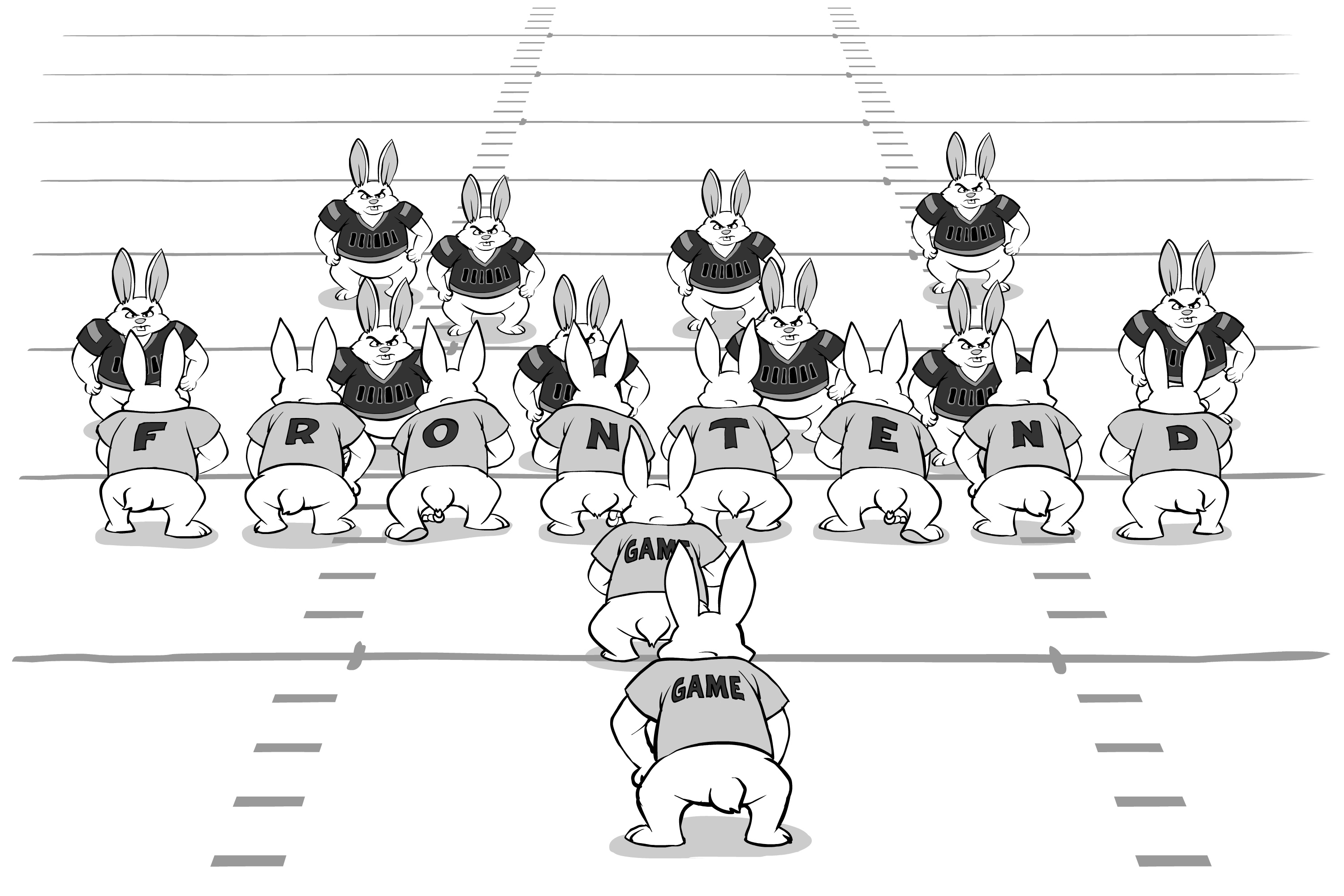

It's true, Pokémon Go has been well known for its scaling problems, but this was a planned event; shouldn't it have been handled better? No doubt. Still, concentrating 20,000 players in a single shard, in a "kill zone" as small as a park, is a challenge. Should they have brought in Cells on Wheels (COWs), used high-density WiFi, maybe put up microwave links to increase backhaul capacity? Yep, that seems reasonable. EM spectrum is a terrible thing to waste.

But what happens when Pokémon Go Fest is just what we call Tuesday? When everyone is using mobile AR? Every product in every store, every building, every sign, everything will have some sort of data driven overlay. There will be no chance to build special infrastructure. Infrastructure must be improved to handle the new loads. Hopefully 5G will come to the rescue.

Spectrum isn't the only problem; compute resources are a problem too. Pokémon Go isn't a particularly data-intensive game. It doesn't require a lot of interaction between users or constant communication with backend servers. What happens when we have multiple games like that all operating at once?

Pokémon Go seems like a poster child for edge computing. The entire shard could have been handled by a portable onsite datacenter with its own local communication infrastructure. An onsite datacenter combines low-latency compute with enough scale to handle the load. My guess is that the thundering herd problem that blocked players from connecting to the game would have disappeared. Players would have connected quickly to the local game servers and started playing with little muss or fuss. The same goes for game state.
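To make the edge-first idea a little more concrete, here is a minimal Python sketch of how a client might choose a game server, assuming it already has a round-trip-time measurement and a load figure for each candidate. The `GameServer` type, the `pick_server` function, the server names, and the 20 ms and 90% thresholds are all hypothetical; this is not how Niantic's matchmaking actually works. The rule is simple: prefer a nearby onsite server that isn't saturated, and otherwise fall back to the fastest reachable server, typically the cloud.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class GameServer:
    name: str
    rtt_ms: Optional[float]  # measured round-trip time in ms; None if unreachable
    is_edge: bool            # True for an onsite/edge deployment (hypothetical)
    load: float              # fraction of capacity in use, 0.0 to 1.0


def pick_server(
    servers: Sequence[GameServer],
    max_edge_rtt_ms: float = 20.0,  # assumed "close enough" threshold for edge servers
    max_load: float = 0.9,          # assumed cap so one server doesn't absorb the whole herd
) -> Optional[GameServer]:
    """Prefer a nearby, non-saturated edge server; otherwise take the fastest reachable one."""
    reachable = [s for s in servers if s.rtt_ms is not None and s.load < max_load]
    nearby_edge = [s for s in reachable if s.is_edge and s.rtt_ms <= max_edge_rtt_ms]
    pool = nearby_edge or reachable  # fall back to any reachable server (e.g. the cloud)
    if not pool:
        return None
    return min(pool, key=lambda s: s.rtt_ms)


servers = [
    GameServer("cloud-us-central", rtt_ms=85.0, is_edge=False, load=0.5),
    GameServer("park-edge-1", rtt_ms=8.0, is_edge=True, load=0.95),
    GameServer("park-edge-2", rtt_ms=12.0, is_edge=True, load=0.4),
]
print(pick_server(servers).name)  # park-edge-2
```

Here `park-edge-1` is skipped because it is over the load cap, so the client lands on `park-edge-2`. If every edge server were saturated, the same call would fall back to the cloud rather than piling more players onto an overloaded box.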

Perhaps in the future we'll have datacenter handoff protocols just like the cell network handoff protocols we have today. And if we really do it right, the big scheduler in the sky that coordinates all these moving parts might treat distributed compute resources, like smartphones, as part of the compute fabric.
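Cellular handoff commonly avoids ping-ponging between towers by requiring a neighbor to be better by some margin (hysteresis) for some period (time-to-trigger) before switching. A datacenter handoff protocol could plausibly borrow the same idea. The sketch below is purely hypothetical: `HandoffController`, its parameters, and the datacenter names are illustrative, and round-trip time stands in for the signal-strength measurements a cell network would use.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class HandoffController:
    current: str                  # datacenter the client is attached to now
    hysteresis_ms: float = 10.0   # a rival must beat the current RTT by this margin
    time_to_trigger: int = 3      # ...for this many consecutive samples before switching
    _candidate: Optional[str] = field(default=None, init=False)
    _count: int = field(default=0, init=False)

    def observe(self, rtts: Dict[str, float]) -> str:
        """Feed one round of RTT samples (ms) per datacenter; return the datacenter to use."""
        current_rtt = rtts.get(self.current, float("inf"))  # missing = unreachable
        best = min(rtts, key=rtts.get)
        if best != self.current and rtts[best] + self.hysteresis_ms < current_rtt:
            # Condition holds: count consecutive samples favoring the same candidate.
            if best == self._candidate:
                self._count += 1
            else:
                self._candidate, self._count = best, 1
            if self._count >= self.time_to_trigger:
                self.current = best  # hand off
                self._candidate, self._count = None, 0
        else:
            # Condition broken: reset so brief blips don't cause ping-ponging.
            self._candidate, self._count = None, 0
        return self.current


ctl = HandoffController(current="dc-a")
samples = [
    {"dc-a": 40.0, "dc-b": 25.0},
    {"dc-a": 42.0, "dc-b": 24.0},
    {"dc-a": 45.0, "dc-b": 20.0},
]
print([ctl.observe(s) for s in samples])  # ['dc-a', 'dc-a', 'dc-b']
```

A single good sample is never enough, and one bad sample resets the count. That trades a little responsiveness for stability, the same trade-off cellular handoff makes.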

We have not yet begun to scale Massively Multiplayer Mobile AR.

In a really far-ranging and insightful interview by Steve Peterson:

Ten million players a day and over fifty million players a month interact socially with friends using