A Well Known But Forgotten Trick: Object Pooling

Wednesday, July 29, 2015 at 8:56AM

This is a guest repost by Alex Petrov. Find the original article here.

Most problems are quite straightforward to solve: when something is slow, you can either optimize it or parallelize it. When you hit a throughput barrier, you partition the workload across more workers. But when you face problems that involve garbage collection pauses, or simply hit the limits of the virtual machine you're working with, they get much harder to fix.

When you're working on top of a VM, you may face things that are simply out of your control, namely time drifts and latency. Fortunately, there are plenty of battle-tested solutions, though they require some understanding of how the JVM works.

If you can serve 10K requests per second within a given performance budget (memory and CPU), that doesn't automatically mean you'll be able to scale linearly to 20K. If you're allocating too many objects on the heap, or wasting CPU cycles on work that can be avoided, you'll eventually hit a wall.

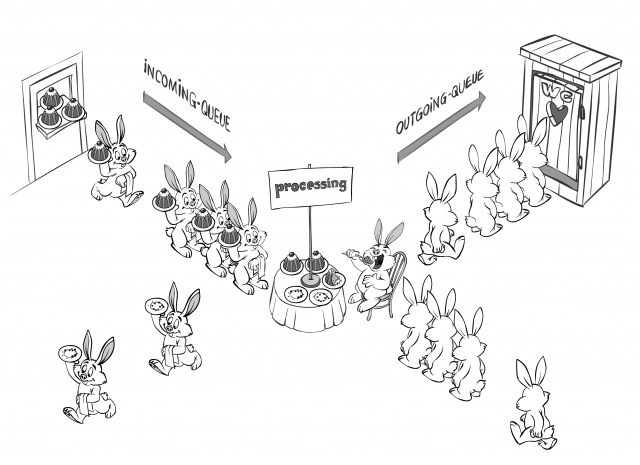

The simplest (yet underrated) way of saving on memory allocations is object pooling. Even though the concept sounds similar to pooling resources such as socket descriptors, there's a slight difference.

When we're talking about socket descriptors, we have a limited, rather small number of them to manage (tens, hundreds, or at most thousands). These resources are pooled because of their high initialization cost (establishing a connection, performing a handshake over the network, memory-mapping a file, or whatever else). In this article we'll talk about pooling larger numbers of short-lived objects that are not so expensive to initialize, in order to save allocation and deallocation costs and avoid memory fragmentation.
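To make the idea concrete, here is a minimal sketch of such a pool. It is not taken from the original article: the `SimplePool` class, its `acquire`/`release` methods, and the `maxIdle` cap are hypothetical names chosen for illustration. It also assumes single-threaded use, so a real implementation would need synchronization or per-thread pools.

```java
import java.util.ArrayDeque;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical, single-threaded object pool (illustrative only, not thread-safe).
final class SimplePool<T> {
    private final ArrayDeque<T> free = new ArrayDeque<>();
    private final Supplier<T> factory;   // creates a new instance when the pool is empty
    private final Consumer<T> reset;     // clears per-use state before an instance is reused
    private final int maxIdle;           // upper bound on idle instances kept around

    SimplePool(Supplier<T> factory, Consumer<T> reset, int maxIdle) {
        this.factory = factory;
        this.reset = reset;
        this.maxIdle = maxIdle;
    }

    // Reuse an idle instance if one exists; otherwise allocate a new one.
    T acquire() {
        T obj = free.pollFirst();
        return obj != null ? obj : factory.get();
    }

    // Reset the instance and keep it for reuse, unless the pool is already full.
    void release(T obj) {
        reset.accept(obj);
        if (free.size() < maxIdle) {
            free.addFirst(obj);
        }
    }

    // Number of instances currently waiting to be reused.
    int idleCount() {
        return free.size();
    }
}
```

A caller might build a pool with `new SimplePool<>(StringBuilder::new, sb -> sb.setLength(0), 64)`, call `acquire()` at the start of a request, and call `release()` when the request is done. The reset step matters: without it, state from the previous use leaks into the next one. The `maxIdle` cap keeps a temporary burst from pinning memory forever.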