10 Tips for Optimizing NGINX and PHP-fpm for High Traffic Sites

Wednesday, April 30, 2014 at 8:57AM

Adrian Singer has boiled down 7 years of experience to a set of 10 very useful tips on how to best optimize NGINX and PHP-fpm for high traffic sites:

- Switch from TCP to UNIX domain sockets. When communicating with processes on the same machine, UNIX sockets perform better than TCP because there's less copying and fewer context switches.

- Adjust worker processes. Set worker_processes in your nginx.conf file to the number of CPU cores your machine has, and increase worker_connections.

- Set up upstream load balancing. Multiple upstream backends on the same machine produce higher throughput than a single one.

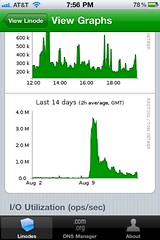
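You can see the TCP-versus-UNIX-socket difference on your own machine by timing small request/response round trips over both transports. The Python sketch below does this with a throwaway echo server. It is a micro-benchmark under assumed parameters (512-byte messages, 2,000 round trips, a temporary socket file). It does not measure PHP-FPM itself, and results will vary by kernel and hardware.

```python
import os
import socket
import tempfile
import threading
import time


def _echo(conn: socket.socket, n: int, size: int) -> None:
    """Receive n messages of `size` bytes each and send every one straight back."""
    with conn:
        for _ in range(n):
            buf = b""
            while len(buf) < size:
                chunk = conn.recv(size - len(buf))
                if not chunk:  # client went away early
                    return
                buf += chunk
            conn.sendall(buf)


def _round_trips(client: socket.socket, n: int, size: int) -> float:
    """Send n fixed-size requests, wait for each echo, return elapsed seconds."""
    payload = b"x" * size
    start = time.perf_counter()
    for _ in range(n):
        client.sendall(payload)
        received = 0
        while received < size:
            chunk = client.recv(size - received)
            if not chunk:
                raise ConnectionError("server closed the connection early")
            received += len(chunk)
    return time.perf_counter() - start


def bench(family: int, n: int = 2000, size: int = 512) -> float:
    """Time n request/response round trips over AF_UNIX or loopback AF_INET."""
    tmpdir = None
    if family == socket.AF_UNIX:
        tmpdir = tempfile.mkdtemp()
        address = os.path.join(tmpdir, "bench.sock")  # throwaway socket file
    else:
        address = ("127.0.0.1", 0)  # port 0 lets the OS pick a free port
    try:
        with socket.socket(family, socket.SOCK_STREAM) as server:
            server.bind(address)
            server.listen(1)
            worker = threading.Thread(
                target=lambda: _echo(server.accept()[0], n, size))
            worker.start()
            with socket.socket(family, socket.SOCK_STREAM) as client:
                if family == socket.AF_INET:
                    # Disable Nagle's algorithm so small writes are not delayed.
                    client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
                client.connect(server.getsockname())
                elapsed = _round_trips(client, n, size)
            worker.join()
    finally:
        if tmpdir is not None:
            if os.path.exists(address):
                os.unlink(address)
            os.rmdir(tmpdir)
    return elapsed


if __name__ == "__main__":
    unix_s = bench(socket.AF_UNIX)
    tcp_s = bench(socket.AF_INET)
    print(f"UNIX socket: {unix_s:.3f}s   TCP loopback: {tcp_s:.3f}s")
```

In a real deployment, you make the switch on both sides. The PHP-FPM pool's `listen` setting points at a socket path instead of an address such as `127.0.0.1:9000`, and nginx uses `fastcgi_pass unix:/path/to/php-fpm.sock;`. The path shown is only a placeholder.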
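The worker and upstream tips both come down to a few lines in nginx.conf. The Python sketch below renders such a fragment. It sets worker_processes to the detected CPU count and puts several UNIX-socket backends into one upstream group. The group name `php_backend`, the socket paths, and the worker_connections value of 4096 are hypothetical choices, not values from the original article. A location block would then send requests to the pool with `fastcgi_pass php_backend;`. Newer nginx versions can also detect the core count themselves with `worker_processes auto;`.

```python
import os


def render_nginx_snippet(backend_sockets: list[str], worker_connections: int = 4096) -> str:
    """Build nginx.conf text: one worker per CPU core plus a FastCGI upstream pool.

    `php_backend` and the socket paths passed in are hypothetical placeholders.
    """
    workers = os.cpu_count() or 1  # os.cpu_count() can return None
    servers = "\n".join(f"        server unix:{path};" for path in backend_sockets)
    return (
        f"worker_processes {workers};\n"
        "\n"
        "events {\n"
        f"    worker_connections {worker_connections};\n"
        "}\n"
        "\n"
        "http {\n"
        "    upstream php_backend {\n"
        f"{servers}\n"
        "    }\n"
        "}\n"
    )


def max_clients(workers: int, worker_connections: int, proxied: bool = True) -> int:
    """Rough ceiling on concurrent clients.

    worker_connections counts every connection a worker holds, so a proxied
    request uses two: one from the client and one to the backend.
    """
    per_request = 2 if proxied else 1
    return workers * worker_connections // per_request


if __name__ == "__main__":
    print(render_nginx_snippet(["/var/run/php-fpm-1.sock", "/var/run/php-fpm-2.sock"]))
    print("max clients:", max_clients(os.cpu_count() or 1, 4096))
```

By default, nginx distributes requests across the `server` entries of an upstream group round-robin. Raising worker_connections therefore only pays off if there are enough backends to absorb the extra load.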

- Disable access log files. On high traffic sites, logging involves a lot of I/O that has to be synchronized across all threads, which can have a big impact.

- Enable GZip.

- Cache information about frequently accessed files.

- Adjust client timeouts.

- Adjust output buffers.

- Tune kernel parameters in /etc/sysctl.conf.

- Monitor. Continually monitor the number of open connections, free memory and number of waiting threads and set alerts if thresholds are breached. Install the NGINX stub_status module.
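Enabling gzip trades CPU time for bandwidth. To estimate the saving on typical text responses, the Python sketch below compresses a sample HTML payload with the standard gzip module. The payload and compression level 5 are arbitrary assumptions. In nginx, the corresponding settings are the `gzip`, `gzip_comp_level` and `gzip_types` directives.

```python
import gzip


def gzip_savings(body: bytes, level: int = 5) -> tuple[int, int]:
    """Return (original_size, gzipped_size) for a response body."""
    compressed = gzip.compress(body, compresslevel=level)
    return len(body), len(compressed)


if __name__ == "__main__":
    # Hypothetical, deliberately repetitive HTML standing in for a real page.
    rows = "".join(f"<tr><td>item {i}</td><td>in stock</td></tr>\n" for i in range(500))
    html = f"<html><body><table>\n{rows}</table></body></html>".encode()
    before, after = gzip_savings(html)
    print(f"{before} bytes -> {after} bytes ({100 * after / before:.1f}% of original)")
```

Higher levels shrink output a little more at a noticeably higher CPU cost. On a busy server, a middle level is a common compromise.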
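In nginx, caching information about frequently accessed files is done with `open_file_cache`. Its companions are `open_file_cache_valid`, which controls revalidation, and `open_file_cache_errors`, which also caches "file not found" results. The Python class below is only an analogy for the idea, not nginx's implementation. It keeps `os.stat()` results for a fixed TTL so that repeated lookups skip the filesystem, including negative answers. The 30-second TTL and the `StatCache` name are hypothetical.

```python
import os
import time
from typing import Callable, Optional


class StatCache:
    """Cache os.stat() results for `ttl` seconds, including 'file not found' answers."""

    def __init__(self, ttl: float = 30.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock  # injectable so tests can control time
        self._entries: dict[str, tuple[float, Optional[os.stat_result]]] = {}
        self.hits = 0
        self.misses = 0

    def stat(self, path: str) -> Optional[os.stat_result]:
        now = self.clock()
        entry = self._entries.get(path)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1  # still fresh: answer without touching the filesystem
            return entry[1]
        self.misses += 1
        try:
            result: Optional[os.stat_result] = os.stat(path)
        except FileNotFoundError:
            result = None  # negative caching, similar in spirit to open_file_cache_errors
        self._entries[path] = (now, result)
        return result
```

The trade-off is staleness: a changed or deleted file can be reported incorrectly until its entry expires. For this reason, the TTL should stay short on sites whose files change often.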
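The stub_status page serves a small plain-text report. It comes from ngx_http_stub_status_module, which is not built by default (`--with-http_stub_status_module`) and is exposed with the `stub_status` directive in a location block. The report gives active connections, cumulative accepts/handled/requests, and the current Reading/Writing/Waiting counts. Waiting is the number of idle keep-alive client connections. The Python sketch below parses that report and applies alert thresholds. The URL passed to `fetch_status` (for example `http://127.0.0.1/nginx_status`) and the threshold values are assumptions you would set for your own site.

```python
import re
import urllib.request

_ACTIVE = re.compile(r"Active connections:\s*(\d+)")
_TOTALS = re.compile(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", re.MULTILINE)
_STATES = re.compile(r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)")


def fetch_status(url: str, timeout: float = 5.0) -> str:
    """Download the stub_status page (the URL is site-specific)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("ascii")


def parse_stub_status(text: str) -> dict[str, int]:
    """Turn stub_status text into a dict of integer counters."""
    active, totals, states = _ACTIVE.search(text), _TOTALS.search(text), _STATES.search(text)
    if not (active and totals and states):
        raise ValueError("unrecognised stub_status output")
    accepts, handled, requests = (int(g) for g in totals.groups())
    reading, writing, waiting = (int(g) for g in states.groups())
    return {"active": int(active.group(1)), "accepts": accepts, "handled": handled,
            "requests": requests, "reading": reading, "writing": writing, "waiting": waiting}


def check_thresholds(stats: dict[str, int], max_active: int, max_waiting: int) -> list[str]:
    """Return human-readable alerts for every breached threshold."""
    alerts = []
    if stats["active"] > max_active:
        alerts.append(f"active connections {stats['active']} > {max_active}")
    if stats["waiting"] > max_waiting:
        alerts.append(f"waiting connections {stats['waiting']} > {max_waiting}")
    if stats["handled"] < stats["accepts"]:
        # handled falls behind accepts when a resource limit such as worker_connections is hit
        alerts.append(f"{stats['accepts'] - stats['handled']} accepted connections were not handled")
    return alerts
```

Because accepts, handled and requests are cumulative since nginx started, a monitoring job would normally store the previous sample and alert on the rate of change rather than the raw totals.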

Please take a look at the original article as it includes excellent configuration file examples.