10 Common Server Setups For Your Web Application

Wednesday, September 10, 2014 at 8:56AM

If you need an overview of the different ways to set up your web service, Mitchell Anicas has written a good article for you: 5 Common Server Setups For Your Web Application.

We've even included a few additional possibilities at no extra cost.

- Everything on One Server. Simple. Potential for poor performance because of resource contention. Not horizontally scalable.

- Separate Database Server. There's an application server and a database server. Application and database don't share resources. Can independently vertically scale each component. Increases latency because the database is a network hop away.

- Load Balancer (Reverse Proxy). Distributes workload across multiple servers. Enables horizontal scaling. Can protect against DDoS attacks using rules. Adds complexity. Can be a performance bottleneck. Complicates issues like SSL termination and sticky sessions.

- HTTP Accelerator (Caching Reverse Proxy). Caches web responses in memory so they can be served faster. Reduces CPU load on web server. Compression reduces bandwidth requirements. Requires tuning. A low cache-hit rate could reduce performance.

- Master-Slave Database Replication. Can improve read performance by spreading reads across the slaves, and write performance by leaving the master free to handle updates. Adds a lot of complexity and failure modes.

- Load Balancer + Cache + Replication. Combines load balancing the caching servers and the application servers, along with database replication. Nice explanation in the article.

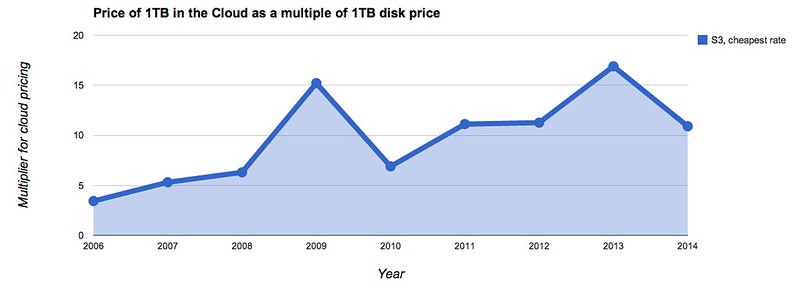
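To make the load balancer entry more concrete, here is a minimal sketch of two selection strategies a reverse proxy might use: plain round-robin, and a hash-based "sticky" choice that keeps one session on one backend. The class names and the backend labels are hypothetical, invented for illustration; real load balancers such as HAProxy or Nginx implement this in their own configuration and also handle health checks, which this sketch ignores.

```python
import hashlib
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation (hypothetical helper)."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(self._backends)

    def pick(self):
        # Each call returns the next backend in the rotation.
        return next(self._rotation)


class StickyBalancer:
    """Maps a session ID to the same backend every time (hypothetical helper)."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")

    def pick(self, session_id):
        # Use a stable hash (not Python's built-in hash(), which is salted
        # per process) so that every proxy instance agrees on the mapping.
        digest = hashlib.sha256(session_id.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._backends)
        return self._backends[index]


if __name__ == "__main__":
    rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([rr.pick() for _ in range(4)])

    sticky = StickyBalancer(["app-1", "app-2", "app-3"])
    print(sticky.pick("session-abc") == sticky.pick("session-abc"))
```

Round-robin spreads load evenly but lets consecutive requests from one user land on different servers, which is why session state becomes an issue. The sticky variant avoids that, at the cost of remapping many sessions whenever the backend list changes.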

- Database-as-a-Service (DBaaS). Let someone else run the database for you. RDS is one example from Amazon and there are hosted versions of many popular databases.

- Backend as a Service (BaaS). If you are writing a mobile application and you don't want to deal with the backend component then let someone else do it for you. Just concentrate on the mobile platform. That's hard enough. Parse and Firebase are popular examples, but there are many more.

- Platform as a Service (PaaS). Let someone else run most of your backend, but you get more flexibility than you have with BaaS to build your own application. Google App Engine, Heroku, and Salesforce are popular examples, but there are many more.

- Let Someone Else Do It. Do you really need servers at all? If you have a store then a service like Etsy saves a lot of work for very little cost. Does someone already do what you need done? Can you leverage it?
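As an illustration of the master-slave replication entry in the list above, the following sketch shows one way an application could route queries: writes go to the master, reads rotate across the slaves. The `ReplicatedDatabaseRouter` class, its verb list, and the server labels are assumptions made up for this example, not part of the original article. Classifying a query by its first SQL keyword is a deliberate simplification.

```python
from itertools import cycle


class ReplicatedDatabaseRouter:
    """Chooses a database server for a SQL statement (hypothetical helper)."""

    # Statements that modify data or schema must go to the master.
    WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE",
                   "CREATE", "ALTER", "DROP"}

    def __init__(self, master, slaves):
        self._master = master
        slaves = list(slaves)
        # With no slaves configured, every query falls back to the master.
        self._slaves = cycle(slaves) if slaves else None

    def route(self, sql):
        stripped = sql.strip()
        verb = stripped.split(None, 1)[0].upper() if stripped else ""
        if verb in self.WRITE_VERBS or self._slaves is None:
            return self._master
        # Reads rotate across the slaves in round-robin order.
        return next(self._slaves)


if __name__ == "__main__":
    router = ReplicatedDatabaseRouter("db-master", ["db-slave-1", "db-slave-2"])
    print(router.route("SELECT * FROM users"))
    print(router.route("UPDATE users SET name = 'x' WHERE id = 1"))
```

Because replication is usually asynchronous, a read sent to a slave right after a write may return stale data. That is one of the failure modes that makes this setup more complex than a single database server.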

