Strategy: Run a Scalable, Available, and Cheap Static Site on S3 or GitHub

Monday, August 22, 2011 at 9:10AM

Monday, August 22, 2011 at 9:10AM One of the best projects I've ever worked on was creating a large scale web site publishing system that was almost entirely static. A large team of very talented creatives made the art work, writers penned the content, and designers generated templates. All assets were controlled in a database. Then all that was extracted, after applying many different filters, to a static site that was uploaded via ftp to dozens of web servers. It worked great. Reliable, fast, cheap, and simple. Updates were a bit of a pain as it required pushing a lot of files to a lot of servers, and that took time, but otherwise a solid system.

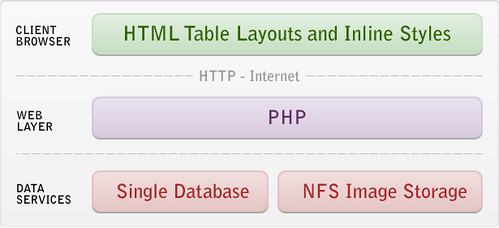

Alas, this elegant system was replaced with a new fangled dynamic database based system. Content was pulled from a database using a dynamic language generated front-end. With a recent series of posts from Amazon's Werner Vogels, chronicling his experience of transforming his All Things Distributed blog into a static site using S3's ability to serve web pages, I get the pleasure of asking: are we back to static sites again?

It's a pleasure to ask the question because in many ways a completely static site is the holly grail of content heavy sites. A static site is one in which files (html, images, sound, movies, etc) sit in a filesystem and a web server directly translates a URL to a file that is directly reads the file from the file system and spits it out to the browser via a HTTP request. Not much can go wrong in this path. Not much going wrong is a virtue. It means you don't need to worry about things. It will just work. And it will just keep on working over time, bit rot hits program and service heavy sites a lot harder than static sites.

Here's how Werner makes his site static:

You may not scale often, but when you scale, please drink HighScalability:

You may not scale often, but when you scale, please drink HighScalability: