Google, with justly earned pride, recently announced:

Today at the 2015 Open Network Summit, we are revealing for the first time the details of five generations of our in-house network technology. From Firehose, our first in-house datacenter network, ten years ago to our latest-generation Jupiter network, we’ve increased the capacity of a single datacenter network more than 100x. Our current generation — Jupiter fabrics — can deliver more than 1 Petabit/sec of total bisection bandwidth. To put this in perspective, such capacity would be enough for 100,000 servers to exchange information at 10Gb/s each, enough to read the entire scanned contents of the Library of Congress in less than 1/10th of a second.

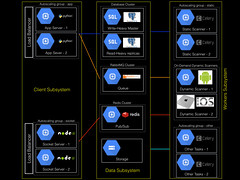

Google’s datacenter network is the magic behind what makes much of Google really work. But what is “bisection bandwidth” and why does it matter? We talked about bisection bandwidth a while back in Changing Architectures: New Datacenter Networks Will Set Your Code And Data Free. In short, bisection bandwidth measures how much traffic the network can carry between two halves of the datacenter when it is split down the middle, which makes it a good proxy for how fast Google’s servers can talk to each other.
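The figures in the announcement are easy to sanity-check. As a back-of-the-envelope sketch (the function name is made up for illustration, and the numbers are taken at face value from Google’s quote), 100,000 servers each sending at 10 Gb/s add up to 1 Pb/s:

```python
PETABIT = 1e15  # bits in one petabit


def aggregate_bandwidth_bps(servers: int, per_server_gbps: float) -> float:
    """Total bits/sec if every server sends at its full rate at the same time."""
    return servers * per_server_gbps * 1e9


# Numbers from the announcement: 100,000 servers at 10 Gb/s each.
total = aggregate_bandwidth_bps(100_000, 10)
print(f"{total / PETABIT} Pb/s")
```

This only checks the arithmetic in the quote. It says nothing about how the fabric is wired to deliver that capacity.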

Historically, datacenter networks were oriented around talking to users. Let’s say a request for a web page came in from a browser. The request would go to a server, which would craft a reply by talking to just a few other servers, or perhaps none at all, and send the reply back to the client. This style of network is called a North/South oriented network. Very little internal communication was needed to implement a request.

That all changed as website and API services grew richer over time. Now literally thousands of backend requests can be made to create a single web page. Mind blowing. This meant communication shifted from being dominated by talking to users to being dominated by talking to other machines within a datacenter. Networks built for this pattern are called East/West oriented networks.

The shift to East/West dominated communication patterns meant a different topology was needed for datacenter networks. The old traditional fat tree network designs were out and something new needed to take their place.

Google has been at the forefront of developing new network designs that support rich services, largely because of their guiding vision of seeing The Datacenter as a Computer. Once your datacenter is the computer, your network is equivalent to the backplane of a single computer, so it must be as fast and reliable as possible so remote disk and remote storage can be accessed as if they were local.

Google’s efforts revolve around a three pronged plan of attack: use a Clos topology, use SDN (Software Defined Networking), and build their own custom gear in their own Googlish way.

Until now we’ve had limited exposure to Google’s network designs. While we don’t exactly have an all access pass, Amin Vahdat, Google Fellow and Technical Lead for networking at Google, shared a lot of juicy details in a great talk: ONS [Open Networking Summit] 2015: Wednesday Keynote. There’s also a paper: Jupiter Rising: A Decade of Clos Topologies and Centralized Control in Google’s Datacenter Network.

Why release details earlier than they usually do? Google has some real competition with Amazon and they need to find compelling points of differentiation. Google hopes their datacenter network is one such point.

So what makes Google different? The overall message:

- The end of Moore’s Law means how programs are built is changing.

- Google has figured it out. Google knows how to build great networks and achieve proper datacenter balance.

- You can prosper by taking advantage of the innovations and capabilities of Google’s Cloud Platform, the very same platform that powers Google Search.

- So climb on board, the network is fine!

Is that enough? Perhaps it's not a message with mass appeal, but it may find a home with the discriminating buyer.

Some key points from the talk for me:

- We don’t know how to build big networks that deliver lots of bandwidth. Google says their network provides 1 Pb/sec of total bisection bandwidth, but it turns out that’s not nearly enough. To support a datacenter’s worth of large compute servers you’ll need 5 Pb/sec networks. Keep in mind the entire internet today is probably near 200 Tb/sec.

- It’s more efficient to schedule jobs over huge clusters. Otherwise you have leftover CPU in one place and leftover memory in another. So if you can build your system correctly, a datacenter scale computer gives you a decided economy of scale.

- Google built their datacenter network system using lessons they learned from the server and storage world: scale out, logically centralize, use commodity components, and never ever manage singlets of anything. Manage all your servers, storage, and networks as a unified whole.

- The I/O gap is huge. Amin says it has to get solved; if it doesn’t, we’ll stop innovating. Storage capacity has increased through disaggregation. The opportunity is to access global datacenter storage as if it were local. This will get harder and harder with flash and NVM. A new tier of flash and NVM will completely change programming models. Note: unfortunately he didn’t expand on this notion, I dearly wished he had. Amin, can we talk?
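The point about leftover CPU in one place and leftover memory in another can be made concrete with a toy calculation. This is only a sketch with hypothetical numbers: the `Cluster` type and `jobs_that_fit` helper are invented for illustration, and treating the merged spare capacity as one fungible pool is an idealization that a real scheduler can only approximate.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    free_cpu: int     # spare cores
    free_mem_gb: int  # spare memory in GB


def jobs_that_fit(cluster: Cluster, job_cpu: int, job_mem_gb: int) -> int:
    """Number of identical jobs that fit in a cluster's spare capacity."""
    return min(cluster.free_cpu // job_cpu, cluster.free_mem_gb // job_mem_gb)


# Hypothetical leftovers: one cluster is short on memory, the other on CPU.
a = Cluster(free_cpu=64, free_mem_gb=32)
b = Cluster(free_cpu=8, free_mem_gb=512)
job_cpu, job_mem_gb = 8, 32

# Scheduled separately, each cluster is limited by its scarce resource.
separate = jobs_that_fit(a, job_cpu, job_mem_gb) + jobs_that_fit(b, job_cpu, job_mem_gb)

# Scheduled as one big pool, the leftovers complement each other.
pool = Cluster(a.free_cpu + b.free_cpu, a.free_mem_gb + b.free_mem_gb)
pooled = jobs_that_fit(pool, job_cpu, job_mem_gb)

print(separate, pooled)  # 2 9
```

With these made-up numbers, the two clusters place 2 jobs when scheduled separately and 9 when treated as a single pool, which is the economy of scale the talk is pointing at.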

What you look for in a good story are characters that act from a core identity. Here we see Google operating from a unique vision that grew organically from their deep experience building scalable software systems. Probably only Google would have had the guts to follow their vision through and build a datacenter network so completely different from accepted wisdom. That takes huge huevos. And it makes for a good story.

Here’s my hopelessly inadequate gloss on the talk:


Monday, September 7, 2015 at 8:56AM
