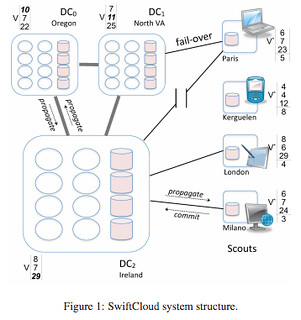

Paper: SwiftCloud: Fault-Tolerant Geo-Replication Integrated all the Way to the Client Machine

Thursday, May 15, 2014 at 9:05AM

So how do you knit multiple datacenters and many thousands of phones and other clients into a single cooperating system?

Usually you don't. It's too hard. We see nascent attempts in services like Firebase and Parse.

SwiftCloud, as described in the paper SwiftCloud: Fault-Tolerant Geo-Replication Integrated all the Way to the Client Machine, goes two steps further by leveraging Conflict-free Replicated Data Types (CRDTs), which means "data can be replicated at multiple sites and be updated independently with the guarantee that all replicas converge to the same value. In a cloud environment, this allows a user to access the data center closer to the user, thus optimizing the latency for all users."
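To make the convergence guarantee concrete, here is a minimal sketch of one of the simplest CRDTs, a grow-only counter. It is not SwiftCloud's API; the class name `GCounter`, its methods, and the replica names are hypothetical and chosen only for illustration. Each replica increments its own slot, and replicas merge by taking the per-slot maximum, so the order and number of merges don't matter.

```python
from dataclasses import dataclass, field


@dataclass
class GCounter:
    """Grow-only counter CRDT (illustrative, not SwiftCloud code)."""

    replica_id: str
    counts: dict = field(default_factory=dict)  # replica id -> local count

    def increment(self, amount: int = 1) -> None:
        # A replica only ever increases its own slot.
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def value(self) -> int:
        # The counter's value is the sum over all known replicas.
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Per-slot max is commutative, associative, and idempotent,
        # so replicas converge regardless of merge order or repetition.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)


# Hypothetical replicas: one in a data center, one on a phone.
dc = GCounter("datacenter-eu")
phone = GCounter("phone-42")

dc.increment(3)      # updates applied independently...
phone.increment(2)

dc.merge(phone)      # ...then exchanged in either order
phone.merge(dc)
phone.merge(dc)      # merging twice changes nothing

assert dc.value() == phone.value() == 5
```

The design point is that no coordination is needed at update time: each side accepts writes locally and reconciles later, which is what lets a client keep working against its nearest replica.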

While we don't see these kinds of systems just yet, they are strong candidates for how things will work in the future, efficiently using resources at every level while supporting huge numbers of cooperating users.

Abstract: