If you've wondered why I haven't been posting lately, it's because I've been on an amazing Beach's motorcycle tour of the Alps. My wife (Linda) and I rode two-up on a BMW 1200 GS through the Alps in Germany, Austria, Switzerland, Italy, Slovenia, and Liechtenstein.

The trip was more beautiful than I ever imagined. We rode challenging mountain pass after mountain pass, froze in the rain, baked in the heat, woke up on excellent Italian coffee, ate slice after slice of tasty apple strudel, drank dazzling local wines, smelled the fresh cut grass as the Swiss en masse cut hay for the winter feeding of their dairy cows, rode the amazing Munich train system, listened as cow bells tinkled like wind chimes throughout small valleys, drank water from a pure alpine spring on a blisteringly hot hike, watched local German folk dancers represent their regions, and had fun in the company of fellow riders. Magical.

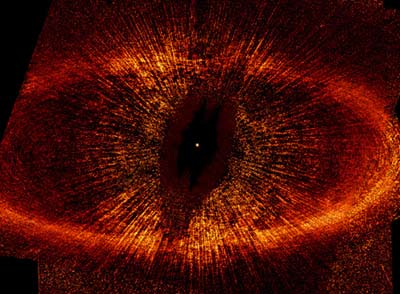

They say you'll ride more twists and turns on this trip than all the rest of your days riding put together. I almost believe that now. It wasn't uncommon at all to have 40 hairpin turns up one side of the pass and another 40 on the way down. And you could easily ride over 5 passes a day. Take a look at the above picture for one of the easier examples.

Which leads me to the subject of this post. It's required by the Official Blogger Handbook after a vacation to conjure some deep insight tying the vacation experience to the topic of the blog. I got nada. Really. As you might imagine, motorcycling and scalability don't do much to explain each other. Except perhaps for one idea that I pondered a bit while riding through hills that were alive with music: target fixation. Target fixation is the simple notion that the bike goes where you look. Focus on an obstacle and you'll hit the obstacle, even though you are trying to avoid it. The brain focuses so intently on an object that you end up colliding with it. So the number one rule of riding is: look where you want to go. Or in true self-help speak: focus on the solution instead of the problem. Here's a great YouTube video showing what can happen. And here's another...

It may be hard to believe target fixation exists as a serious risk. But it's frustratingly true and it's a problem across all human endeavors. If you've ever driven a car and have managed to hit the one pothole in the road that you couldn't take your eyes off--that's target fixation. Paragliders who want to avoid the lone tree in a large barren field can still manage to hit that tree because they become fixated on it. Fighter pilots would tragically concentrate on their gun sights so completely they would fly straight into the ground. Skiers who look at trees instead of the spaces in between slam into a cold piny embrace. Mountain bikers who focus on the one big rock will watch that rock as they tumble after. But target fixation isn't just about physical calamity. People can mentally stick to a plan that is failing because all they can see is the plan and they ignore the ground rushing up to meet them. This is where the framework fixation that we'll talk about a little later comes in.

But for now pretend to be a motorcycle rider for a second. Imagine you are in one of those hairpin turns in the above picture. You are zooming along. You just masterfully passed a double-decker tour bus and you are carrying a lot of speed into the turn. The corner gets closer and closer. Even closer. Stress levels jump. Corners are scary. Your brain suddenly jumps to a shiny thing off to the side of the road. The shiny thing is all you can see in your mind even though you know the corner looms and you must act. The shiny thing can be anything. In honor of Joey Chestnut's heroic defeat of Kobayashi at Nathan’s Famous Hot Dog Eating Competition, I inserted a giant hot dog as a possible distraction in the photo. But maybe it's a cow with a particularly fine bell. Or a really cool castle ruin. A picture-perfect waterfall. Or maybe it's the fact that there's no guardrail, the fall is a 4,000-foot drop, and a really big truck is coming into your lane. Whatever the distraction, when you focus on that shiny thing you'll drive to it and fly off the corner. That's target fixation. Your brain will guide you to what you are focused on, not where you want to go. I've done it. Even really good riders do it. Maybe we've all done it.

In true Ninja fashion we can turn target fixation to our advantage. On entering a turn, pick a line, scrub off speed before beginning the turn, and turn your head to look up the road where you want to go. You will end up making a perfect turn with no conscious effort. Your body will automatically make all the adjustments needed to carry out the turn because you are looking where you want to go, which is the stretch of road after the turn. This even works in really tight obstacle courses where you need to literally turn on a dime. Now at first you don't believe this. You think you must consciously control your every movement at all times or the world will fall into a chaotic mess. But that's not so. If you want to screw up someone's golf game, ask them to explain their swing to you. Once they consciously start thinking about their swing they won't be able to do it anymore. This is because about half the 100 billion neurons in your brain are dedicated to learned unconscious motor movement. There's a lot of physical hardware in your brain dedicated to helping you throw a rock to take down a deer for dinner. Once your clumsy conscious mind interferes, all that hard-won expertise looks like a 1960s AI experiment gone terribly wrong.

Frameworks can also cause a sort of target fixation. As an example, let's say you are building a microblogging product and you pick a framework that makes creating an ORM-based system easy, clean, and beautiful. This approach works fine for a while. Then you take off and grow at an enviable rate. But you are having a problem scaling to meet the new demand. So you keep working and reworking the ORM framework, trying to get it to scale. It's not working. But the ORM tool is so shiny that it's hard to consider another, possibly more appropriate, scaling architecture. You end up missing the corner and flying off the side of the road, wondering what the heck happened.

That's the downside of framework fixation. You spend so much time trying to frame your problem in terms of the framework that you lose sight of where you are trying to go. In the microblogging case the ORM framework is completely irrelevant to the microblogging product, yet most of the effort goes into making the ORM scale instead of stepping back and implementing an approach that will let you just turn your head and let all the other unconscious processes make the turn for you.

Framework Fixation Solutions

How can you avoid the framework fixation crash?

Realize framework fixation exists. Be mindful when hitting a tough problem that you may be focusing on a shiny distraction rather than solving a problem.

Focus on where you want to go. In whitewater river rafting they teach you not to point to the danger, but instead to point to a safe route that avoids the danger. Let's say there's a big hole or a strainer you should know about. Your first reaction is to point to the danger. But that sets up a target fixation problem. You are more likely to hit what is being pointed to than avoid it. So you are taught to point to the safe route to take rather than the dangerous route to avoid. This cuts down on a lot of possible mistakes. It's also a good strategy for frameworks. Have a framework in which you do the right thing naturally rather than use a framework in which you can succeed if you manage to navigate the dozens of hidden dangers. Don't be afraid to devote half your neurons to solving this problem.

Use your brain to pick the right target. It really sucks to pick a wrong target and crash anyway.

Keep your thinking processes simple. Information overload can lead to framework fixation. As situations become more and more complicated it becomes easier and easier to freeze up. Find a way to solve a problem at the right abstraction level.

Build up experience through practice. Looking away from a shiny thing is one of the most difficult things in the world to do. Until you experience it, it's hard to believe how difficult it can be. Looking away takes a lot of conscious effort. Looking away is a sort of muscle built through the experience of looking where you should be going. The more you practice, the more you can control the dangerous impulse to look at shiny things. This problem exists at every level of development; it's not just limited to frameworks.

Related Articles

Target Fixation for Paragliders by Joe Bosworth.

Driving Review: Target Fixation ... Something Worth Looking At! by Mick Farmer.

Tuesday, July 15, 2008 at 12:45AM