Pixable Architecture - Crawling, Analyzing, and Ranking 20 Million Photos a Day

Tuesday, February 21, 2012 at 9:15AM

This is a guest post by Alberto Lopez Toledo, PhD, CTO of Pixable, and Julio Viera, VP of Engineering at Pixable.

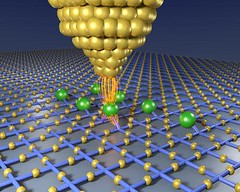

Pixable aggregates photos from across your different social networks and finds the best ones so you never miss an important moment. That currently means processing the metadata of more than 20 million new photos per day: crawling, analyzing, ranking, and sorting them alongside the 5+ billion already stored in our database. Making sense of all that data has its challenges, but two in particular rise above the rest:

- How to access millions of photos per day from Facebook, Twitter, Instagram, and other services in the most efficient manner.

- How to process, organize, index, and store all the metadata related to those photos.

Sure, Pixable’s infrastructure is changing continuously, but we have learned a few things over the last year. As a result, we have been able to build a scalable infrastructure that takes advantage of today’s tools, languages, and cloud services, all running on Amazon Web Services, where we operate more than 80 servers. This document provides a brief introduction to those lessons: